Least Squares as a Maximum Likelihood estimator

This video explains how Ordinary Least Squares regression can be regarded as an example of Maximum Likelihood estimation.
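
The connection can be checked numerically. The following is a minimal sketch, not taken from the video: it assumes a simple linear model y = a + b*x with independent Gaussian errors of known standard deviation sigma, and the helper names (`ols_fit`, `sum_sq_residuals`, `gaussian_neg_log_lik`) and the toy data are made up for illustration. Under that assumption the negative log-likelihood equals a constant plus the sum of squared residuals divided by 2*sigma^2, so the (a, b) pair that minimizes the squared error also maximizes the likelihood, whatever the value of sigma.

```python
import math

def ols_fit(xs, ys):
    """Closed-form least-squares intercept and slope for y = a + b*x."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b = sxy / sxx
    a = y_bar - b * x_bar
    return a, b

def sum_sq_residuals(a, b, xs, ys):
    """Sum of squared residuals, the quantity least squares minimizes."""
    return sum((y - a - b * x) ** 2 for x, y in zip(xs, ys))

def gaussian_neg_log_lik(a, b, sigma, xs, ys):
    """Negative log-likelihood assuming i.i.d. N(0, sigma^2) errors (an assumption)."""
    n = len(xs)
    const = 0.5 * n * math.log(2 * math.pi * sigma ** 2)
    return const + sum_sq_residuals(a, b, xs, ys) / (2 * sigma ** 2)

# Hypothetical toy data, for illustration only.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]

a_hat, b_hat = ols_fit(xs, ys)

# Any move away from the least-squares estimates raises the negative
# log-likelihood, i.e. lowers the likelihood, for every sigma tried.
for sigma in (0.5, 1.0, 2.0):
    best = gaussian_neg_log_lik(a_hat, b_hat, sigma, xs, ys)
    for da, db in ((0.1, 0.0), (0.0, 0.1), (-0.1, 0.05)):
        worse = gaussian_neg_log_lik(a_hat + da, b_hat + db, sigma, xs, ys)
        assert worse > best
```

The equivalence relies on the Gaussian error assumption; with a different error distribution the maximum likelihood estimate would generally differ from the least-squares one.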

Least Squares vs Maximum Likelihood

MLE vs OLS | Maximum likelihood vs least squares in linear regression

GLM Intro - 2 - Least Squares vs. Maximum Likelihood

Deriving Linear Least Squares with Maximum Likelihood

Maximum Likelihood Hypothesis and Least Squared Error Hypothesis by Mahesh Huddar

Deep Learning Lecture 2.2 - Linear Least Squares

#41 Maximum Likelihood & Least Squared Error Hypothesis |ML|

2023 IEB MATHEMATICS PAPER 2 | QUESTION 1 | STATISTICS | LEAST SQUARES REGRESSION LINE EQUATION

Simple Linear Regression MLE are the same as LSE

Maximum Likelihood, clearly explained!!!

Least squares comparison with Maximum Likelihood - proof that OLS is BUE

Least Squares - 5 Minutes with Cyrill

Deriving the least squares estimators of the slope and intercept (simple linear regression)

Linear Least Squares to Solve Nonlinear Problems

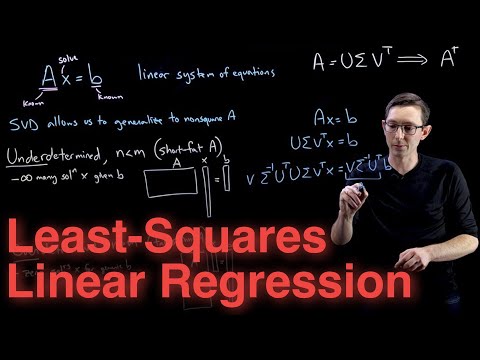

Linear Systems of Equations, Least Squares Regression, Pseudoinverse

noc18-ee31-Lec 41 | Applied Optimization | Least Squares problem | IIT Kanpur

3.1.1 & 3.1.3: Maximum likelihood and least squares, and sequential learning

Maximum Likelihood Hypothesis | Least squared Error | Gaussian Distribution by Mahesh Huddar

Lec 05: Least Squares and Maximum likelihood Estimation of Parameters

6840-09-21-5: Linear models - Least square estimator as MLE

Derivation of Recursive Least Squares Method from Scratch - Introduction to Kalman Filter

Maximum Likelihood : Data Science Concepts

Least Squares Estimators - in summary
