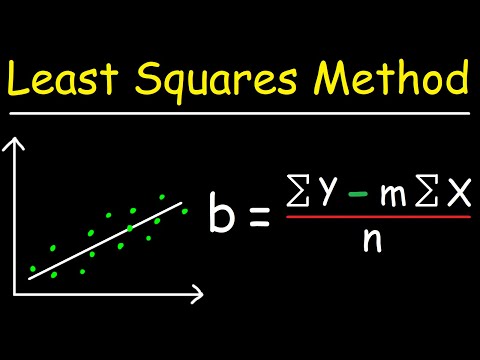

Deriving the least squares estimators of the slope and intercept (simple linear regression)


I derive the least squares estimators of the slope and intercept in simple linear regression, using summation notation and no matrices. I assume that the viewer has already been introduced to the linear regression model, but I do provide a brief review in the first few minutes. I also assume a basic knowledge of differential calculus, including the power rule and the chain rule.
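For readers who want the shape of the argument before watching, here is a compact sketch of a summation-notation derivation. The symbols $S$, $b_0$, $b_1$ and the model $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$ are assumptions chosen for illustration; the video's own notation and ordering of steps may differ.

```latex
\begin{align*}
S(b_0, b_1) &= \sum_{i=1}^{n} (Y_i - b_0 - b_1 X_i)^2 \\[4pt]
\frac{\partial S}{\partial b_0} &= -2 \sum_{i=1}^{n} (Y_i - b_0 - b_1 X_i) = 0
  \;\Longrightarrow\; b_0 = \bar{Y} - b_1 \bar{X} \\[4pt]
\frac{\partial S}{\partial b_1} &= -2 \sum_{i=1}^{n} X_i (Y_i - b_0 - b_1 X_i) = 0 \\[4pt]
\text{substituting } b_0:\quad
0 &= \sum_{i=1}^{n} X_i (Y_i - \bar{Y}) - b_1 \sum_{i=1}^{n} X_i (X_i - \bar{X}) \\[4pt]
b_1 &= \frac{\sum_{i=1}^{n} X_i (Y_i - \bar{Y})}{\sum_{i=1}^{n} X_i (X_i - \bar{X})}
     = \frac{\sum_{i=1}^{n} (X_i - \bar{X})(Y_i - \bar{Y})}{\sum_{i=1}^{n} (X_i - \bar{X})^2}
\end{align*}
```

Both partial derivatives use the power rule and the chain rule, which is why those are listed as prerequisites.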

If you are already familiar with the problem and are just looking for help with the mathematics, the derivation starts at 3:26.

At the end of the video, I illustrate that $\sum (X_i - \bar{X})(Y_i - \bar{Y}) = \sum X_i (Y_i - \bar{Y}) = \sum Y_i (X_i - \bar{X})$, and that $\sum (X_i - \bar{X})^2 = \sum X_i (X_i - \bar{X})$.
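These identities hold because deviations from a mean sum to zero, so a term such as $\bar{X} \sum (Y_i - \bar{Y})$ vanishes. A minimal numerical check in plain Python may make that concrete. The function name `centered_sums` and the sample data are hypothetical, chosen only for illustration.

```python
import math


def centered_sums(x, y):
    """Return the different forms of the cross-product and squared sums."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    return {
        # Three forms of the cross-product sum
        "sxy_centered": sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)),
        "sxy_x_raw": sum(xi * (yi - y_bar) for xi, yi in zip(x, y)),
        "sxy_y_raw": sum(yi * (xi - x_bar) for xi, yi in zip(x, y)),
        # Two forms of the sum of squared deviations of X
        "sxx_centered": sum((xi - x_bar) ** 2 for xi in x),
        "sxx_x_raw": sum(xi * (xi - x_bar) for xi in x),
    }


# Hypothetical sample data, not taken from the video
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

sums = centered_sums(x, y)
same_sxy = (
    math.isclose(sums["sxy_centered"], sums["sxy_x_raw"])
    and math.isclose(sums["sxy_centered"], sums["sxy_y_raw"])
)
same_sxx = math.isclose(sums["sxx_centered"], sums["sxx_x_raw"])
print(same_sxy, same_sxx)
```

The comparison uses `math.isclose` rather than `==` because the forms are algebraically equal but can differ by floating-point rounding.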

There are, of course, a number of ways of expressing the formula for the slope estimator, and I make no attempt to list them all in this video.
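As one illustration of that point, the sketch below computes the slope with two common algebraically equivalent expressions: the deviation form and a raw-sums form. The function names and the data are hypothetical, and these two forms are only examples, not necessarily the ones shown in the video.

```python
import math


def slope_deviation_form(x, y):
    """Slope as sum((x - x_bar)(y - y_bar)) / sum((x - x_bar)^2)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return sxy / sxx


def slope_raw_sums_form(x, y):
    """Slope as (n*sum(xy) - sum(x)sum(y)) / (n*sum(x^2) - sum(x)^2)."""
    n = len(x)
    sum_x = sum(x)
    sum_y = sum(y)
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    sum_xx = sum(xi * xi for xi in x)
    return (n * sum_xy - sum_x * sum_y) / (n * sum_xx - sum_x ** 2)


def intercept(x, y, slope):
    """Intercept as y_bar - slope * x_bar."""
    n = len(x)
    return sum(y) / n - slope * sum(x) / n


# Hypothetical data lying exactly on the line y = 1 + 2x
x = [1, 2, 3, 4]
y = [3, 5, 7, 9]

b1_dev = slope_deviation_form(x, y)
b1_raw = slope_raw_sums_form(x, y)
b0 = intercept(x, y, b1_dev)
print(b1_dev, b1_raw, b0)
```

Because the points lie exactly on a line, both forms should recover that line's slope and intercept. On real data the raw-sums form can lose precision when the values are large relative to their spread, which is one reason the deviation form is often preferred in code.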


Deriving the least squares estimators of the slope and intercept (simple linear regression) (12:13)

Ordinary Least Squares Estimators - derivation in matrix form - part 1 (7:30)

Deriving Least Squares Estimators - part 1 (5:02)

Least Squares Estimate (Derivation) | Simple Linear Regression (13:00)

Least Squares Estimators - in summary (4:52)

Deriving the OLS Estimators in Simple Linear Regression Model - Part 1 (16:23)

The Least Squares Formula: A Derivation (10:31)

Find the Value of OLS estimators Linear Regression Model | Mathematical Economics | Ecoholics (8:56)

Level 2 - Quantitative Methods & Derivatives (3:00:47)

ECO375F - 1.0 - Derivation of the OLS Estimator (32:03)

Least Square Estimators - Explaining and deriving (20:38)

Deriving the mean and variance of the least squares slope estimator in simple linear regression (10:54)

Multiple Linear Regression Least Squares Estimator (6:39)

Ordinary Least Squares Estimators - derivation in matrix form - part 2 (7:48)

Simple Linear Regression Derivation of OLS Estimators (25:13)

Derivation of OLS coefficients (13:35)

Deriving Least Squares Estimators - part 2 (6:07)

Linear Regression Using Least Squares Method - Line of Best Fit Equation (15:05)

Deriving the least squares regression estimators (14:39)

Derivation of Recursive Least Squares Method from Scratch - Introduction to Kalman Filter (34:20)

Deriving Least Squares Estimators - part 3 (4:16)

Deriving the OLS. Ordinary Least Squares. #economics #econometrics #economist (6:17)

Deriving Least Squares Estimators - part 4 (3:16)

Least Square Estimators - Variance of Estimators, b0 and b1, Proof (30:11)