Linear Least Squares to Solve Nonlinear Problems

Ever wondered how Excel comes up with those neat trendlines? Here's the theory so you can model your data however you like! #SoME1
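As a rough illustration of the idea in the title, here is a minimal sketch of fitting an exponential trendline with ordinary linear least squares. It is not taken from the video: the model y = a * exp(b * x), the synthetic data, and the variable names are all assumptions chosen for the example. Taking logarithms makes the model linear in the unknowns ln(a) and b, so a standard linear solver applies.

```python
import numpy as np

# Synthetic, noise-free data from y = 2 * exp(0.5 * x) (illustrative assumption).
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 2.0 * np.exp(0.5 * x)

# ln(y) = ln(a) + b * x is linear in the unknowns (ln(a), b).
# Design matrix: a column of ones for the intercept, then x.
A = np.column_stack([np.ones_like(x), x])

# Solve the linear least squares problem min ||A c - ln(y)||^2.
coeffs, *_ = np.linalg.lstsq(A, np.log(y), rcond=None)

# Undo the log transform to recover the original parameters.
a = np.exp(coeffs[0])
b = coeffs[1]
print(a, b)
```

Note that this minimizes the squared error in ln(y), not in y itself, so with noisy data the result can differ from a true nonlinear least squares fit.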

Least squares approximation | Linear Algebra | Khan Academy

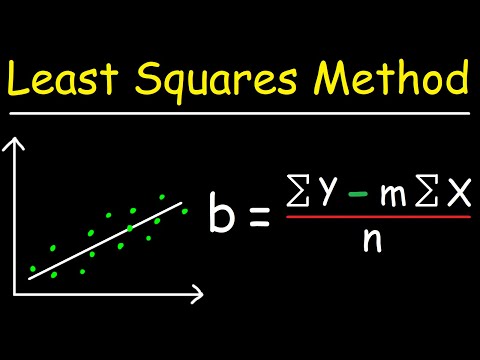

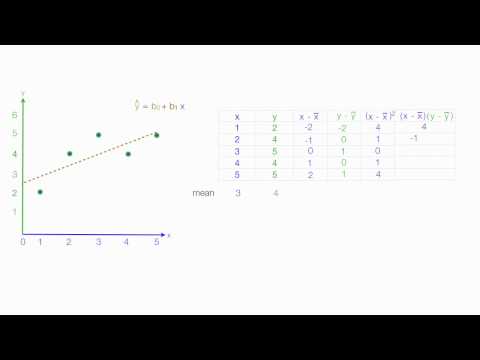

Linear Regression Using Least Squares Method - Line of Best Fit Equation

Linear Algebra 6.5.1 Least Squares Problems

Linear Least Squares to Solve Nonlinear Problems

Least squares using matrices | Lecture 26 | Matrix Algebra for Engineers

Determine a Least Squares Solutions to Ax=b

Least Squares Approximation

9. Four Ways to Solve Least Squares Problems

Linear Systems of Equations, Least Squares Regression, Pseudoinverse

The Least Squares Formula: A Derivation

Linear Least Squares

Least squares examples | Alternate coordinate systems (bases) | Linear Algebra | Khan Academy

Least Squares Approximation

Deriving the least squares estimators of the slope and intercept (simple linear regression)

Linear Regression Least Squares Method

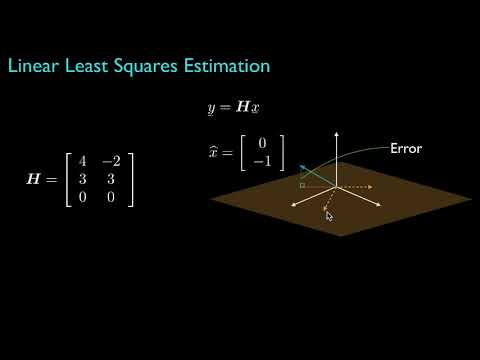

Linear Least Squares Estimation

Calculating the Least Squares Regression Line by Hand

How to calculate linear regression using least square method

Harvard AM205 video 1.6 - Linear least squares

Least Squares Approximations

11.3.6 Solving the Linear Least-squares Problem Via QR Factorization

Least Square Solution of a Given System of Linear equations

Example 1: Finding a least squares solution of an inconsistent system

Nonlinear Least Squares
