Why do COMPUTERS get SLOWER with age?

Dave explores why computers slow down the longer we use them.

Why do Computers get Slower with Age? Top 5 fixes YOU can do!

Why do computers slow down? (And how to fix it)

Do Computers Get Slower Over Time?

Why Do Computers Get Slow Over Time?

Fix Your Slow Computer - 5 Easy Steps - Windows 10 (2023)

Why Do Computers Get Slower? - ThioJoeTech

Why Does My PC Get Slower Over Time

Why Slower Computers Were Faster

How to make a slow computer fast again... for FREE!

Why do Computers Slow Down?

Why Windows is Slow?

Why do computers slow down with age? Fix it! Making My PC Like New FREE!

Why Do Computers Slow Down Over Time? TOP 5 Fixes!

How to Fix Your Slow Computer - 4 Easy Steps - Windows (2023)

How To Fix Windows 10 Lagging/Slow Problem [Quick Fix]

My System is Slow, Now What?

Why Your Computer is So Slow

Truth of Modernizing Your Desktop | Does it make your PC slower?

How to Fix Startup Issues and Slow Boot Time | PC Maintenance

Why Windows PC gets Slow Over Time and How to Fix it?

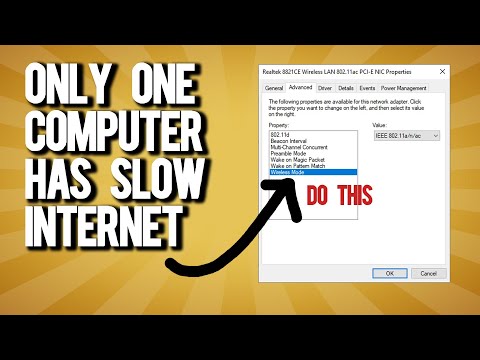

When only one computer has slow internet

Does Too Many Files On Your Desktop Slow Down Your PC
