What is Least Squares?

A quick introduction to Least Squares, a method for fitting a model, curve, or function to a set of data.

TRANSCRIPT

Hello, and welcome to Introduction to Optimization. This video provides a basic answer to the question, what is Least Squares?

Least squares is a technique for fitting an equation, line, curve, function, or model to a set of data. This simple technique has applications in many fields, from medicine to finance to chemistry to astronomy. Least squares helps us represent the real world using mathematical models.

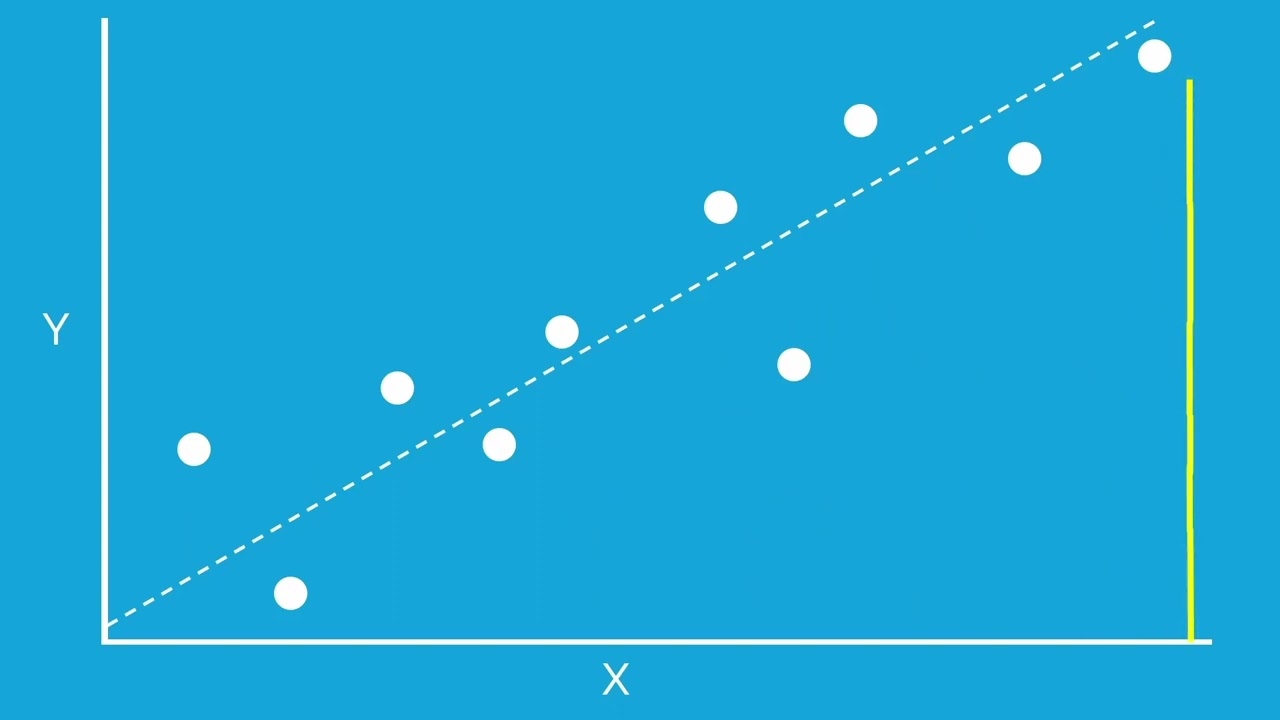

Let’s take a closer look at how this works. Imagine that you have a set of data points in x and y, and you want to find the line that best fits the data. This is also called regression. For a given x, you have the y value from your data and the y value predicted by the line. The difference between these values is called the error, or the residual.

A residual is calculated for each data point, as the difference between that point and the line. Residuals can be positive or negative, so each one is usually squared to keep errors of opposite sign from canceling out. Next the squared residuals are summed to give the total error between the data and the line, the sum of squared errors.
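As a rough illustration of the residual and sum-of-squared-errors calculation just described, here is a minimal Python sketch. The function name `sum_of_squared_errors` and the four data points are made up for this example and do not come from the video.

```python
def sum_of_squared_errors(xs, ys, slope, intercept):
    """Return the sum of squared residuals between the data and a line."""
    # Residual: the y value from the data minus the y value predicted by the line.
    residuals = [y - (slope * x + intercept) for x, y in zip(xs, ys)]
    # Square each residual so positive and negative errors cannot cancel, then sum.
    return sum(r * r for r in residuals)


# Hypothetical data lying exactly on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

sse_exact = sum_of_squared_errors(xs, ys, slope=2.0, intercept=1.0)
sse_off = sum_of_squared_errors(xs, ys, slope=1.0, intercept=1.0)
print(sse_exact, sse_off)
```

The line that passes through every point gives a total error of zero, while the candidate line with the wrong slope gives a larger value, which is what an optimizer would use to tell the two apart.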

One way to think of least squares is as an optimization problem. The sum of squared errors is the objective function, and the optimizer is trying to find the slope and intercept of the line that minimize it.
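Written out, the optimization problem might look like the following. The symbols are assumptions introduced here for illustration: $m$ for the slope, $b$ for the intercept, and $(x_i, y_i)$ for the $n$ data points.

$$
\min_{m,\,b} \; S(m, b) = \sum_{i=1}^{n} \bigl( y_i - (m x_i + b) \bigr)^2
$$

Each term in the sum is one squared residual, and $S$ is the sum of squared errors that the optimizer tries to make as small as possible.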

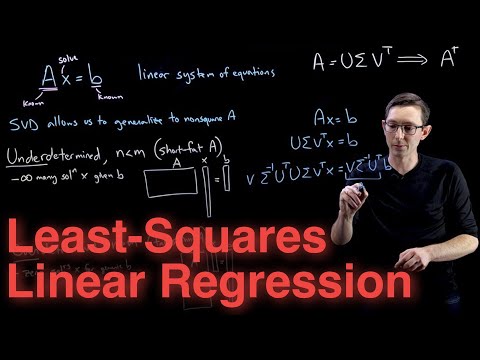

Here we’re trying to fit a line, which makes this a linear least-squares problem. Linear least squares has a closed-form solution, and can be solved by solving a system of linear equations. It’s worth noting that other equations, such as parabolas and higher-order polynomials, can also be fit using linear least squares, as long as the model is linear in the coefficients being optimized.
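To make the point about parabolas concrete, here is a small sketch that fits both a line and a parabola with the same linear least-squares routine. It assumes NumPy is available; the helper name `fit_polynomial` and the noise-free sample data are invented for this example.

```python
import numpy as np


def fit_polynomial(x, y, degree):
    """Fit y ~ c0 + c1*x + ... + c_degree*x**degree by linear least squares."""
    # Each column of the design matrix is a power of x: 1, x, x^2, ...
    # The model is nonlinear in x but linear in the coefficients c0, c1, ...
    design = np.vander(x, degree + 1, increasing=True)
    # lstsq solves the linear least-squares problem min ||design @ c - y||^2.
    coeffs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coeffs


# Hypothetical noise-free data generated from known coefficients.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
line_coeffs = fit_polynomial(x, 1.0 + 2.0 * x, degree=1)
parabola_coeffs = fit_polynomial(x, 1.0 + 2.0 * x + 3.0 * x**2, degree=2)
print(line_coeffs, parabola_coeffs)
```

The only thing that changes between the line and the parabola is the number of columns in the design matrix, which is why both count as linear least-squares problems.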

Least squares can also be used for nonlinear curves and functions to fit more complex data. In this case the problem can be solved using a dedicated nonlinear least squares solver, or a general purpose optimization solver.
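As one possible example of a dedicated nonlinear least-squares solver, the sketch below uses `scipy.optimize.least_squares` to fit an exponential curve. The video does not name a particular solver, so the choice of SciPy, the model `a * exp(b * x)`, the synthetic data, and the starting guess are all assumptions made for illustration.

```python
import numpy as np
from scipy.optimize import least_squares


def residuals(params, x, y):
    """Residuals between the data and the model a * exp(b * x)."""
    a, b = params
    # The model is nonlinear in b, so there is no closed-form solution.
    return y - a * np.exp(b * x)


# Hypothetical noise-free data generated with a = 2.0 and b = 0.5.
x = np.linspace(0.0, 2.0, 20)
y = 2.0 * np.exp(0.5 * x)

# The solver iterates from the starting guess x0, reducing the sum of squared residuals.
result = least_squares(residuals, x0=[1.0, 1.0], args=(x, y))
a_fit, b_fit = result.x
print(a_fit, b_fit)
```

Unlike the linear case, the solver needs a starting guess and works iteratively, so a poor guess can lead to a slow or failed fit on harder problems.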

To summarize, least squares is a method often used for fitting a model to data. Residuals express the error between the current model fit and the data. The objective of least squares is to minimize the sum of the squared residuals across all the data points to find the best fit for a given model.
