Least Squares Approximations

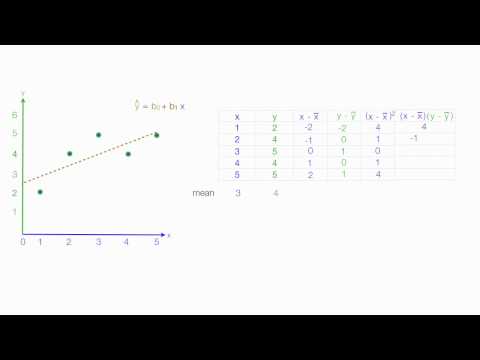

Description: We can't always solve Ax = b exactly, but we can use orthogonal projections to find the vector x that makes Ax as close as possible to b.
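As a rough illustration of the idea in the description, here is a minimal sketch in plain Python. It assumes the standard normal-equations approach, solving AᵀA x = Aᵀb, which is one common way to compute the orthogonal projection of b onto the column space of A. The matrix `A`, the vector `b`, and all helper names (`transpose`, `matvec`, `matmul`, `solve_2x2`, `least_squares_two_columns`) are made up for this example and are not taken from the video.

```python
from fractions import Fraction


def transpose(M):
    """Return the transpose of a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def matvec(M, v):
    """Multiply matrix M by vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in M]


def matmul(M, N):
    """Multiply matrix M by matrix N."""
    Nt = transpose(N)
    return [[sum(a * b for a, b in zip(row, col)) for col in Nt] for row in M]


def solve_2x2(M, r):
    """Solve a 2x2 system M x = r by Cramer's rule."""
    (a, b), (c, d) = M
    det = a * d - b * c
    if det == 0:
        raise ValueError("columns of A are linearly dependent")
    return [(r[0] * d - b * r[1]) / det, (a * r[1] - c * r[0]) / det]


def least_squares_two_columns(A, b):
    """Least-squares solution of A x = b for an A with exactly two columns,
    found by solving the normal equations (A^T A) x = A^T b."""
    At = transpose(A)
    return solve_2x2(matmul(At, A), matvec(At, b))


# Hypothetical data: three equations, two unknowns, no exact solution.
# Fractions keep the arithmetic exact.
A = [[Fraction(1), Fraction(0)],
     [Fraction(1), Fraction(1)],
     [Fraction(1), Fraction(2)]]
b = [Fraction(6), Fraction(0), Fraction(0)]

x_hat = least_squares_two_columns(A, b)        # best-fit x
p = matvec(A, x_hat)                           # projection of b onto Col(A)
e = [bi - pi for bi, pi in zip(b, p)]          # residual b - A x_hat
check = matvec(transpose(A), e)                # should be the zero vector
```

The final `check` is the point of the construction: the residual b − A x̂ is orthogonal to every column of A, which is what makes A x̂ the closest point to b in the column space. For larger or ill-conditioned problems a library routine based on QR or SVD would usually be preferred over forming AᵀA directly.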

This video is part of a Linear Algebra course taught at the University of Cincinnati.

BECOME A MEMBER:

MATH BOOKS & MERCH I LOVE:
