Linear Regression - Least Squares Criterion Part 1

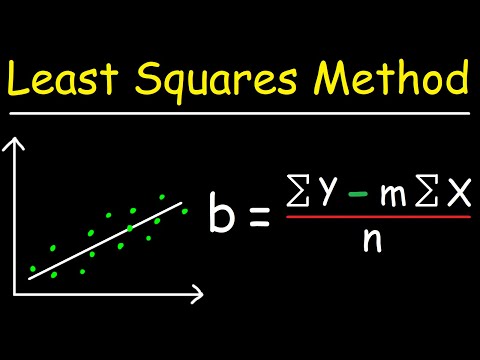

Linear Regression Using Least Squares Method - Line of Best Fit Equation

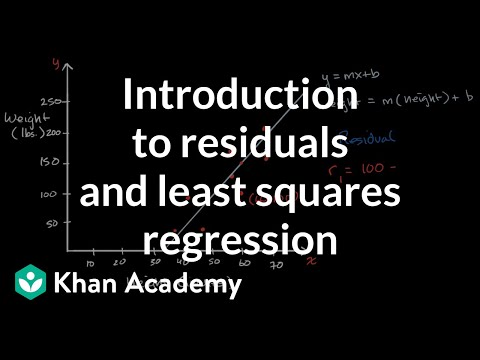

Introduction to residuals and least squares regression

What is Least Squares?

How to calculate linear regression using least square method

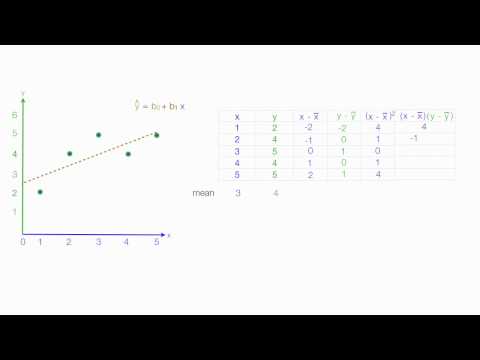

Simple Linear Regression: The Least Squares Regression Line

Deriving the least squares estimators of the slope and intercept (simple linear regression)

Linear Regression Least Squares Method

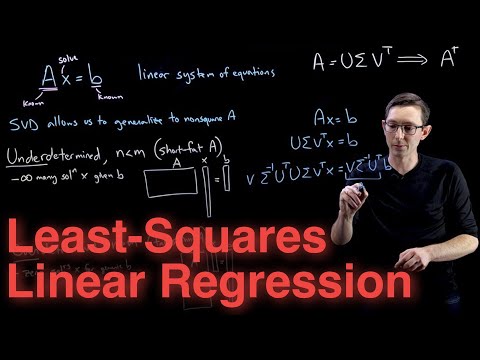

Linear Systems of Equations, Least Squares Regression, Pseudoinverse

Day-5 (25 August 2024): Regression Analysis Basics

Introduction to residuals and least-squares regression | AP Statistics | Khan Academy

Ordinary Least Squares regression or Linear regression

Least squares using matrices | Lecture 26 | Matrix Algebra for Engineers

Calculating the Least Squares Regression Line by Hand

Linear Regression in 2 minutes

Linear Regression - Least Squares Criterion Part 1

Linear Regression, Clearly Explained!!!

Linear Regression using Least Squares Method in Machine Learning Data mining and ML by Mahesh Huddar

Linear Least Squares to Solve Nonlinear Problems

Least squares approximation | Linear Algebra | Khan Academy

What is Simple Linear Regression in Statistics | Linear Regression Using Least Squares Method

Pearson's Correlation Coefficient (3 of 3: Least squares regression line)

Learn Statistical Regression in 40 mins! My best video ever. Legit.

Linear Regression, Clearly Explained!!!

Regression: Crash Course Statistics #32

Комментарии

0:15:05

0:15:05

0:07:39

0:07:39

0:02:43

0:02:43

0:08:29

0:08:29

0:07:24

0:07:24

0:12:13

0:12:13

0:16:56

0:16:56

0:11:53

0:11:53

1:52:26

1:52:26

0:04:49

0:04:49

0:02:10

0:02:10

0:10:15

0:10:15

0:03:48

0:03:48

0:02:34

0:02:34

0:06:56

0:06:56

0:27:27

0:27:27

0:06:29

0:06:29

0:12:27

0:12:27

0:15:32

0:15:32

0:13:04

0:13:04

0:09:58

0:09:58

0:40:25

0:40:25

0:27:27

0:27:27

0:12:40

0:12:40