filmov

tv

The Fastest Multiplication Algorithm

Показать описание

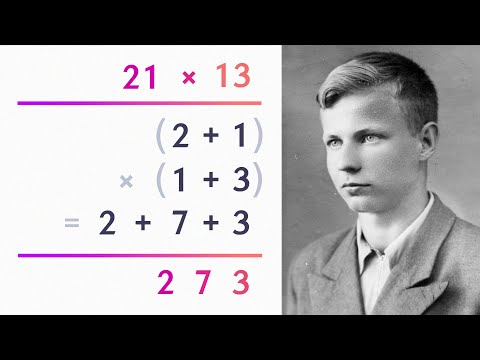

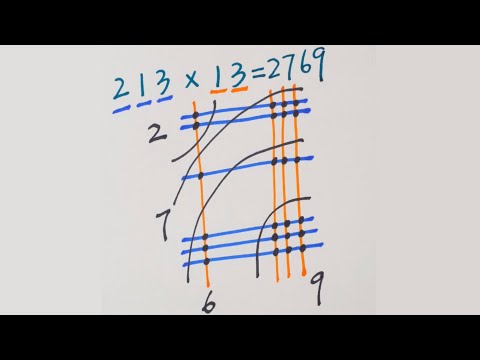

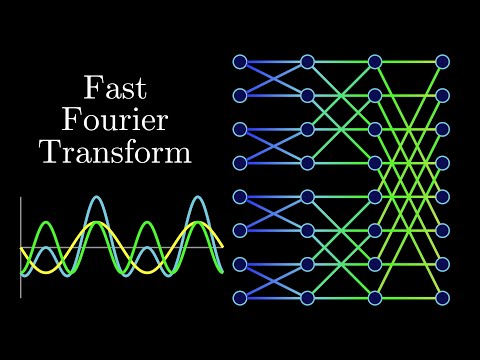

How can you multiply two enormous numbers? We all learn an approach in school which has n^2 total multiplications by single digit numbers. The first improvement was the Karatsuba Algorithm which uses a divide and conquer approach and a bit of clever algebra to reduce 4 multiplication to 3 (in 2x2 case) and more generally to about n^1.58 multiplications asymptotically. For a computer with a 32-bit hardware multiplier, one can iteratively apply the approach until the numbers are within the size of the multiplier. Toom-Cook is a generalization of the Karatsuba Algorithm and is useful in various cryptography applications, but a real change happened with Schonhage-Strassen which use discrete fast fourier transforms to get to O(n log n log log n) complexity, used for applications like the Great Internet Mersenne Prime search. The theoretical best result is Harvey and Hoeven who achieved O(n log n), albeit this becomes more efficient for only impractically large numbers.

0:00 Review of normal multiplication

1:42 The Karatsuba Algorithm for 2x2

3:32 Example of Karatsuba

4:34 Karatsuba for larger numbers

5:45 Complexity of Karatsuba for size 2^k

7:09 Computer architecture and hardware multipliers

8:26 Newer algorithms (Schonhage-Strassen, and Harvey and Hoeven)

Check out my MATH MERCH line in collaboration with Beautiful Equations

COURSE PLAYLISTS:

OTHER PLAYLISTS:

► Learning Math Series

►Cool Math Series:

BECOME A MEMBER:

MATH BOOKS I LOVE (affilliate link):

SOCIALS:

Комментарии

0:13:58

0:13:58

0:00:58

0:00:58

0:11:28

0:11:28

0:13:00

0:13:00

0:13:31

0:13:31

0:00:34

0:00:34

0:34:10

0:34:10

0:00:28

0:00:28

0:00:34

0:00:34

0:18:48

0:18:48

0:17:35

0:17:35

0:05:49

0:05:49

0:01:30

0:01:30

0:00:28

0:00:28

0:20:08

0:20:08

0:14:01

0:14:01

0:11:00

0:11:00

0:05:12

0:05:12

0:03:42

0:03:42

0:06:39

0:06:39

0:00:47

0:00:47

0:17:36

0:17:36

0:00:31

0:00:31

0:28:23

0:28:23