
The fastest matrix multiplication algorithm


0:00 Multiplying Matrices the standard way

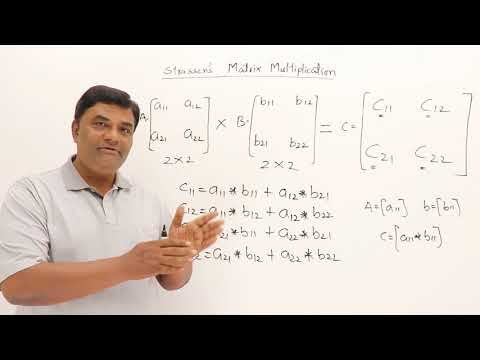

2:05 The Strassen Method for 2x2 Matrices

3:52 Large matrices via induction

7:25 The history and the future
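The first two chapters compare the standard method with Strassen's 2x2 trick. Below is a minimal Python sketch of both, assuming square matrices stored as lists of lists. The function names are hypothetical. The seven products follow the common textbook form of Strassen's method, and the video may label or order them differently. The standard method needs 8 scalar multiplications for a 2x2 product (n^3 in general), while Strassen's version needs only 7, at the cost of extra additions and subtractions.

```python
def naive_matmul(A, B):
    """Standard row-by-column product of two n x n matrices.

    Returns (C, number of scalar multiplications performed).
    """
    n = len(A)
    C = [[0] * n for _ in range(n)]
    mults = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
                mults += 1  # one multiplication per (i, j, k): n^3 in total
    return C, mults


def strassen_2x2(A, B):
    """Strassen's method for 2x2 matrices: 7 multiplications instead of 8."""
    (a, b), (c, d) = A
    (e, f), (g, h) = B
    # The seven products (textbook labelling, assumed here).
    m1 = (a + d) * (e + h)
    m2 = (c + d) * e
    m3 = a * (f - h)
    m4 = d * (g - e)
    m5 = (a + b) * h
    m6 = (c - a) * (e + f)
    m7 = (b - d) * (g + h)
    # Each entry of the product is recovered using only additions/subtractions.
    return [[m1 + m4 - m5 + m7, m3 + m5],
            [m2 + m4, m1 - m2 + m3 + m6]]


if __name__ == "__main__":
    A = [[1, 2], [3, 4]]
    B = [[5, 6], [7, 8]]
    C, count = naive_matmul(A, B)
    print(C, count)            # [[19, 22], [43, 50]] 8
    print(strassen_2x2(A, B))  # [[19, 22], [43, 50]]
```

Expanding, for example, m1 + m4 - m5 + m7 cancels every cross term except a*e + b*g, which is exactly the top-left entry of the standard product.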

In this video we explore how to multiply very large matrices as computationally efficiently as possible. The standard algorithm from linear algebra uses n^3 scalar multiplications to multiply two n×n matrices. But can we do better? The Strassen algorithm improves this to about n^2.8 (precisely n^(log_2 7) ≈ n^2.807): we first see it in the 2x2 case and then prove by induction that it works in general. Over the last 50 years a series of further improvements has inched the exponent closer to the theoretical limit of n^2, though the best known bounds are still far from it.
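The induction step can be sketched by applying the same seven-product formulas to blocks instead of numbers. Assuming n is a power of two, each n×n matrix splits into four (n/2)×(n/2) blocks, and the product needs 7 recursive block multiplications. If T(n) counts scalar multiplications, then T(n) = 7·T(n/2) with T(1) = 1, so T(2^k) = 7^k = n^(log_2 7) ≈ n^2.807. The Python below is a minimal illustration with hypothetical helper names, not an optimized implementation. It counts only scalar multiplications (the block additions add O(n^2) work per level), does not pad sizes that are not powers of two, and does not switch to the standard method for small blocks as practical implementations usually do.

```python
def add(X, Y):
    """Entrywise sum of two equal-sized square matrices."""
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def sub(X, Y):
    """Entrywise difference X - Y."""
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def split(M):
    """Split a 2h x 2h matrix into its four h x h blocks (11, 12, 21, 22)."""
    h = len(M) // 2
    top, bottom = M[:h], M[h:]
    return ([r[:h] for r in top], [r[h:] for r in top],
            [r[:h] for r in bottom], [r[h:] for r in bottom])


def strassen(A, B):
    """Recursive Strassen product; returns (C, scalar multiplications used).

    Assumes A and B are n x n with n a power of two.
    """
    n = len(A)
    if n == 1:  # base case: a single scalar multiplication
        return [[A[0][0] * B[0][0]]], 1
    A11, A12, A21, A22 = split(A)
    B11, B12, B21, B22 = split(B)
    # The same seven products as the 2x2 case, now on (n/2) x (n/2) blocks.
    pairs = [
        (add(A11, A22), add(B11, B22)),
        (add(A21, A22), B11),
        (A11, sub(B12, B22)),
        (A22, sub(B21, B11)),
        (add(A11, A12), B22),
        (sub(A21, A11), add(B11, B12)),
        (sub(A12, A22), add(B21, B22)),
    ]
    products, mults = [], 0
    for X, Y in pairs:
        M, m = strassen(X, Y)
        products.append(M)
        mults += m
    M1, M2, M3, M4, M5, M6, M7 = products
    C11 = add(sub(add(M1, M4), M5), M7)
    C12 = add(M3, M5)
    C21 = add(M2, M4)
    C22 = add(add(sub(M1, M2), M3), M6)
    # Reassemble the four blocks into one n x n matrix.
    C = ([r1 + r2 for r1, r2 in zip(C11, C12)]
         + [r1 + r2 for r1, r2 in zip(C21, C22)])
    return C, mults


if __name__ == "__main__":
    for k in range(4):
        n = 2 ** k
        I = [[int(i == j) for j in range(n)] for i in range(n)]
        _, count = strassen(I, I)
        print(n, count, n ** 3)  # 7^k multiplications vs n^3, e.g. "8 343 512"
```

Because each level multiplies the count by 7 rather than 8, the gap with the standard method widens as n grows: 343 versus 512 multiplications already at n = 8.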



How AI Discovered a Faster Matrix Multiplication Algorithm

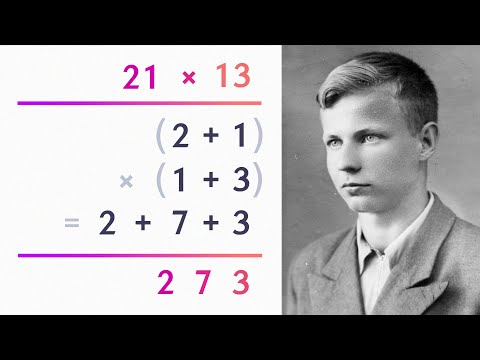

Karatsuba's Multiplication Trick Summarised in 1 Minute

Josh Alman. Algorithms and Barriers for Fast Matrix Multiplication

A Very Fast Overview of Fast Matrix Multiplication

Remember How to Multiply Matrices with Rabbits #SoME3

Adding Nested Loops Makes this Algorithm 120x FASTER?

Virginia Vassilevska-Williams - A refined laser method and slightly faster matrix multiplication

Dr. Anita Faul | A Sparse, Flexible Framework with Confidence Measure

5.4.2 Animation of High Performance Matrix-Matrix Multiplication

2.9 Strassens Matrix Multiplication

The Fastest Multiplication Algorithm

Fast matrix multiply

Strassen algorithm for matrix multiplication (divide and conquer) - Inside code

AlphaTensor by DEEPMIND finds new Algorithms for Matrix Multiplication

Discovering faster matrix multiplication algorithms with reinforcement learning (Hussein Fawzi)

Matej Balog - AlphaTensor: Discover faster matrix multiplication algorithms with RL - IPAM at UCLA

Strassen’s Matrix Multiplication | Divide and Conquer | GeeksforGeeks

Session 10-2 Beyond Fast Matrix Multiplication Algorithms and Hardness

This is a game changer! (AlphaTensor by DeepMind explained)

This Algorithm is 1,606,240% FASTER

Square & Multiply Algorithm - Computerphile

Matrix Multiplication

Fast Multiplication Trick | Interesting math tricks #maths #shorts
