OpenAI CLIP Explained | Multi-modal ML

OpenAI's CLIP explained simply and intuitively with visuals and code. Language models (LMs) cannot rely on language alone. That is the idea behind the "Experience Grounds Language" paper, which proposes a framework to measure LMs' current and future progress. A key idea is that, beyond a certain threshold, LMs need other forms of data, such as visual input.

The next step beyond well-known language models such as BERT, GPT-3, and T5 is "World Scope 3". In World Scope 3, we move from large text-only datasets to large multi-modal datasets. That is, datasets containing information from multiple forms of media, like *both* images and text.

The world, both digital and real, is multi-modal. We perceive the world as an orchestra of language, imagery, video, smell, touch, and more. This chaotic ensemble produces an inner state, our "model" of the outside world.

AI must move in the same direction. Even specialist models that focus on language or vision must, at some point, have input from the other modalities. How can a model fully understand the concept of the word "person" without *seeing* a person?

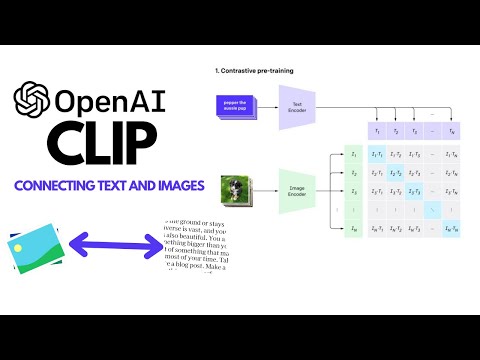

OpenAI's Contrastive Language-Image Pre-training (CLIP) is a World Scope 3 model. It can comprehend concepts in both text and images and even connect concepts between the two modalities. In this video, we will learn about multi-modality, how CLIP works, and how to use CLIP for different use cases like encoding, classification, and object detection.
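To make the classification use case a little more concrete, here is a minimal sketch of how zero-shot classification with CLIP-style embeddings is commonly done: compare one image embedding against the embedding of each candidate caption using cosine similarity, then turn the scaled similarities into probabilities with a softmax. The vectors below are hand-written toy values standing in for real CLIP encoder outputs, and the function names and the fixed `scale` of 100 (a stand-in for CLIP's learned temperature) are assumptions made for illustration, not code from the video.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_similarity(a, b):
    """Cosine similarity between two vectors of equal length."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_classify(image_emb, text_embs, labels, scale=100.0):
    """Pick the label whose text embedding is most similar to the image embedding.

    `scale` is an assumed fixed temperature; a real model learns this value.
    """
    sims = [cosine_similarity(image_emb, t) for t in text_embs]
    probs = softmax([scale * s for s in sims])
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs


# Toy 3-dimensional embeddings (hypothetical values, not real CLIP outputs).
labels = ["a photo of a dog", "a photo of a cat", "a photo of a car"]
text_embs = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
image_emb = [0.8, 0.2, 0.1]

prediction, probs = zero_shot_classify(image_emb, text_embs, labels)
print(prediction)
```

In practice the toy vectors would be replaced by the outputs of CLIP's image and text encoders; the comparison step stays the same because both encoders map into a shared embedding space.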

🌲 Pinecone article:

🤖 70% Discount on the NLP With Transformers in Python course:

🎉 Subscribe for Article and Video Updates!

👾 Discord:
