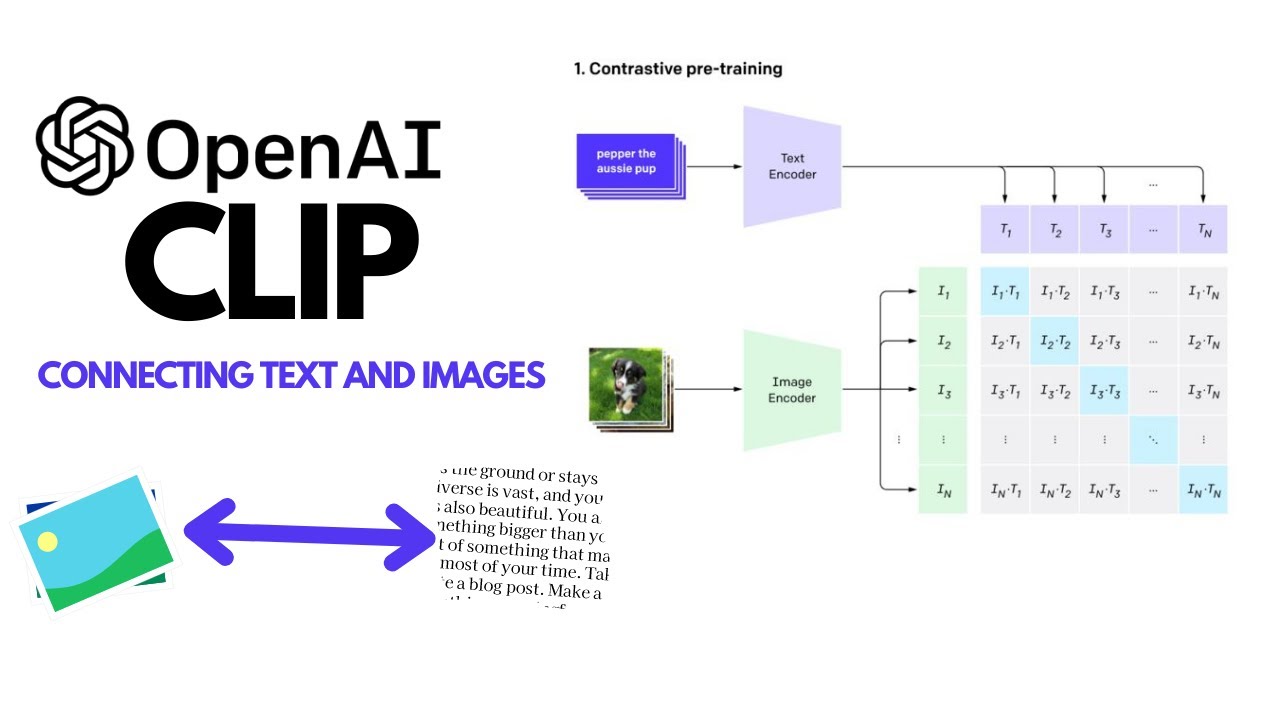

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning

CLIP (Contrastive Language-Image Pre-Training) is a neural network trained on a variety of (image, text) pairs. Given an image, it can be instructed in natural language to predict the most relevant text snippet without being directly optimized for that task, similar to the zero-shot capabilities of GPT-2 and GPT-3. OpenAI reports that CLIP matches the performance of the original ResNet-50 on ImageNet "zero-shot", without using any of the original 1.28M labeled examples, overcoming several major challenges in computer vision.
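To make "predict the most relevant text snippet, given an image" concrete, here is a minimal sketch of the zero-shot scoring step in plain Python. It assumes the image and each candidate caption have already been mapped to embedding vectors; in a real setup those would come from CLIP's image and text encoders, whereas the three-dimensional vectors below are hand-written toy values. The function names and the `logit_scale` value of 100 are illustrative assumptions, not part of any library API.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def cosine_similarity(a, b):
    """Cosine similarity between two vectors of equal length."""
    a_n, b_n = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a_n, b_n))


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_predict(image_embedding, text_embeddings, labels, logit_scale=100.0):
    """Pick the caption whose embedding is closest to the image embedding.

    logit_scale sharpens the softmax; 100.0 is an assumed value for this sketch.
    """
    sims = [cosine_similarity(image_embedding, t) for t in text_embeddings]
    probs = softmax([logit_scale * s for s in sims])
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs


# Toy data (hypothetical): one caption per candidate class, one image.
labels = ["a photo of a dog", "a photo of a cat", "a photo of a car"]
text_embeddings = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
image_embedding = [0.8, 0.2, 0.1]

best_label, probs = zero_shot_predict(image_embedding, text_embeddings, labels)
print(best_label)
```

No classifier is trained on the candidate labels here: changing the task only means changing the list of captions, which is what makes the approach "zero-shot".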

#datascience #nlp #deeplearning #ecommerce

OpenAI CLIP: ConnectingText and Images (Paper Explained) (0:48:07)

OpenAI’s CLIP explained! | Examples, links to code and pretrained model (0:14:48)

OpenAI CLIP Explained | Multi-modal ML (0:33:33)

Fast intro to multi-modal ML with OpenAI's CLIP (0:22:54)

CLIP: Connecting Text and Images (0:09:25)

Fast Zero Shot Object Detection with OpenAI CLIP (0:29:32)

How ChatGPT Works Technically | ChatGPT Architecture (0:07:54)

How to Implement CLIP AI: A Step-by-Step Tutorial for Beginners (0:12:36)

HUAWEI eKit App MiniFTTO How to Implement Deployment Without Internet (0:02:24)

OpenAI Embeddings and Vector Databases Crash Course (0:18:41)

What is ChatGPT? OpenAI's Chat GPT Explained (0:09:17)

Testing Stable Diffusion inpainting on video footage #shorts (0:00:16)

OpenAI's DALL-E 2 is unbelievable! (0:00:56)

How AI Image Generators Work (Stable Diffusion / Dall-E) - Computerphile (0:17:50)

Vector databases are so hot right now. WTF are they? (0:03:22)

How to Build AI-Powered Apps with OpenAI & ChatGPT (That Don’t S*ck) (0:14:18)

Domain-Specific Multi-Modal Machine Learning with CLIP (0:59:27)

DALL·E 2 Explained - model architecture, results and comparison (0:11:04)

Zero-Shot Image Classification with Open AI's CLIP Model - GPT-3 for Images (0:20:44)

Don't use ChatGPT until you've watched this video! (0:01:00)

PyTorch in 100 Seconds (0:02:43)

Open AI Glide: Text-to image Generation Explained with code| Python|+91-9872993883 for query| (0:07:56)

Introduction to Generative AI (0:22:08)

How AIs, like ChatGPT, Learn (0:08:55)