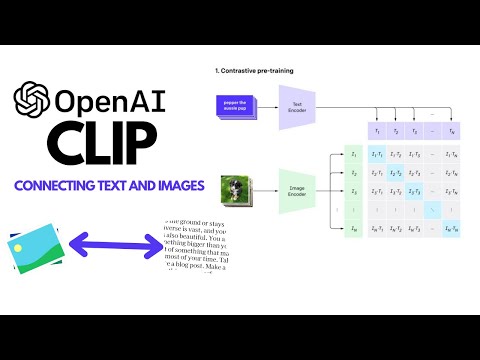

CLIP: Connecting Text and Images

This video explains how OpenAI's CLIP recasts image classification as a text-image similarity matching task, using contrastive training and zero-shot pattern-exploiting training. Thanks for watching!
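The idea in the description can be illustrated with a minimal sketch: images and text prompts are mapped to vectors, matching pairs are pulled together by a symmetric contrastive loss, and classification becomes "pick the label prompt whose embedding is most similar to the image embedding". The sketch below is not OpenAI's implementation. The toy vectors stand in for the outputs of real image and text encoders, and the function names and the `temperature=0.07` default are assumptions chosen for illustration.

```python
import math


def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def similarity_matrix(image_embs, text_embs):
    """Cosine similarity between every image embedding and every text embedding."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(i, t)) for t in txts] for i in imgs]


def cross_entropy_diagonal(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_z = m + math.log(sum(math.exp(x - m) for x in row))
        total += log_z - row[i]
    return total / len(logits)


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss over a batch of matched (image, text) pairs.

    Pair i is assumed to be (image_embs[i], text_embs[i]); every other
    combination in the batch acts as a negative. The temperature value is
    an illustrative assumption.
    """
    sims = similarity_matrix(image_embs, text_embs)
    logits = [[s / temperature for s in row] for row in sims]
    transposed = [list(col) for col in zip(*logits)]
    image_to_text = cross_entropy_diagonal(logits)
    text_to_image = cross_entropy_diagonal(transposed)
    return 0.5 * (image_to_text + text_to_image)


def zero_shot_classify(image_emb, label_embs):
    """Return the index of the label prompt most similar to the image."""
    sims = similarity_matrix([image_emb], label_embs)[0]
    return max(range(len(sims)), key=sims.__getitem__)


# Toy stand-ins for encoder outputs (hypothetical values).
label_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
predicted = zero_shot_classify([0.1, 0.9, 0.2], label_embs)
```

In a real setup the label embeddings would come from encoding prompts such as "a photo of a dog" with the text encoder, so new classes can be added by writing new prompts rather than retraining a classifier head.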

Paper Links:

Thanks for watching! Please Subscribe!

CLIP: Connecting text and images

OpenAI CLIP: Connecting Text and Images (Paper Explained)

CLIP: Connecting Text and Images

OpenAI CLIP: Connecting Text and Images

CLIP: Connecting Text and Images

Ariel Ekgren: CLIP: Connecting text and images

CLIP: Connecting Text and Images (Swedish NLP Webinars)

Multilingual CLIP - Connecting images and texts in 100 languages

OpenAI’s CLIP explained! | Examples, links to code and pretrained model

OpenAI's CLIP Explained and Implementation | Contrastive Learning | Self-Supervised Learning

Fast intro to multi-modal ML with OpenAI's CLIP

Introducing CLIP OpenAI's AI Model Connecting Images and Text

Searching Across Images and Text: Intro to OpenAI’s CLIP

CLIP - Paper explanation (training and inference)

OpenAI's CLIP for Zero Shot Image Classification

CLIP, T-SNE, and UMAP - Master Image Embeddings & Vector Analysis

CLIP model

Connecting Images to Text: CLIP and DALL-E | NLP Journal Club

What CLIP models are (Contrastive Language-Image Pre-training)

How to Implement CLIP AI: A Step-by-Step Tutorial for Beginners

Contrastive Language-Image Pre-training (CLIP)

DigitalFUTURES Tutorial: Creative AI Text to Image with VQGAN+CLIP

CLIP: OpenAI's amazing new zero-shot image classifier

How does CLIP Text-to-image generation work?
