Deep Learning Lecture 3: Maximum likelihood and information

Course taught in 2015 at the University of Oxford by Nando de Freitas with great help from Brendan Shillingford.

Lesson 3: Practical Deep Learning for Coders 2022

Lecture 3: Linear Classifiers

Lecture 3 'k-nearest neighbors' -Cornell CS4780 SP17

But what is a neural network? | Chapter 1, Deep learning

Lecture 3: Dummy Q-learning (table)

Lecture 3: Recurrent Neural Networks (Full Stack Deep Learning - Spring 2021)

MIT Deep Learning Genomics - Lecture 3 - Convolutional Neural Networks CNNs (Spring 2020)

Lecture 13 | Backpropagation II | CMPS 497 Deep Learning | Fall 2024

Deep Learning Lecture 8: Modular back-propagation, logistic regression and Torch

Deep Learning Lecture 11: Max-margin learning, transfer and memory networks

10-601 Machine Learning Spring 2015 - Lecture 3

Lesson 3: Practical Deep Learning for Coders

Machine Learning for Physicists (Lecture 3): Training networks, Keras, Image recognition

DeepMind x UCL | Deep Learning Lectures | 3/12 | Convolutional Neural Networks for Image Recognition

Deep RL Bootcamp Lecture 3: Deep Q-Networks

RL Course by David Silver - Lecture 3: Planning by Dynamic Programming

Lecture 3 | Learning, Empirical Risk Minimization, and Optimization

Salsa Night in IIT Bombay #shorts #salsa #dance #iit #iitbombay #motivation #trending #viral #jee

Biggest mistake I do while recording| behind the scene | #jennyslectures

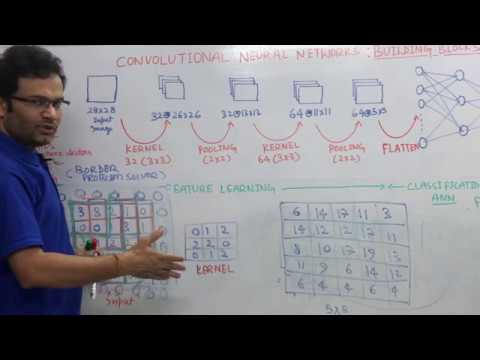

Convolutional Neural Networks | CNN | Kernel | Stride | Padding | Pooling | Flatten | Formula

Support Vector Machine (SVM) in 2 minutes

MIT 6.S191 (2023): Convolutional Neural Networks

IIT Bombay Lecture Hall | IIT Bombay Motivation | #shorts #ytshorts #iit