MIT Deep Learning Genomics - Lecture 3 - Convolutional Neural Networks CNNs (Spring 2020)

MIT 6.874 Lecture 3. Spring 2020

Slides credit: 6.S191 (Alexander Amini, Ava Soleimany), Dana Erlich, ParamVirSingh, David Gifford, Manolis Kellis

1. Scene understanding and object recognition for machines (and humans)

– Scene/object recognition challenge. Illusions reveal primitives, conflicting info

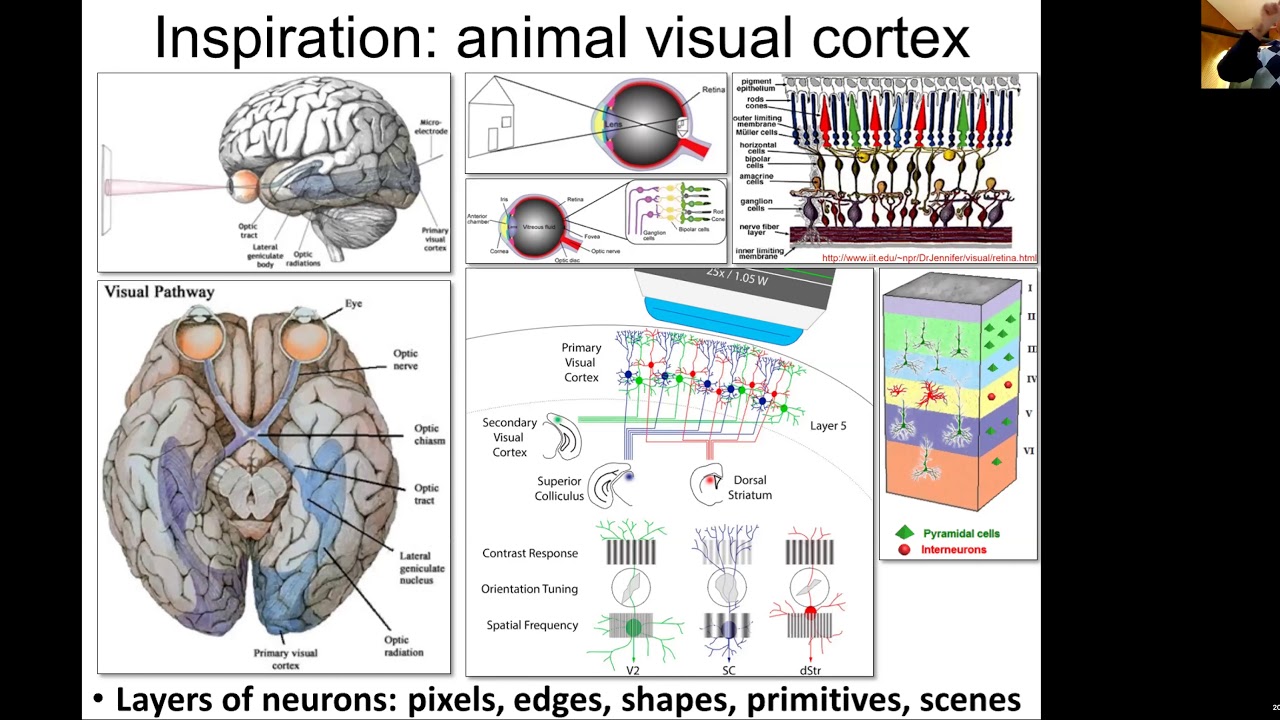

– Human neurons/circuits. Visual cortex layers==abstraction. General cognition

2. Classical machine vision foundations: features, scenes, filters, convolution

– Spatial structure primitives: edge detectors & other filters, feature recognition

– Convolution: basics, padding, stride, object recognition, architectures

3. CNN foundations: LeNet, de novo feature learning, parameter sharing

– Key ideas: learn features, hierarchy, re-use parameters, back-prop filter learning

– CNN formalization: representations = (Conv + ReLU + Pool) × N layers + fully connected

4. Modern CNN architectures: millions of parameters, dozens of layers

– Feature invariance is hard: apply perturbations, learn for each variation

– ImageNet progression of best performers

– AlexNet: first top-performing CNN, 60M parameters (up from 60k in LeNet-5), ReLU

– VGGNet: simpler but deeper (from 8 layers in AlexNet to 19), 140M parameters, ensembles

– GoogLeNet: new primitive = inception module, 5M parameters, no FC layers, efficiency

– ResNet: 152 layers; counters vanishing gradients by fitting residuals to enable learning

5. Countless applications: General architecture, enormous power

– Semantic segmentation, facial detection/recognition, self-driving, image colorization, optimizing pictures/scenes, up-scaling, medicine, biology, genomics
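
The convolution basics from part 2 (padding, stride) and the Conv + ReLU + Pool stage from part 3 can be illustrated with a minimal sketch. This is not code from the lecture: the function names (`conv_output_size`, `conv2d`, `relu`, `max_pool2d`), the toy 4×4 image, and the hand-written vertical-edge filter are all assumptions chosen for illustration. In a real CNN the filter weights would be learned by back-propagation rather than fixed by hand.

```python
def conv_output_size(n, k, padding=0, stride=1):
    """Spatial output size of a convolution: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - k) // stride + 1


def conv2d(image, kernel, padding=0, stride=1):
    """Single-channel 2D convolution (cross-correlation, no kernel flip)."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    # Zero-pad the image on all four sides.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = float(image[i][j])
    out_h = conv_output_size(h, kh, padding, stride)
    out_w = conv_output_size(w, kw, padding, stride)
    out = [[0.0] * out_w for _ in range(out_h)]
    # Slide the same kernel over every position: this is parameter sharing.
    for i in range(out_h):
        for j in range(out_w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    acc += padded[i * stride + a][j * stride + b] * kernel[a][b]
            out[i][j] = acc
    return out


def relu(fmap):
    """Element-wise max(0, x)."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling; incomplete trailing windows are dropped."""
    out_h, out_w = len(fmap) // size, len(fmap[0]) // size
    return [
        [
            max(fmap[i * size + a][j * size + b]
                for a in range(size) for b in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical toy input: dark left half, bright right half.
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-written vertical-edge detector (an assumption, not a learned filter).
vertical_edge = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

# One Conv + ReLU + Pool stage; padding=1 keeps the 4x4 size before pooling.
features = max_pool2d(relu(conv2d(image, vertical_edge, padding=1)))
print(features)
```

With padding 1 and stride 1 a 3×3 filter preserves the input size, while the 2×2 pooling halves it. The same size formula gives `conv_output_size(32, 5) == 28` for a 5×5 filter on a 32×32 input with no padding.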
