filmov

tv

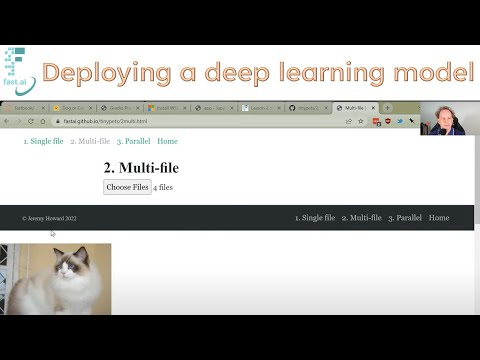

Lesson 3: Practical Deep Learning for Coders 2022

00:00 Introduction and survey

02:25 How to do a fastai lesson

04:28 How to not self-study

05:28 Highest voted student work

07:56 Pet breed detector

08:52 Paperspace

10:16 JupyterLab

12:11 Make a better pet detector

13:47 Comparison of all (image) models

15:49 Try out new models

19:22 Get the categories of a model

20:40 What’s in the model

21:23 What does model architecture look like

22:15 Parameters of a model

23:36 Create a general quadratic function

27:20 Fit a function manually, by hand and eye

30:58 Loss functions

33:39 Automate the search for parameters that give a better loss

42:45 The mathematical functions

43:18 ReLU: Rectified linear function

45:17 Infinitely complex function

49:21 A chart of all image models compared

52:11 Do I have enough data?

54:56 How to interpret the units of gradients?

56:23 Learning rate

1:00:14 Matrix multiplication

1:04:22 Build a regression model in a spreadsheet

1:16:18 Build a neural net by adding two regression models

1:18:31 Matrix multiplication makes training faster

1:21:01 Watch out! It’s chapter 4

1:22:31 Create dummy variables for 3 classes

1:23:34 A taste of NLP

1:27:29 fastai NLP library vs Hugging Face library

1:28:54 Homework to prepare you for the next lesson
