
Extract Table Info From PDF & Summarise It Using Llama3 via Ollama | LangChain


In this second video in the Unstructured playlist, I explain how to extract table data from a PDF and use it to summarise the table's content with the Llama3 model via Ollama. As a bonus, I also demonstrate how to convert the data into a pandas DataFrame for further exploration if needed. Enjoy 😎
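The workflow described above can be sketched in plain Python. This is a minimal, hedged sketch, not the code from the video: the video uses LangChain, whereas this example swaps in a direct call to Ollama's REST endpoint (`POST /api/generate` on the default `http://localhost:11434`) using only the standard library. It assumes the table has already been extracted as HTML, which is what unstructured's `partition_pdf(..., infer_table_structure=True)` stores in a Table element's `metadata.text_as_html`. The helper names (`html_table_to_rows`, `build_summary_prompt`, `build_ollama_payload`, `summarise`), the prompt wording, and the `llama3` model tag are illustrative assumptions.

```python
"""Sketch: HTML table -> rows -> summarisation request for Llama3 via Ollama.

Assumptions (not taken from the video): the table arrives as an HTML string
(e.g. unstructured's `metadata.text_as_html`), Ollama serves its REST API at
the default http://localhost:11434, and the model tag is "llama3".
All function names below are hypothetical.
"""
import json
import urllib.request
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []    # finished rows, each a list of cell strings
        self._row = None  # row currently being built
        self._cell = None  # text fragments of the cell currently being built

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Collapse internal whitespace/newlines left over from PDF extraction.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def html_table_to_rows(html):
    """Parse an HTML table string into a list of rows (lists of strings)."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def build_summary_prompt(rows):
    """Render rows as pipe-separated lines inside a summarisation prompt."""
    table_text = "\n".join(" | ".join(row) for row in rows)
    return (
        "Summarise the key information in the following table.\n\n"
        f"{table_text}\n\nSummary:"
    )


def build_ollama_payload(prompt, model="llama3"):
    """JSON body for Ollama's /api/generate; stream=False requests one reply."""
    return {"model": model, "prompt": prompt, "stream": False}


def summarise(prompt, model="llama3",
              url="http://localhost:11434/api/generate"):
    """Send the prompt to a running Ollama server and return its text reply."""
    body = json.dumps(build_ollama_payload(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        # Non-streaming responses carry the generated text under "response".
        return json.loads(resp.read())["response"]
```

For example, `rows = html_table_to_rows(table.metadata.text_as_html)` followed by `summarise(build_summary_prompt(rows))` would return the model's summary, provided an Ollama server is running and the model has been pulled (e.g. `ollama pull llama3`). For the pandas bonus, `pandas.DataFrame(rows[1:], columns=rows[0])` builds a DataFrame, assuming the first row holds the column headers.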

80% of enterprise data exists in difficult-to-use formats like HTML, PDF, CSV, PNG, PPTX, and more. Unstructured effortlessly extracts and transforms complex data for use with every major vector database and LLM framework.

Link ⛓️💥

Code 👨🏻💻

------------------------------------------------------------------------------------------

------------------------------------------------------------------------------------------

🤝 Connect with me:

#unstructureddata #llama3 #langchain #ollama #unstructuredio #llm #datasciencebasics

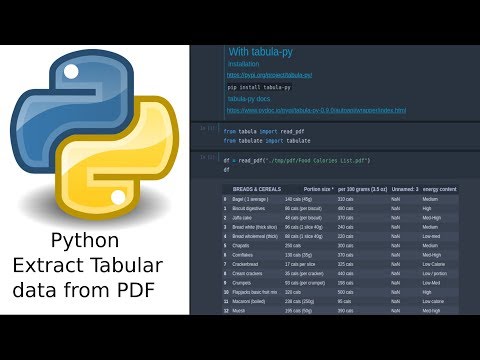

How to Extract Tables from PDF using Python

Extract Tables from PDFs

Extract text, links, images, tables from Pdf with Python | PyMuPDF, PyPdf, PdfPlumber tutorial

Extract tabular data from PDF with Python - Tabula, Camelot, PyPDF2

Best Way to Extract Tables from PDF with LLMs

Extract All the Tables From PDF in 3 minutes With Python

How to Extract Table Data from PDF to Excel

Extract Specific Data from PDF to Excel

Extract Data from PDFs to Airtable using OpenAI

Extract Data from PDFs Easily & Quickly (table form/image/text/pages)

Extract PDF Content with Python

Microsoft AI Builder Tutorial - Extract Data from PDF

PDF Extractor SDK - C# - Extract Table Structure

Extract Table Info From PDF & Summarise It Using Llama3 via Ollama | LangChain

How to 'automatically' extract data from a messy PDF table to Excel

How to Extract Tables/Charts from a PDF file in any Computer ?

Use List of PDF Table Info to extract PDF table and column name into EXCEL - Power Automate Desktop

How to Extract Data From Unlimited PDF Forms To An Excel Table IN ONE CLICK

Extract and Visualize Data from PDF Tables with PDFplumber in Python

Properly Convert PDF to Excel

How to Extract Tables from PDF

How to Convert PDF to Excel

extract information from pdf table using langchain & gpt-4o |Tutorial:93

How to Extract Data from PDF with Power Automate
