Nonlinear Least Squares

Finding the curve of best fit using the nonlinear least squares method.

Covers a general model function, with the derivation carried out through a Taylor series expansion.
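As a rough illustration of the Taylor series idea, here is a minimal sketch of one common approach, the Gauss-Newton iteration: the model is replaced by its first-order Taylor expansion around the current parameters, the resulting linear least squares problem is solved for a correction, and the process repeats. The video's own derivation may differ in detail. The model y = a * exp(b * x), the function name `gauss_newton`, and the sample data and starting guess are all assumptions chosen for the example, not taken from the video.

```python
import math


def gauss_newton(xs, ys, a, b, iterations=20):
    """Fit the hypothetical model y = a * exp(b * x) by Gauss-Newton."""
    for _ in range(iterations):
        # Build the 2x2 normal equations (J^T J) d = J^T r, where J holds
        # the partial derivatives of the model (the first-order Taylor terms).
        s_aa = s_ab = s_bb = g_a = g_b = 0.0
        for x, y in zip(xs, ys):
            e = math.exp(b * x)
            r = y - a * e      # residual at the current parameters
            ja = e             # d(model)/da
            jb = a * x * e     # d(model)/db
            s_aa += ja * ja
            s_ab += ja * jb
            s_bb += jb * jb
            g_a += ja * r
            g_b += jb * r
        det = s_aa * s_bb - s_ab * s_ab
        if det == 0.0:
            break              # singular system: stop rather than divide by zero
        # Solve the 2x2 system with Cramer's rule.
        da = (g_a * s_bb - g_b * s_ab) / det
        db = (s_aa * g_b - s_ab * g_a) / det
        a += da
        b += db
        if abs(da) + abs(db) < 1e-12:
            break              # the correction is negligible
    return a, b


# Noise-free sample data generated from a = 2, b = 0.5 (illustrative values).
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [2.0 * math.exp(0.5 * x) for x in xs]
a_fit, b_fit = gauss_newton(xs, ys, a=1.5, b=0.4)
print(a_fit, b_fit)
```

Because each step relies on a local linearization, the iteration depends on a reasonable starting guess. With noisy data or a poor start, a damped variant such as Levenberg-Marquardt is often preferred.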

Nonlinear Least Squares

Linear Least Squares to Solve Nonlinear Problems

Harvard AM205 video 1.8 - Nonlinear least squares

Gauss Newton - Non Linear Least Squares

What is Least Squares?

CMPSC/Math 451. March 27, 2015. Nonlinear Least Squares Method. Wen Shen

Nonlinear regression - the basics

Learning to Solve Nonlinear Least Squares for Dense Tracking and Mapping

Least Squares - 5 Minutes with Cyrill

Nonlinear Least Squares Estimation

Python Nonlinear Least square|Non linear regression models| Parameter Estimation

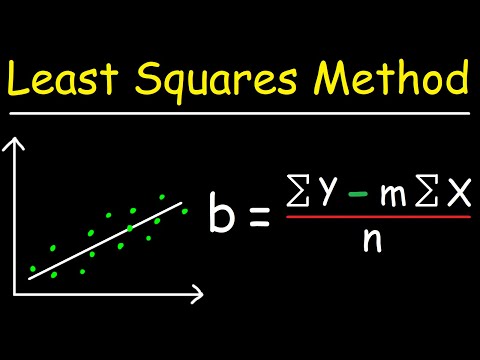

Linear Regression Using Least Squares Method - Line of Best Fit Equation

Nonlinear Least Square(Part 1)

FNC 4.7: Nonlinear least squares

Nonlinear Least Squares (MATLAB lsqnonlin)

Linear vs. non-linear least squares - Arrhenius

Gradient descent method for nonlinear least squares

Nonlinear Least Squares

An Optimization Package for Constrained Nonlinear Least-Squares | Pierre Borie | JuliaCon 2023

9. Four Ways to Solve Least Squares Problems

Nonlinear least squares geometry

MSE101 L7.2 Non-linear least squares minimisation

ch8 4. Nonlinear Least Squares Method. Wen Shen

Newton's method for nonlinear least squares
