Attention for Neural Networks, Clearly Explained!!!

Attention is one of the most important concepts behind Transformers and Large Language Models, like ChatGPT. However, it's not that complicated. In this StatQuest, we add Attention to a basic Sequence-to-Sequence (Seq2Seq or Encoder-Decoder) model and walk through how it works and is calculated, one step at a time. BAM!!!

If you'd like to support StatQuest, please consider...

...or...

...buying my book, a study guide, a t-shirt or hoodie, or a song from the StatQuest store...

...or just donating to StatQuest!

Lastly, if you want to keep up with me as I research and create new StatQuests, follow me on twitter:

0:00 Awesome song and introduction

3:14 The Main Idea of Attention

5:34 A worked out example of Attention

10:18 The Dot Product Similarity

11:52 Using similarity scores to calculate Attention values

13:27 Using Attention values to predict an output word

14:22 Summary of Attention
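The chapters above name three steps: a dot-product similarity, turning the similarity scores into Attention values, and using those values to predict an output word. The first two can be sketched in plain Python as below. This is only a minimal illustration, not the video's own code: the function names, the two-dimensional vectors, and the use of a softmax to turn scores into weights are assumptions made for the example, and the final prediction step is left out.

```python
import math

def dot(u, v):
    """Dot-product similarity between two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def softmax(scores):
    """Convert raw similarity scores into weights that sum to 1."""
    m = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(decoder_state, encoder_states):
    """Return (weights, attention_values) for a single decoder step.

    decoder_state: the decoder's current output vector (hypothetical name).
    encoder_states: one output vector per input word (hypothetical name).
    """
    # 1. Similarity between the decoder state and each encoder state.
    scores = [dot(decoder_state, h) for h in encoder_states]
    # 2. Softmax (assumed here) turns the scores into fractions of each input word.
    weights = softmax(scores)
    # 3. Attention values: the weighted sum of the encoder states.
    dim = len(encoder_states[0])
    values = [
        sum(w * h[i] for w, h in zip(weights, encoder_states))
        for i in range(dim)
    ]
    return weights, values

# Made-up numbers, chosen only to make the arithmetic easy to follow.
encoder_states = [[1.0, 0.0], [0.0, 1.0]]
decoder_state = [2.0, 0.0]
weights, values = attention(decoder_state, encoder_states)
print(weights, values)
```

With these made-up vectors the first input word scores higher (2 versus 0), so it receives the larger weight and dominates the Attention values.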

#StatQuest #neuralnetwork #attention

Attention for Neural Networks, Clearly Explained!!!

Attention mechanism: Overview

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Attention Mechanism In a nutshell

Sequence-to-Sequence (seq2seq) Encoder-Decoder Neural Networks, Clearly Explained!!!

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

Transformers, explained: Understand the model behind GPT, BERT, and T5

MIT 6.S191 (2023): Recurrent Neural Networks, Transformers, and Attention

[CS316] Lecture 3: From Machine Learning to Deep Learning

Self Attention in Transformer Neural Networks (with Code!)

Why masked Self Attention in the Decoder but not the Encoder in Transformer Neural Network?

Illustrated Guide to Transformers Neural Network: A step by step explanation

Cross Attention vs Self Attention

Tensors for Neural Networks, Clearly Explained!!!

Why Transformer over Recurrent Neural Networks

The Neuroscience of “Attention”

What is Attention in Neural Networks

Why Sine & Cosine for Transformer Neural Networks

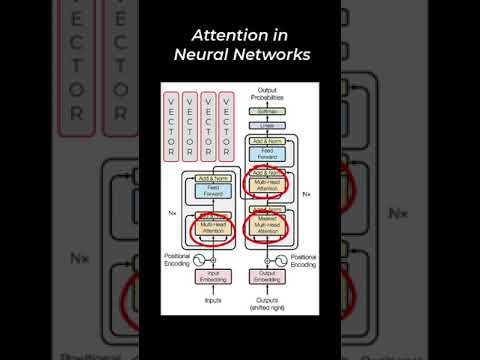

Attention in Neural Networks

What is Mutli-Head Attention in Transformer Neural Networks?

Neural Attention - This simple example will change how you think about it

Visual Guide to Transformer Neural Networks - (Episode 2) Multi-Head & Self-Attention

Query, Key and Value vectors in Transformer Neural Networks

Self-attention in deep learning (transformers) - Part 1

Комментарии

0:15:51

0:15:51

0:05:34

0:05:34

0:36:15

0:36:15

0:04:30

0:04:30

0:16:50

0:16:50

0:58:04

0:58:04

0:09:11

0:09:11

1:02:50

1:02:50

![[CS316] Lecture 3:](https://i.ytimg.com/vi/V1nBUGqazZI/hqdefault.jpg) 0:39:41

0:39:41

0:15:02

0:15:02

0:00:45

0:00:45

0:15:01

0:15:01

0:00:45

0:00:45

0:09:40

0:09:40

0:01:00

0:01:00

0:17:48

0:17:48

0:00:40

0:00:40

0:00:51

0:00:51

0:03:56

0:03:56

0:00:33

0:00:33

0:18:06

0:18:06

0:15:25

0:15:25

0:01:00

0:01:00

0:04:44

0:04:44