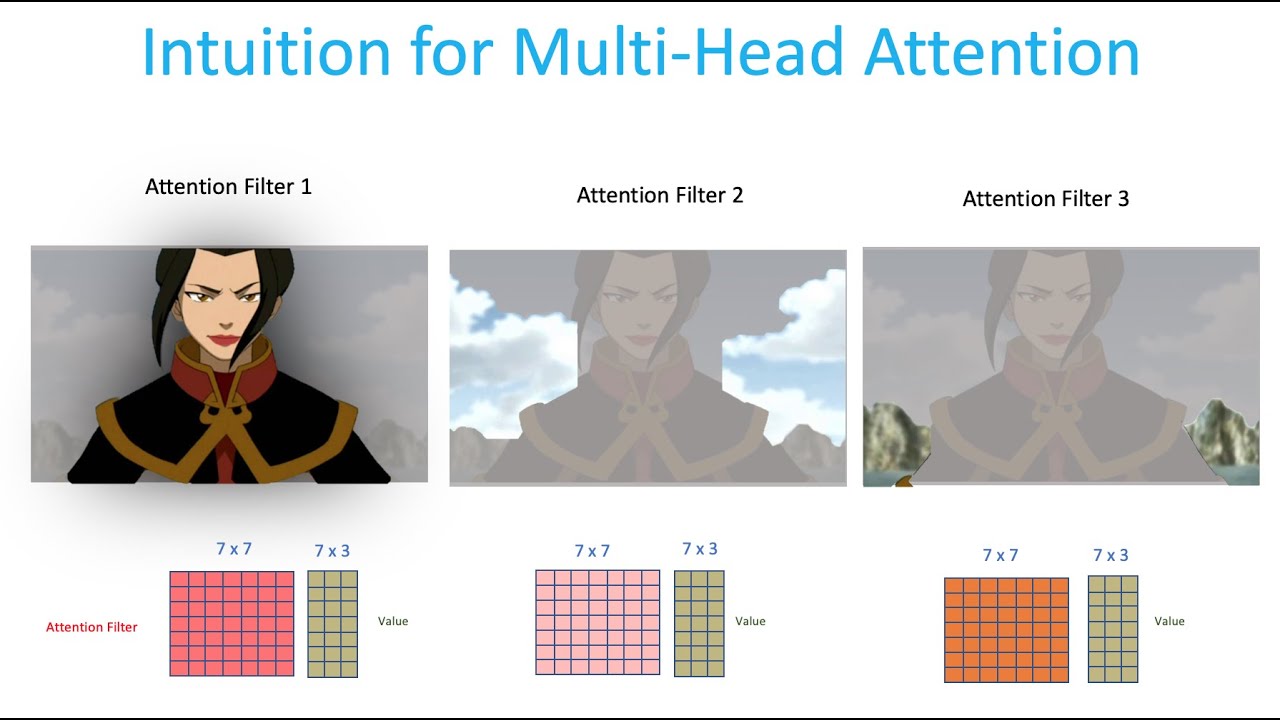

Visual Guide to Transformer Neural Networks - (Episode 2) Multi-Head & Self-Attention

Visual Guide to Transformer Neural Networks (Series) - Step by Step Intuitive Explanation

Episode 0 - [OPTIONAL] The Neuroscience of "Attention"

Episode 1 - Position Embeddings

Episode 2 - Multi-Head & Self-Attention

Episode 3 - Decoder’s Masked Attention

This video series explains both the math and the intuition behind Transformer neural networks, which were first introduced in the paper “Attention is All You Need”.

--------------------------------------------------------------

References and Other Great Resources

--------------------------------------------------------------

Attention is All You Need

Jay Alammar – The Illustrated Transformer

The A.I. Hacker – Illustrated Guide to Transformers Neural Network: A step by step explanation

Amirhossein Kazemnejad Blog Post – Transformer Architecture: The Positional Encoding

Yannic Kilcher YouTube Video – Attention is All You Need

Visual Guide to Transformer Neural Networks - (Episode 1) Position Embeddings

Visual Guide to Transformer Neural Networks - (Episode 2) Multi-Head & Self-Attention

Illustrated Guide to Transformers Neural Network: A step by step explanation

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Visual Guide to Transformer Neural Networks - (Episode 3) Decoder’s Masked Attention

Vision Transformer Quick Guide - Theory and Code in (almost) 15 min

Visualize the Transformers Multi-Head Attention in Action

The complete guide to Transformer neural Networks!

Transformers, explained: Understand the model behind GPT, BERT, and T5

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

Attention mechanism: Overview

Let's build GPT: from scratch, in code, spelled out.

Live -Transformers Indepth Architecture Understanding- Attention Is All You Need

Vision Transformer Basics

A gentle visual intro to Transformer models

What are Transformers (Machine Learning Model)?

An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale (Paper Explained)

The matrix math behind transformer neural networks, one step at a time!!!

What are Transformer Neural Networks?

Vision Transformer for Image Classification

Transformer Neural Networks - EXPLAINED! (Attention is all you need)

Attention Mechanism In a nutshell

What is backpropagation really doing? | Chapter 3, Deep learning

Testing Stable Diffusion inpainting on video footage #shorts
