Self-attention in deep learning (transformers) - Part 1



Self-attention is widely used in deep learning. For example, it is one of the main building blocks of the Transformer, introduced in the paper "Attention Is All You Need", which is fast becoming the go-to deep learning architecture for many problems in both computer vision and language processing. Additionally, well-known models such as BERT, GPT, XLM, and Performer use some variation of the Transformer, which in turn is built from self-attention layers.

This video explains a simplified version of the attention mechanism in deep learning.
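To make the idea concrete, here is a minimal sketch of one common simplified form of self-attention, written with NumPy. It is an assumption about what "simplified" means here, not necessarily the exact formulation used in the video: there are no learned query, key, or value projections, the attention scores are plain dot products between input vectors, and each output is a softmax-weighted average of all inputs. The function name `simple_self_attention` and the toy input sizes are illustrative only.

```python
import numpy as np


def simple_self_attention(x):
    """Simplified self-attention with no learned parameters (illustrative assumption).

    x: array of shape (seq_len, dim), one row per input vector.
    Returns (outputs, weights) with shapes (seq_len, dim) and (seq_len, seq_len).
    """
    # Raw scores: dot product between every pair of input vectors.
    scores = x @ x.T
    # Subtract the row-wise max before exponentiating for numerical stability.
    scores = scores - scores.max(axis=-1, keepdims=True)
    # Row-wise softmax: each row becomes non-negative weights that sum to 1.
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    # Each output row is a weighted average of all input rows.
    outputs = weights @ x
    return outputs, weights


# Toy input: 4 vectors of dimension 8 (arbitrary sizes chosen for the example).
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
y, w = simple_self_attention(x)
print(y.shape)         # same shape as the input: (4, 8)
print(w.sum(axis=-1))  # every row of weights sums to approximately 1
```

The full Transformer layer adds learned projections for queries, keys, and values, a scaling factor, and multiple heads on top of this basic weighted-average pattern.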

Note: This is part 1 in the series of videos about Transformers.



Attention mechanism: Overview

Attention in transformers, visually explained | Chapter 6, Deep Learning

Transformer Neural Networks - EXPLAINED! (Attention is all you need)

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Self Attention in Transformer Neural Networks (with Code!)

Illustrated Guide to Transformers Neural Network: A step by step explanation

What are Transformers (Machine Learning Model)?

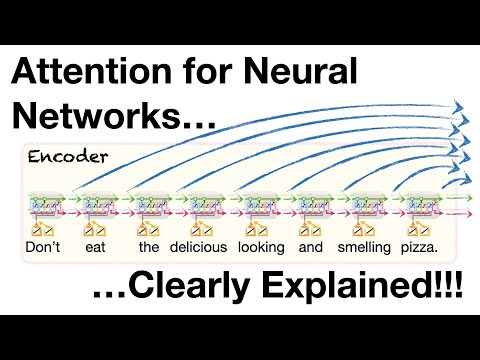

Attention for Neural Networks, Clearly Explained!!!

Stanford CS224N NLP with Deep Learning | 2023 | Lecture 8 - Self-Attention and Transformers

Transformers, explained: Understand the model behind GPT, BERT, and T5

But what is a GPT? Visual intro to transformers | Chapter 5, Deep Learning

Attention Mechanism In a nutshell

What is Self Attention in Transformer Neural Networks?

Lecture 12.1 Self-attention

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

Transformers for beginners | What are they and how do they work

MIT 6.S191: Recurrent Neural Networks, Transformers, and Attention

DEEP LEARNING: TRANSFORMERS - Introduzione

EE599 Project 12: Transformer and Self-Attention mechanism

Stanford CS224N: NLP with Deep Learning | Winter 2019 | Lecture 14 – Transformers and Self-Attention...

MIT 6.S191 (2023): Recurrent Neural Networks, Transformers, and Attention

CS480/680 Lecture 19: Attention and Transformer Networks

Pytorch Transformers from Scratch (Attention is all you need)
