Why Transformer over Recurrent Neural Networks

#transformers #machinelearning #chatgpt #gpt #deeplearning

Why Transformer over Recurrent Neural Networks

Transformers vs Recurrent Neural Networks (RNN)!

CNNs, RNNs, LSTMs, and Transformers

Recurrent Neural Networks (RNNs), Clearly Explained!!!

MIT 6.S191: Recurrent Neural Networks, Transformers, and Attention

Transformers, explained: Understand the model behind GPT, BERT, and T5

Why Transformers over LSTMs? #deeplearning #machinelearning

What are Transformers (Machine Learning Model)?

Machine Learning MCQs Part 5 | Neural Networks | Prepare for Exams! By @professorrahuljain

MIT 6.S191 (2023): Recurrent Neural Networks, Transformers, and Attention

Recurrent Neural Networks to Sentence Transformer

Illustrated Guide to Transformers Neural Network: A step by step explanation

Attention mechanism: Overview

Feedback Transformers: Addressing Some Limitations of Transformers with Feedback Memory (Explained)

Transformers | Basics of Transformers

5 concepts in transformer neural networks (Part 1)

Retentive Network: A Successor to Transformer for Large Language Models (Paper Explained)

Attention Mechanism In a nutshell

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Why Recurrent Neural Networks are cursed | LM2

Transformers | What is attention?

Transformers | how attention relates to Transformers

Why LSTM over RNNs? #deeplearning #machinelearning

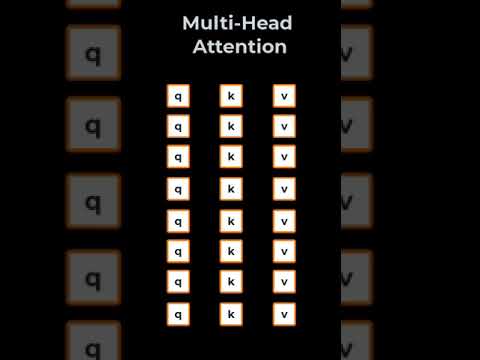

What is Mutli-Head Attention in Transformer Neural Networks?

Комментарии

0:01:00

0:01:00

0:06:28

0:06:28

0:09:01

0:09:01

0:16:37

0:16:37

1:01:31

1:01:31

0:09:11

0:09:11

0:00:34

0:00:34

0:05:50

0:05:50

0:08:29

0:08:29

1:02:50

1:02:50

0:00:53

0:00:53

0:15:01

0:15:01

0:05:34

0:05:34

0:43:51

0:43:51

0:00:18

0:00:18

0:00:58

0:00:58

0:28:26

0:28:26

0:04:30

0:04:30

0:36:15

0:36:15

0:13:17

0:13:17

0:00:43

0:00:43

0:00:18

0:00:18

0:00:41

0:00:41

0:00:33

0:00:33