Rotary Positional Embeddings (RoPE): Part 1

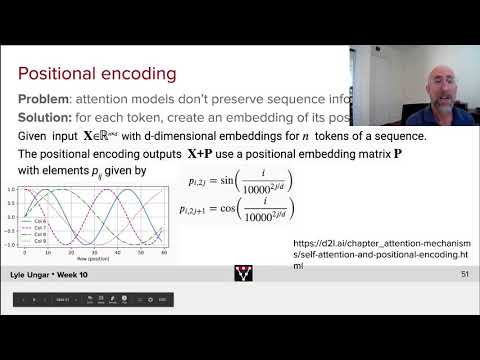

This week we discussed Rotary Positional Embeddings (RoPE). Transformers are wonderful models that lie at the heart of most of Generative AI. However, Transformers on their own have trouble learning how the positions of words in a sequence relate to one another. RoPE enables us to learn these relationships efficiently.
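For anyone who wants to poke at the idea before watching, here is a minimal sketch of the rotation step, not the code from the session. It assumes the adjacent-pair convention (dimensions 2i and 2i+1 are rotated together) and a frequency base of 10000; some libraries pair dimensions differently. The helper names `rope` and `dot` are made up for this illustration. The check at the end shows the property that makes RoPE useful: the query-key score depends only on the relative offset between the two positions.

```python
import math

def rope(vec, position, base=10000.0):
    """Rotate each adjacent pair (vec[i], vec[i+1]) by position * base**(-i/d).

    Assumption: adjacent-pair layout and base 10000; both are illustrative.
    """
    d = len(vec)
    assert d % 2 == 0, "embedding dimension must be even"
    out = []
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)  # lower pairs rotate faster
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])  # 2-D rotation of the pair
    return out

def dot(a, b):
    """Plain dot product, standing in for an attention score."""
    return sum(x * y for x, y in zip(a, b))

# Toy query and key vectors (arbitrary values, chosen only for the demo).
q = [1.0, 0.5, -0.3, 2.0]
k = [0.2, -1.0, 0.7, 0.4]

# Same offset (query two positions after the key), different absolute positions.
score_a = dot(rope(q, 3), rope(k, 1))
score_b = dot(rope(q, 10), rope(k, 8))

same_offset_same_score = math.isclose(score_a, score_b, rel_tol=1e-9, abs_tol=1e-12)
print(same_offset_same_score)
```

Because each pair is only rotated, the length of the vector is unchanged; only the angle between query and key carries the position information.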

*Links*

*Content*

00:00 Introduction

00:51 Related papers

04:21 Embeddings

12:45 Visualizing RoPE

21:32 Rotary embedding

01:00:06 RoPE properties

01:10:45 Alternative RoPE

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

😊About Us

West Coast Machine Learning is a channel dedicated to exploring the exciting world of machine learning! Our group of techies is passionate about deep learning, neural networks, computer vision, tiny ML, and other cool geeky machine learning topics. We love to dive deep into the technical details and stay up to date with the latest research developments.

Our Meetup group and YouTube channel are the perfect places to connect with like-minded individuals who share your love of machine learning. We offer a mix of research paper discussions, coding reviews, and other data science topics. So, if you're looking to keep up with the latest developments in machine learning, connect with other techies, and learn something new, be sure to subscribe to our channel and join our Meetup community today!

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

#Rope #RotaryPositionalEmbeddings #AI #ML #Transformers #Encoding #GenerativeAI #MachineLearning
