filmov.tv

How positional encoding in transformers works?

Positional embeddings in transformers EXPLAINED | Demystifying positional encodings.

Positional Encoding in Transformer Neural Networks Explained

How positional encoding in transformers works?

Transformer Positional Embeddings With A Numerical Example.

Positional Encoding and Input Embedding in Transformers - Part 3

Why do we need Positional Encoding in Transformers?

Postitional Encoding

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

L8-The Transformer: Input Embeddings& Positional Encoding

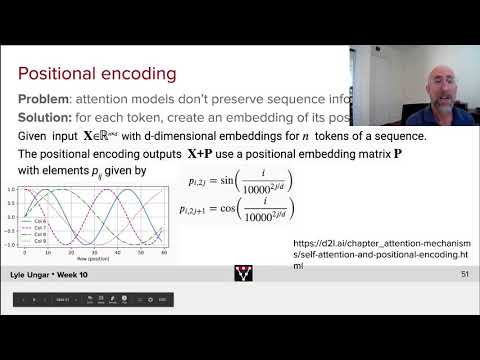

Positional encodings in transformers (NLP817 11.5)

Visual Guide to Transformer Neural Networks - (Episode 1) Position Embeddings

What is Positional Encoding used in Transformers in NLP

What is Positional Encoding in Transformer?

Positional Encoding in Transformers | Deep Learning | CampusX

Illustrated Guide to Transformers Neural Network: A step by step explanation

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

Rotary Positional Embeddings: Combining Absolute and Relative

Chatgpt Transformer Positional Embeddings in 60 seconds

What and Why Position Encoding in Transformer Neural Networks

RoPE (Rotary positional embeddings) explained: The positional workhorse of modern LLMs

Position Encoding in Transformer Neural Network

CS 182: Lecture 12: Part 2: Transformers

Transformer Architecture: Fast Attention, Rotary Positional Embeddings, and Multi-Query Attention

Coding Position Encoding in Transformer Neural Networks

Комментарии

0:09:40

0:09:40

0:11:54

0:11:54

0:05:36

0:05:36

0:06:21

0:06:21

0:09:33

0:09:33

0:04:30

0:04:30

0:02:13

0:02:13

0:36:15

0:36:15

0:32:53

0:32:53

0:19:29

0:19:29

0:12:23

0:12:23

0:03:29

0:03:29

0:00:57

0:00:57

1:13:15

1:13:15

0:15:01

0:15:01

0:58:04

0:58:04

0:11:17

0:11:17

0:01:05

0:01:05

0:00:49

0:00:49

0:14:06

0:14:06

0:00:54

0:00:54

0:25:38

0:25:38

0:01:21

0:01:21

0:00:47

0:00:47