filmov

tv

Chatgpt Transformer Positional Embeddings in 60 seconds

Показать описание

Positional Embedding in Transformer Neural Networks, like GPT-3, BERT, BARD and ChatGPT. Welcome to Positional embeddings in sixty seconds.

Positional embeddings are crucial for natural language processing and transformers, providing unique embeddings for each word in a sequence that enable effective processing, and parallelization.

While (RNN) and (LSTM) models have been popular for sequence processing in the past, they have limitations. These models struggle with longer sequences of text, as they require the sequential processing of each word in order. This means you can’t take advantage of parallel processing of inputs, so these architectures take far too long to train.

Additionally, these models struggle with capturing very long-term dependencies in text.

By incorporating positional embeddings into transformers, you can deal with very long sequences and process input words in parallel. This makes the models both better and faster to train.

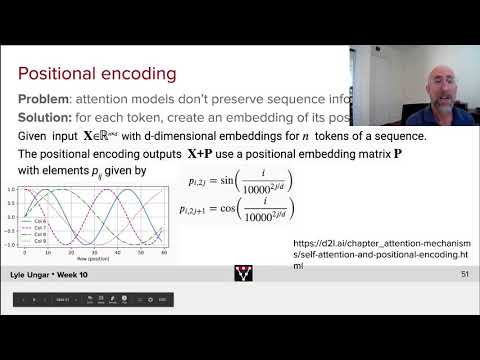

Using sinusoidal functions of different frequencies to map the position of each word to a high-dimensional vector space, positional embeddings are like the GPS of neural networks uniquely identifying where each word is located.

So there you have it, positional embeddings in sixty seconds.

=========================================================================

Link to introductory series on Neural networks:

Link to intro video on 'Backpropagation':

=========================================================================

Transformers are a type of artificial intelligence (AI) used for natural language processing (NLP) tasks, such as translation and summarisation. They were introduced in 2017 by Google researchers, who sought to address the limitations of recurrent neural networks (RNNs), which had traditionally been used for NLP tasks. RNNs had difficulty parallelizing, and tended to suffer from the vanishing/exploding gradient problem, making it difficult to train them with long input sequences.

Transformers address these limitations by using self-attention, a mechanism which allows the model to selectively choose which parts of the input to pay attention to. This makes the model much easier to parallelize and eliminates the vanishing/exploding gradient problem.

Self-attention works by weighting the importance of different parts of the input, allowing the AI to focus on the most relevant information and better handle input sequences of varying lengths. This is accomplished through three matrices: Query (Q), Key (K) and Value (V). The Query matrix can be interpreted as the word for which attention is being calculated, while the Key matrix can be interpreted as the word to which attention is paid. The eigenvalues and eigenvectors of these matrices tend to be similar, and the product of these two matrices gives the attention score.

=====================================================================

#ai #artificialintelligence #deeplearning #chatgpt #gpt3 #neuralnetworks #attention #attentionisallyouneed

Positional embeddings are crucial for natural language processing and transformers, providing unique embeddings for each word in a sequence that enable effective processing, and parallelization.

While (RNN) and (LSTM) models have been popular for sequence processing in the past, they have limitations. These models struggle with longer sequences of text, as they require the sequential processing of each word in order. This means you can’t take advantage of parallel processing of inputs, so these architectures take far too long to train.

Additionally, these models struggle with capturing very long-term dependencies in text.

By incorporating positional embeddings into transformers, you can deal with very long sequences and process input words in parallel. This makes the models both better and faster to train.

Using sinusoidal functions of different frequencies to map the position of each word to a high-dimensional vector space, positional embeddings are like the GPS of neural networks uniquely identifying where each word is located.

So there you have it, positional embeddings in sixty seconds.

=========================================================================

Link to introductory series on Neural networks:

Link to intro video on 'Backpropagation':

=========================================================================

Transformers are a type of artificial intelligence (AI) used for natural language processing (NLP) tasks, such as translation and summarisation. They were introduced in 2017 by Google researchers, who sought to address the limitations of recurrent neural networks (RNNs), which had traditionally been used for NLP tasks. RNNs had difficulty parallelizing, and tended to suffer from the vanishing/exploding gradient problem, making it difficult to train them with long input sequences.

Transformers address these limitations by using self-attention, a mechanism which allows the model to selectively choose which parts of the input to pay attention to. This makes the model much easier to parallelize and eliminates the vanishing/exploding gradient problem.

Self-attention works by weighting the importance of different parts of the input, allowing the AI to focus on the most relevant information and better handle input sequences of varying lengths. This is accomplished through three matrices: Query (Q), Key (K) and Value (V). The Query matrix can be interpreted as the word for which attention is being calculated, while the Key matrix can be interpreted as the word to which attention is paid. The eigenvalues and eigenvectors of these matrices tend to be similar, and the product of these two matrices gives the attention score.

=====================================================================

#ai #artificialintelligence #deeplearning #chatgpt #gpt3 #neuralnetworks #attention #attentionisallyouneed

Комментарии

0:09:40

0:09:40

0:01:05

0:01:05

0:15:46

0:15:46

0:36:15

0:36:15

0:11:54

0:11:54

0:00:57

0:00:57

0:06:21

0:06:21

0:00:54

0:00:54

0:09:33

0:09:33

0:02:13

0:02:13

0:12:23

0:12:23

0:36:45

0:36:45

0:27:14

0:27:14

0:00:47

0:00:47

0:00:55

0:00:55

0:19:29

0:19:29

0:14:06

0:14:06

0:00:49

0:00:49

0:26:10

0:26:10

0:01:07

0:01:07

0:15:01

0:15:01

0:00:44

0:00:44

0:04:55

0:04:55

0:04:30

0:04:30