Rotary Positional Embeddings: Combining Absolute and Relative

In this video, I explain RoPE (Rotary Positional Embeddings). Proposed in 2021, this technique has quickly made its way into prominent language models such as Google's PaLM and Meta's LLaMA. I unpack how rotary embeddings work and show how they combine the strengths of both absolute and relative positional encodings.

0:00 - Introduction

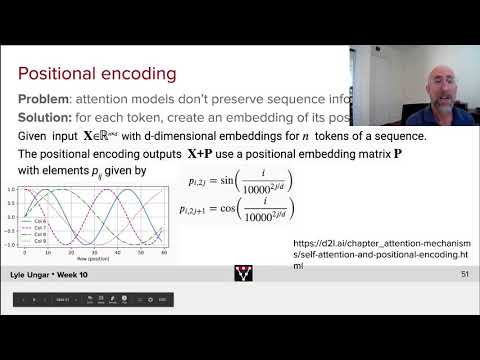

1:22 - Absolute positional embeddings

3:19 - Relative positional embeddings

5:51 - Rotary positional embeddings

7:56 - Matrix formulation

9:31 - Implementation

10:38 - Experiments and conclusion
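As a rough illustration of the claim that rotary embeddings combine absolute and relative encodings, here is a minimal sketch in plain Python. It is not the video's implementation: the function names `rope` and `dot`, the base of 10000, the pairing of adjacent dimensions, and the sample vectors are all assumptions chosen for the example. Each vector is rotated using only its own absolute position, yet the resulting attention score depends only on the offset between the two positions.

```python
import math

def rope(x, position, base=10000.0):
    """Rotate each adjacent pair of x by an angle proportional to `position`.

    Assumption: pair i uses frequency base ** (-2 * i / d), a common choice
    for rotary embeddings; the names here are illustrative only.
    """
    d = len(x)
    if d % 2 != 0:
        raise ValueError("rotary embeddings need an even dimension")
    out = []
    for i in range(d // 2):
        theta = base ** (-2.0 * i / d)       # frequency for this pair
        angle = position * theta             # depends on absolute position
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[2 * i], x[2 * i + 1]
        out.extend([a * c - b * s, a * s + b * c])  # 2-D rotation of (a, b)
    return out

def dot(u, v):
    """Plain dot product, standing in for an unscaled attention score."""
    return sum(a * b for a, b in zip(u, v))

# Hypothetical query and key vectors.
q = [0.5, -1.0, 2.0, 0.25]
k = [1.5, 0.3, -0.7, 1.0]

score_a = dot(rope(q, 3), rope(k, 1))    # positions 3 and 1, offset 2
score_b = dot(rope(q, 10), rope(k, 8))   # positions 10 and 8, offset 2
score_c = dot(rope(q, 10), rope(k, 3))   # positions 10 and 3, offset 7
```

Under these assumptions, `score_a` and `score_b` agree up to floating-point error because both pairs are two positions apart, while `score_c` differs because the offset changed. The rotation also leaves each vector's length unchanged.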
