Positional embeddings in transformers EXPLAINED | Demystifying positional encodings.

What are positional embeddings / encodings?
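Self-attention on its own has no notion of word order. It compares tokens only by content, so two identical tokens at different positions receive identical outputs. The sketch below illustrates this with a deliberately simplified single-head attention in plain Python. It assumes identity query/key/value projections, made-up 2-d embeddings, and hypothetical position vectors `pos`; none of these names or values come from the video.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with identity projections
    (Q = K = V = x): a deliberate simplification for illustration."""
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out

def add_positions(x, pos):
    """Element-wise sum of token embeddings and position vectors."""
    return [[a + b for a, b in zip(t, p)] for t, p in zip(x, pos)]

# Made-up 2-d embedding: the same token "A" appears twice in the sequence.
A = [1.0, 0.0]
seq = [A, A]
# Hypothetical position vectors, one per slot (assumed values).
pos = [[0.0, 0.1], [0.1, 0.0]]

no_pos = self_attention(seq)
with_pos = self_attention(add_positions(seq, pos))

print(no_pos[0] == no_pos[1])      # True: both copies of "A" look identical
print(with_pos[0] == with_pos[1])  # False: position now tells them apart
```

Adding the position vectors turns the two copies of "A" into distinct inputs, so attention produces different outputs for them. In a real transformer, positional embeddings do this job, alongside learned projections and far more dimensions.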

► Outline:

00:00 What are positional embeddings?

03:39 Requirements for positional embeddings

04:23 Sines, cosines explained: The original solution from the “Attention is all you need” paper
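The original solution from "Attention Is All You Need" gives each position pos a d_model-dimensional vector with PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). The plain-Python sketch below builds these vectors. It then checks three properties commonly cited as requirements for positional encodings:

- values stay in [-1, 1];
- every position gets a distinct vector;
- PE(pos + k) can be obtained from PE(pos) by a rotation that depends only on the offset k.

These checks are not necessarily the exact list given in the video. The function names, d_model = 16, and the 50-position range are illustrative assumptions.

```python
import math

def sinusoidal_encoding(pos, d_model, base=10000.0):
    """Encoding for one position using the sine/cosine formula from
    "Attention Is All You Need". Assumes d_model is even."""
    pe = [0.0] * d_model
    for i in range(0, d_model, 2):          # i = 2k indexes each sin/cos pair
        freq = 1.0 / (base ** (i / d_model))
        pe[i] = math.sin(pos * freq)        # PE(pos, 2k)
        pe[i + 1] = math.cos(pos * freq)    # PE(pos, 2k+1)
    return pe

def shift_by(pe, k, d_model, base=10000.0):
    """Rotate every (sin, cos) pair by k * freq. By the angle-addition
    identities this yields PE(pos + k) from PE(pos) without knowing pos."""
    out = [0.0] * d_model
    for i in range(0, d_model, 2):
        freq = 1.0 / (base ** (i / d_model))
        s, c = pe[i], pe[i + 1]
        out[i] = s * math.cos(k * freq) + c * math.sin(k * freq)
        out[i + 1] = c * math.cos(k * freq) - s * math.sin(k * freq)
    return out

d_model = 16  # illustrative size, assumed
table = [sinusoidal_encoding(p, d_model) for p in range(50)]

bounded = all(-1.0 <= v <= 1.0 for row in table for v in row)
unique = len({tuple(row) for row in table}) == len(table)
relative = all(
    math.isclose(a, b, abs_tol=1e-9)
    for a, b in zip(shift_by(table[5], 3, d_model), table[8])
)
print(bounded, unique, relative)  # True True True
```

The rotation in `shift_by` follows from sin(a + b) = sin a cos b + cos a sin b and cos(a + b) = cos a cos b - sin a sin b, applied separately to each frequency pair. This is the sense in which a fixed offset k acts as a linear function of PE(pos).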

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

NEW (channel update):

🔥 Optionally, buy us a coffee to boost our Coffee Bean production! ☕

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

Paper 📄

Music 🎵 :

---------------------------

🔗 Links:

#AICoffeeBreak #MsCoffeeBean #MachineLearning #AI #research

Positional embeddings in transformers EXPLAINED | Demystifying positional encodings.

Positional Encoding in Transformer Neural Networks Explained

How positional encoding works in transformers?

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Visual Guide to Transformer Neural Networks - (Episode 1) Position Embeddings

RoPE (Rotary positional embeddings) explained: The positional workhorse of modern LLMs

Transformer Positional Embeddings With A Numerical Example.

Rotary Positional Embeddings: Combining Absolute and Relative

Coding the entire LLM Transformer Block

Illustrated Guide to Transformers Neural Network: A step by step explanation

Postitional Encoding

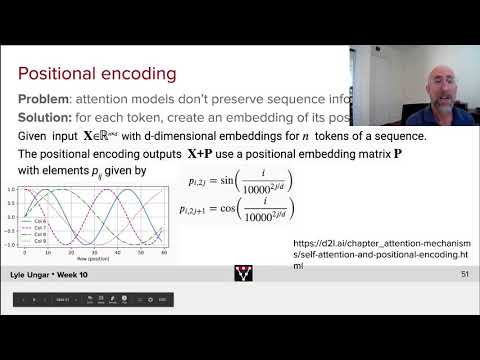

Positional encodings in transformers (NLP817 11.5)

Positional Encoding and Input Embedding in Transformers - Part 3

ChatGPT Position and Positional embeddings: Transformers & NLP 3

Chatgpt Transformer Positional Embeddings in 60 seconds

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

Transformer Embeddings - EXPLAINED!

What is Positional Encoding in Transformer?

Arithmetic Transformers with Abacus Positional Embeddings | AI Paper Explained

Attention in transformers, visually explained | Chapter 6, Deep Learning

What is Positional Encoding used in Transformers in NLP

LLaMA explained: KV-Cache, Rotary Positional Embedding, RMS Norm, Grouped Query Attention, SwiGLU

Word Embeddings & Positional Encoding in NLP Transformer model explained - Part 1

Transformers, explained: Understand the model behind GPT, BERT, and T5

Комментарии

0:09:40

0:09:40

0:11:54

0:11:54

0:05:36

0:05:36

0:36:15

0:36:15

0:12:23

0:12:23

0:14:06

0:14:06

0:06:21

0:06:21

0:11:17

0:11:17

0:45:06

0:45:06

0:15:01

0:15:01

0:02:13

0:02:13

0:19:29

0:19:29

0:09:33

0:09:33

0:15:46

0:15:46

0:01:05

0:01:05

0:58:04

0:58:04

0:15:43

0:15:43

0:00:57

0:00:57

0:04:52

0:04:52

0:26:10

0:26:10

0:03:29

0:03:29

1:10:55

1:10:55

0:21:31

0:21:31

0:09:11

0:09:11