Transformers and Positional Embedding: A Step-by-Step NLP Tutorial for Mastery


🚀 Introduction to Transformers in NLP | PositionalEmbedding Layer 🚀

Discover the powerful world of Transformers in Natural Language Processing (NLP)! In this tutorial, we explore the main components of the Transformer architecture, with a focus on the essential "PositionalEmbedding" layer.

🎯 Learn the Advantages and Limitations of Transformers.

🎓 Implement Positional Encoding and Understand its Significance.

💡 Build a Custom "PositionalEmbedding" Layer in TensorFlow.

💻 Test the Layer with Random Input Sequences.
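The steps listed above can be sketched without any framework. The block below is a minimal, dependency-free illustration and not the video's TensorFlow code: it assumes the sinusoidal encoding from the original Transformer paper (sine in even columns, cosine in odd columns) and adds it to token embeddings scaled by the square root of the model width. The class name `PositionalEmbedding` mirrors the layer named in the description, but its constructor arguments (`vocab_size`, `d_model`, `max_length`, `seed`) and the random initialisation are assumptions made for this example.

```python
import math
import random


def positional_encoding(length, depth):
    """Sinusoidal encoding table of shape (length, depth).

    Even columns hold sin, odd columns hold cos; each (2k, 2k+1) pair
    shares one frequency, as in the original Transformer paper.
    """
    table = []
    for pos in range(length):
        row = []
        for i in range(depth):
            angle = pos / (10000 ** ((2 * (i // 2)) / depth))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


class PositionalEmbedding:
    """Hypothetical stand-in for the tutorial's TensorFlow layer."""

    def __init__(self, vocab_size, d_model, max_length=512, seed=0):
        rng = random.Random(seed)
        self.d_model = d_model
        # Randomly initialised token embeddings (these would be learned
        # weights in a real model; random values are an assumption here).
        self.embedding = [
            [rng.uniform(-0.05, 0.05) for _ in range(d_model)]
            for _ in range(vocab_size)
        ]
        # The positional table is fixed, so it is computed once up front.
        self.pos_encoding = positional_encoding(max_length, d_model)

    def __call__(self, token_ids):
        # Scale embeddings by sqrt(d_model), then add the position signal.
        scale = math.sqrt(self.d_model)
        return [
            [scale * e + p
             for e, p in zip(self.embedding[tok], self.pos_encoding[pos])]
            for pos, tok in enumerate(token_ids)
        ]


# Test the layer with a random input sequence of 5 token ids.
rng = random.Random(42)
layer = PositionalEmbedding(vocab_size=100, d_model=8, max_length=16)
sequence = [rng.randrange(100) for _ in range(5)]
output = layer(sequence)
print(len(output), len(output[0]))  # 5 8
```

The output has one row per input token and `d_model` values per row. Because the positional table depends only on position, the same token id at two different positions yields two different output vectors, which is the property that lets attention layers distinguish word order.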

Unleash the potential of Transformers in NLP and level up your machine learning skills. Subscribe now for more exciting tutorials on building practical Transformer models for real-world NLP tasks! 🌟

#Transformers #NLP #MachineLearning #Tutorial #PositionalEmbedding #TensorFlow

Positional embeddings in transformers EXPLAINED | Demystifying positional encodings.

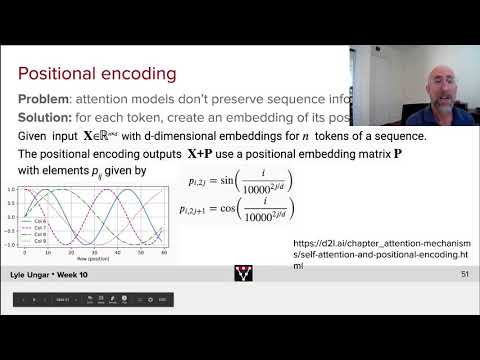

How positional encoding works in transformers?

Transformer Positional Embeddings With A Numerical Example.

Positional Encoding in Transformer Neural Networks Explained

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Vision Transformer Quick Guide - Theory and Code in (almost) 15 min

Chatgpt Transformer Positional Embeddings in 60 seconds

Arithmetic Transformers with Abacus Positional Embeddings | AI Paper Explained

Gen AI Transformers - Input Embedding and Positional Encoding

Visual Guide to Transformer Neural Networks - (Episode 1) Position Embeddings

Postitional Encoding

What is Positional Encoding in Transformer?

Positional embedding in Transformers

Illustrated Guide to Transformers Neural Network: A step by step explanation

Pytorch for Beginners #30 | Transformer Model - Position Embeddings

Attention in transformers, step-by-step | DL6

Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

Positional Encoding and Input Embedding in Transformers - Part 3

RoPE (Rotary positional embeddings) explained: The positional workhorse of modern LLMs

Transformers and Positional Embedding: A Step-by-Step NLP Tutorial for Mastery

Word Embedding & Position Encoder in Transformer

ChatGPT Position and Positional embeddings: Transformers & NLP 3

How Rotary Position Embedding Supercharges Modern LLMs

Rotary Positional Embeddings: Combining Absolute and Relative

Комментарии

0:09:40

0:09:40

0:05:36

0:05:36

0:06:21

0:06:21

0:11:54

0:11:54

0:36:15

0:36:15

0:16:51

0:16:51

0:01:05

0:01:05

0:04:52

0:04:52

0:00:15

0:00:15

0:12:23

0:12:23

0:02:13

0:02:13

0:00:57

0:00:57

0:23:41

0:23:41

0:15:01

0:15:01

0:11:22

0:11:22

0:26:10

0:26:10

0:58:04

0:58:04

0:09:33

0:09:33

0:14:06

0:14:06

0:33:11

0:33:11

0:00:44

0:00:44

0:15:46

0:15:46

0:13:39

0:13:39

0:11:17

0:11:17