Adjoint Sensitivities over nonlinear equation with JAX Automatic Differentiation

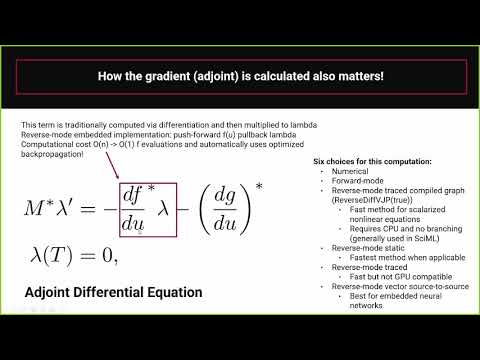

Taking derivatives by hand is tedious, especially if the underlying function is complicated and nested. We can simplify this error-prone process by employing Algorithmic/Automatic Differentiation (whose reverse mode is known as backpropagation in deep learning), a technique that produces derivatives of computer programs accurate to machine precision.
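As a small illustration of that idea, the sketch below differentiates a nested function once by hand and once with `jax.grad`. The function `f` and the evaluation point are hypothetical choices made for this example only; they are not taken from the video.

```python
import jax
import jax.numpy as jnp


def f(x):
    # A nested function: sin(exp(x^2))
    return jnp.sin(jnp.exp(x**2))


def df_by_hand(x):
    # Chain rule applied manually: cos(exp(x^2)) * exp(x^2) * 2x
    return jnp.cos(jnp.exp(x**2)) * jnp.exp(x**2) * 2.0 * x


# jax.grad returns a new function that evaluates df/dx
df_auto = jax.grad(f)

x = 0.7
print("by hand:", df_by_hand(x))
print("autodiff:", df_auto(x))
```

Both values should agree up to floating point round-off, without the chain rule having to be written out manually.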

Modern deep learning frameworks such as TensorFlow, PyTorch, or JAX essentially combine high-performance array-based computing (on accelerators like GPUs) with a tool for Automatic Differentiation. Beyond evaluating and training deep neural networks, we can use this tool to obtain the additional derivative quantities required by forward or adjoint sensitivity methods.
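A minimal sketch of how this could look for a scalar nonlinear equation follows. It assumes a hypothetical residual `f(u, theta) = u^3 + u - theta` and loss `J(u, theta) = u^2`, which are illustrative choices and not necessarily the ones used in the video. The partial derivatives needed by the adjoint method all come from `jax.grad`, and double precision is enabled because JAX defaults to single precision.

```python
import jax

# JAX uses float32 by default; switch to float64 before creating any arrays
jax.config.update("jax_enable_x64", True)


def residual(u, theta):
    # Hypothetical nonlinear equation f(u, theta) = 0 that defines u(theta)
    return u**3 + u - theta


def loss(u, theta):
    # Hypothetical loss functional J(u, theta)
    return u**2


def solve(theta, u0=0.5, n_iter=20):
    # Plain Newton iteration; df/du is obtained by automatic differentiation
    df_du = jax.grad(residual, argnums=0)
    u = u0
    for _ in range(n_iter):
        u = u - residual(u, theta) / df_du(u, theta)
    return u


def adjoint_sensitivity(theta):
    u = solve(theta)
    # Partial derivatives of loss and residual via automatic differentiation
    dJ_du, dJ_dtheta = jax.grad(loss, argnums=(0, 1))(u, theta)
    df_du, df_dtheta = jax.grad(residual, argnums=(0, 1))(u, theta)
    # Adjoint equation (scalar case): lam * df/du = -dJ/du
    lam = -dJ_du / df_du
    # Total derivative: dJ/dtheta = partial J/partial theta + lam * partial f/partial theta
    return dJ_dtheta + lam * df_dtheta


theta = 2.0
sens = adjoint_sensitivity(theta)
print("dJ/dtheta:", sens)  # approximately 0.5, since u(2) = 1 and du/dtheta = 1/4
```

For this choice the result can be checked by hand: the root at `theta = 2` is `u = 1`, so `df/du = 4`, `df/dtheta = -1`, and `dJ/du = 2`, giving `dJ/dtheta = 0.5`.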

-------

-------

Timestamps:

00:00 Intro

00:35 Recap on sensitivities for Nonlinear Equations

00:59 Additional derivative information

01:43 Status Quo

02:05 Change to JAX NumPy

03:00 Use JAX Automatic Differentiation

05:24 Double precision floating points in JAX

06:25 Outro

Using JAX Jacobians for Adjoint Sensitivities over Nonlinear Systems of Equations

Adjoint Sensitivities of a Non-Linear system of equations | Full Derivation

Python Example: Adjoint Sensitivities over nonlinear SYSTEMS of equations

Adjoint State Method for an ODE | Adjoint Sensitivity Analysis

Python Example for Adjoint Sensitivities of Nonlinear Equation

Lagrangian Perspective on the Derivation of Adjoint Sensitivities of Nonlinear Systems

Lecture 4 Part 2: Nonlinear Root Finding, Optimization, and Adjoint Gradient Methods

MIT Numerical Methods for PDEs Lecture 18: Adjoint Sensitivity Analysis of Nonlinear Systems

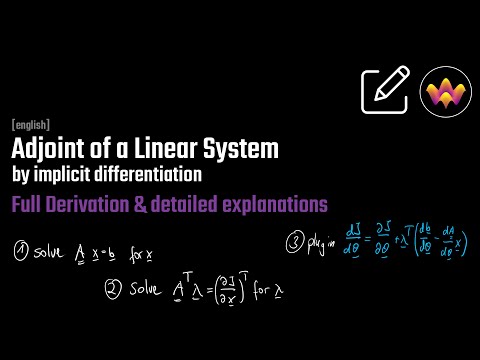

Adjoint Equation of a Linear System of Equations - by implicit derivative

Adjoint Sensitivities of a Linear System of Equations - derived using the Lagrangian

Lecture 6 Part 1: Adjoint Differentiation of ODE Solutions

A Comparison of Automatic Differentiation and Adjoints for Derivatives of Differential Equations

MIT Numerical Methods for PDEs Lecture 18: Adjoint Sensitivity Analysis of Poisson's equation

MIT Numerical Methods for PDEs Lecture 18: Adjoint Sensitivity Analysis of Linear Algebraic Systems

Adjoint for implicit governing equations

Linear Operators and their Adjoints

Introduction to the adjoint method

SUNDIALS: Suite of Nonlinear & Differential Algebraic Equation Solvers | Carol Woodward, LLNL

Adjoint for steady PDEs

EE-565: Lecture-20 (Nonlinear Control Systems): Sensitivity Equation

Neural Ordinary Differential Equations

A simplistic first example of the adjoint method

Adjoint Sensitivities in Julia with Zygote & ChainRules
