Adjoint Sensitivities of a Non-Linear system of equations | Full Derivation

A linear system of equations is a special case of a non-linear system of equations. Let's use the knowledge we obtained in the previous video to derive the adjoint problem in the more general case as well.
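As a rough orientation, the derivation can be sketched as follows. The notation is an assumption made for this summary and may differ from the video: $f(x, \theta) = 0$ is the non-linear system with solution $x \in \mathbb{R}^N$ and parameters $\theta \in \mathbb{R}^P$, $J(x, \theta)$ is the scalar-valued loss, gradients are row vectors, and $\partial f / \partial x$ is assumed invertible at the solution. The sign convention for the adjoint variable $\lambda$ is also a choice; other sources absorb the minus sign into $\lambda$.

$$
\begin{aligned}
\frac{dJ}{d\theta} &= \frac{\partial J}{\partial \theta} + \frac{\partial J}{\partial x}\,\frac{dx}{d\theta}
&& \text{(total derivative)} \\
0 &= \frac{\partial f}{\partial x}\,\frac{dx}{d\theta} + \frac{\partial f}{\partial \theta}
&& \text{(implicit differentiation of } f(x(\theta), \theta) = 0\text{)} \\
\frac{dJ}{d\theta} &= \frac{\partial J}{\partial \theta} - \frac{\partial J}{\partial x}\left(\frac{\partial f}{\partial x}\right)^{-1}\frac{\partial f}{\partial \theta}
&& \text{(plug back in)} \\
\lambda^T &:= \frac{\partial J}{\partial x}\left(\frac{\partial f}{\partial x}\right)^{-1}
\;\Longleftrightarrow\;
\left(\frac{\partial f}{\partial x}\right)^{T}\lambda = \left(\frac{\partial J}{\partial x}\right)^{T}
&& \text{(adjoint system, linear in } \lambda\text{)} \\
\frac{dJ}{d\theta} &= \frac{\partial J}{\partial \theta} - \lambda^T\,\frac{\partial f}{\partial \theta}
&& \text{(sensitivities)}
\end{aligned}
$$

For the linear special case $f(x, \theta) = A(\theta)\,x - b(\theta)$, the Jacobian $\partial f / \partial x$ is simply $A$, so the adjoint system reduces to $A^T \lambda = (\partial J / \partial x)^T$.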

Timestamps:

00:00 Introduction

01:04 Big Non-Linear Systems

01:57 Scalar-Valued Loss Function

02:36 Parameters involved

03:07 Dimensions

03:56 Total derivative

04:22 Dimensions & row-vector gradients

05:46 Difficult Quantity

06:09 Implicit Differentiation

08:41 Plug back in

09:23 Two ways of bracketing

11:26 Identifying the adjoint

13:24 Adjoint System (is linear)

15:09 Strategy for obtaining the sensitivities

16:52 Remarks

19:52 Comparing against linear systems

21:17 Total and partial derivatives

26:31 Outro
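The strategy outlined in the timestamps (solve the non-linear system, solve the linear adjoint system, assemble the sensitivities) can be illustrated with a minimal NumPy sketch. Everything in it is an assumption made for illustration and not taken from the video: the 2x2 toy system in `residual`, the quadratic `loss`, the hand-coded Jacobians, the plain Newton solver, and the sign convention $(\partial f/\partial x)^T \lambda = (\partial J/\partial x)^T$ with $dJ/d\theta = \partial J/\partial \theta - \lambda^T\, \partial f/\partial \theta$.

```python
import numpy as np

# Hypothetical toy problem: f(x, theta) = 0 with x in R^2 and theta in R^2.
def residual(x, theta):
    return np.array([
        x[0] ** 2 + theta[0] * x[1] - 1.0,
        x[0] + x[1] ** 3 - theta[1],
    ])

def residual_jac_x(x, theta):
    # Partial derivative df/dx, shape (2, 2)
    return np.array([
        [2.0 * x[0], theta[0]],
        [1.0, 3.0 * x[1] ** 2],
    ])

def residual_jac_theta(x, theta):
    # Partial derivative df/dtheta, shape (2, 2)
    return np.array([
        [x[1], 0.0],
        [0.0, -1.0],
    ])

# Hypothetical scalar-valued loss J(x, theta) = 0.5 * ||x||^2
def loss(x, theta):
    return 0.5 * np.sum(x ** 2)

def loss_grad_x(x, theta):
    return x  # dJ/dx

def loss_grad_theta(x, theta):
    return np.zeros_like(theta)  # J has no explicit theta dependence here

def solve_forward(theta, x0, tol=1e-12, max_iter=50):
    # Plain Newton iteration for the non-linear forward problem
    x = np.array(x0, dtype=float)
    for _ in range(max_iter):
        f = residual(x, theta)
        if np.linalg.norm(f) < tol:
            break
        x = x - np.linalg.solve(residual_jac_x(x, theta), f)
    return x

def adjoint_gradient(theta, x0):
    # 1) Non-linear forward solve
    x = solve_forward(theta, x0)
    # 2) Linear adjoint solve: (df/dx)^T lambda = (dJ/dx)^T
    lam = np.linalg.solve(residual_jac_x(x, theta).T, loss_grad_x(x, theta))
    # 3) Assemble dJ/dtheta = dJ/dtheta (partial) - lambda^T df/dtheta
    return loss_grad_theta(x, theta) - lam @ residual_jac_theta(x, theta)

def finite_difference_gradient(theta, x0, eps=1e-6):
    # Reference: central differences, two forward solves per parameter
    grad = np.zeros_like(theta)
    for i in range(theta.size):
        step = np.zeros_like(theta)
        step[i] = eps
        j_plus = loss(solve_forward(theta + step, x0), theta + step)
        j_minus = loss(solve_forward(theta - step, x0), theta - step)
        grad[i] = (j_plus - j_minus) / (2.0 * eps)
    return grad

theta = np.array([0.5, 2.0])
x0 = np.array([1.0, 1.0])
print("adjoint:           ", adjoint_gradient(theta, x0))
print("finite differences:", finite_difference_gradient(theta, x0))
```

The adjoint route needs one non-linear solve and one linear solve regardless of the number of parameters, whereas the finite-difference reference needs two non-linear solves per parameter. In practice the hand-coded Jacobians would typically be replaced by automatic differentiation.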

Adjoint State Method for an ODE | Adjoint Sensitivity Analysis

MIT Numerical Methods for PDEs Lecture 18: Adjoint Sensitivity Analysis of Nonlinear Systems

Adjoint Sensitivities over nonlinear equation with JAX Automatic Differentiation

Python Example: Adjoint Sensitivities over nonlinear SYSTEMS of equations

Using JAX Jacobians for Adjoint Sensitivities over Nonlinear Systems of Equations

Lagrangian Perspective on the Derivation of Adjoint Sensitivities of Nonlinear Systems

Lecture 4 Part 2: Nonlinear Root Finding, Optimization, and Adjoint Gradient Methods

Python Example for Adjoint Sensitivities of Nonlinear Equation

Lecture 6 Part 1: Adjoint Differentiation of ODE Solutions

MIT Numerical Methods for PDEs Lecture 18: Adjoint Sensitivity Analysis of Linear Algebraic Systems

Automatic differentiation | New derivations of nonlinear solve and ODE adjoints

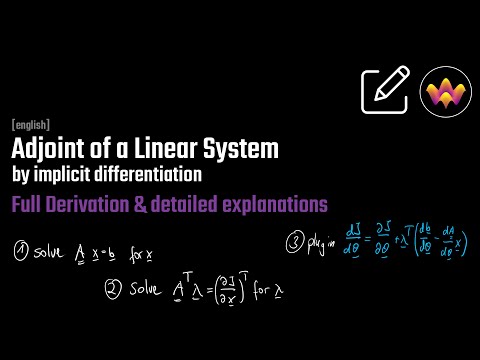

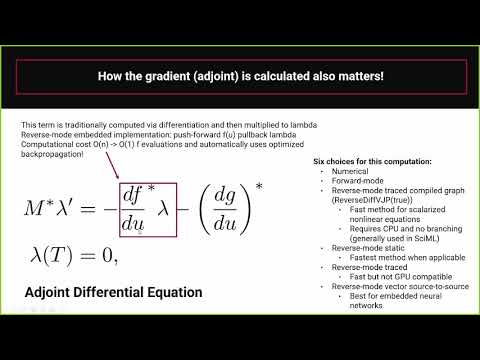

Adjoint Equation of a Linear System of Equations - by implicit derivative

SUNDIALS: Suite of Nonlinear & Differential Algebraic Equation Solvers | Carol Woodward, LLNL

Adjoint Sensitivities of a Linear System of Equations - derived using the Lagrangian

Second-order adjoint-based sensitivity for hydrodynamic stability and control

A simplistic first example of the adjoint method

SUNDIALS: Suite of Nonlinear & Differential/Algebraic Equation Solvers | C. Woodward, LLNL

Differentiable Programming Part 2: Adjoint Derivation for (Neural) ODEs and Nonlinear Solve

Python Example for the Adjoint Sensitivities of a Linear System | Full Details & Timings

Adjoint for implicit governing equations

MIT Numerical Methods for PDEs Lecture 18: Adjoint Sensitivity Analysis of Poisson's equation

A Comparison of Automatic Differentiation and Adjoints for Derivatives of Differential Equations

Composition with the Adjoint method
