filmov

tv

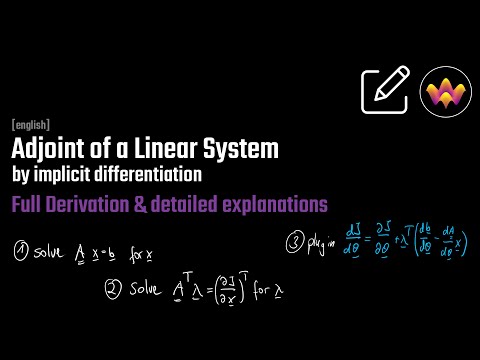

Adjoint Sensitivities of a Linear System of Equations - derived using the Lagrangian

Показать описание

We can also arrive at the equations for the adjoint sensitivities of a linear system using a different point of view. Here, we frame it as an equality-constrained optimization problem. Then, we can build a Lagrangian of the problem, which total derivative is identical to the sensitivities, we are interested in. The involved Lagrange Multiplier can be chosen arbitrarily, since the primal feasible already constraints our minimum. So, let's choose in a way such that we avoid the computation of a difficult quantity. And that's all the magic! :) After some more manipulation, we arrive at the same equations as in the previous video.

-------

-------

Timestamps:

00:00 Introduction

00:49 Similar to using implicit differentiation

01:15 Implicit Relation

01:48 Dimensions of the quantities

02:26 Lagrangian for Equality-Constrained Optimization

03:37 Total derivative of Lagrangian

05:02 Gradient is a row vector

07:31 The difficult quantity

08:33 Clever Rearranging

09:27 Making a coefficient zero

10:31 The adjoint system

12:01 The gradient is now easier

12:37 Total derivative of Loss

14:35 Strategy for d_J/d_theta

15:47 Scales constantly in the number of parameters

16:27 The derivatives left in the equation

17:01 Outro

Adjoint Sensitivities of a Linear System of Equations - derived using the Lagrangian

Python Example for the Adjoint Sensitivities of a Linear System | Full Details & Timings

Adjoint Equation of a Linear System of Equations - by implicit derivative

MIT Numerical Methods for PDEs Lecture 18: Adjoint Sensitivity Analysis of Linear Algebraic Systems

Adjoint State Method for an ODE | Adjoint Sensitivity Analysis

MIT Numerical Methods for PDEs Lecture 18: Adjoint Sensitivity Analysis of Nonlinear Systems

Adjoint Sensitivities of a Non-Linear system of equations | Full Derivation

MIT Numerical Methods for PDEs Lecture 18: Adjoint Sensitivity Analysis of Poisson's equation

'Lattice Hamiltonian for Adjoint QCD2', Ross Dempsey

Adjoint for implicit governing equations

Adjoint Method

Adjoint Sensitivities over nonlinear equation with JAX Automatic Differentiation

Second-order adjoint-based sensitivity for hydrodynamic stability and control

Adjoint sensitivity to QGPV and to Ertel PV

Lagrangian Perspective on the Derivation of Adjoint Sensitivities of Nonlinear Systems

Python Example for Adjoint Sensitivities of Nonlinear Equation

Introduction to the adjoint method

Composition with the Adjoint method

Using JAX Jacobians for Adjoint Sensitivities over Nonlinear Systems of Equations

Neural Ordinary Differential Equations With DiffEqFlux | Jesse Bettencourt | JuliaCon 2019

Adjoint of a method

Python Example: Adjoint Sensitivities over nonlinear SYSTEMS of equations

SUNDIALS: Suite of Nonlinear & Differential/Algebraic Equation Solvers | C. Woodward, LLNL

Inverse Problems 17: Why does the adjoint state method work?

Комментарии

0:17:38

0:17:38

0:43:35

0:43:35

0:28:47

0:28:47

0:12:07

0:12:07

0:43:27

0:43:27

0:12:53

0:12:53

0:27:14

0:27:14

0:09:54

0:09:54

0:40:49

0:40:49

0:03:35

0:03:35

0:05:19

0:05:19

0:07:35

0:07:35

0:14:59

0:14:59

0:16:18

0:16:18

0:15:52

0:15:52

0:22:01

0:22:01

0:07:25

0:07:25

0:10:43

0:10:43

0:12:53

0:12:53

0:14:29

0:14:29

0:15:42

0:15:42

0:29:38

0:29:38

0:27:37

0:27:37

0:08:02

0:08:02