Neural Ordinary Differential Equations

Показать описание

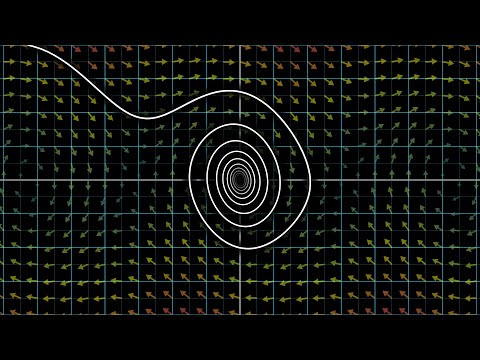

Abstract:

We introduce a new family of deep neural network models. Instead of specifying a discrete sequence of hidden layers, we parameterize the derivative of the hidden state using a neural network. The output of the network is computed using a black-box differential equation solver. These continuous-depth models have constant memory cost, adapt their evaluation strategy to each input, and can explicitly trade numerical precision for speed. We demonstrate these properties in continuous-depth residual networks and continuous-time latent variable models. We also construct continuous normalizing flows, a generative model that can train by maximum likelihood, without partitioning or ordering the data dimensions. For training, we show how to scalably backpropagate through any ODE solver, without access to its internal operations. This allows end-to-end training of ODEs within larger models.
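In this model the hidden state evolves as dh/dt = f(h(t), t, θ), and the network's output is the state h(t1) returned by an ODE solver. The pure-Python sketch below illustrates that forward pass under stated assumptions. The dynamics network f is a hypothetical one-hidden-layer tanh network (the `Dynamics` class, its sizes and its random initialization are illustrative only). A fixed-step fourth-order Runge-Kutta solver (`rk4_solve`, also hypothetical) stands in for the adaptive black-box solver the abstract describes. The `steps` argument shows the precision-for-speed trade-off in its simplest form. The sketch does not implement the adjoint-based backpropagation behind the constant-memory claim.

```python
import math
import random


class Dynamics:
    """Hypothetical dynamics network f(h, t; theta): one tanh hidden layer.

    Layer sizes and initialization are illustrative assumptions, not the paper's.
    """

    def __init__(self, dim, hidden, seed=0):
        rng = random.Random(seed)
        # The extra input column feeds the time t into the network.
        self.w1 = [[rng.gauss(0.0, 0.5) for _ in range(dim + 1)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.gauss(0.0, 0.5) for _ in range(hidden)] for _ in range(dim)]
        self.b2 = [0.0] * dim

    def __call__(self, h, t):
        x = list(h) + [t]
        z = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # Returns dh/dt, a vector with the same size as h.
        return [sum(w * zi for w, zi in zip(row, z)) + b
                for row, b in zip(self.w2, self.b2)]


def rk4_solve(f, h0, t0, t1, steps):
    """Integrate dh/dt = f(h, t) from t0 to t1 with fixed-step classical RK4."""
    dt = (t1 - t0) / steps
    h, t = list(h0), t0
    for _ in range(steps):
        k1 = f(h, t)
        k2 = f([hi + 0.5 * dt * ki for hi, ki in zip(h, k1)], t + 0.5 * dt)
        k3 = f([hi + 0.5 * dt * ki for hi, ki in zip(h, k2)], t + 0.5 * dt)
        k4 = f([hi + dt * ki for hi, ki in zip(h, k3)], t + dt)
        h = [hi + dt / 6.0 * (a + 2 * b + 2 * c + d)
             for hi, a, b, c, d in zip(h, k1, k2, k3, k4)]
        t += dt
    return h


if __name__ == "__main__":
    f = Dynamics(dim=2, hidden=8)
    x = [1.0, -0.5]  # the input doubles as the initial hidden state h(t0)
    reference = rk4_solve(f, x, 0.0, 1.0, steps=512)
    # Fewer solver steps are cheaper but less accurate.
    for steps in (2, 8, 32):
        out = rk4_solve(f, x, 0.0, 1.0, steps)
        err = max(abs(a - b) for a, b in zip(out, reference))
        print(f"steps={steps:3d}  max error vs. 512-step solution: {err:.2e}")
```

A fixed step count keeps the example short and deterministic. An adaptive solver would instead choose its step sizes per input from an error tolerance, which is how these models "adapt their evaluation strategy to each input."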

Authors:

Ricky T. Q. Chen, Yulia Rubanova, Jesse Bettencourt, David Duvenaud

Related videos:

Neural Ordinary Differential Equations (0:22:19)

Neural ordinary differential equations - NODEs (DS4DS 4.07) (0:18:16)

Neural Ordinary Differential Equations (0:35:33)

ODE | Neural Ordinary Differential Equations - Best Paper Awards NeurIPS (0:12:00)

Neural ODEs (NODEs) [Physics Informed Machine Learning] (0:24:37)

Neural Differential Equations (0:35:18)

Programming for AI (AI504, Fall 2020), Class 14: Neural Ordinary Differential Equations (1:19:35)

Programming for AI (AI504, Fall 2020), Practice 14: Neural Ordinary Differential Equations (0:30:10)

NeuroDynamics.jl: Next generation models in neuroscience | ElGazzar | JuliaCon 2024 (0:09:55)

Neural ordinary differential equations (0:36:38)

On Neural Differential Equations (1:06:36)

Neural Ordinary Differential Equations – Александра Волохова (0:17:05)

David Duvenaud | Reflecting on Neural ODEs | NeurIPS 2019 (0:21:02)

Differential equations, a tourist's guide | DE1 (0:27:16)

Bayesian Neural Ordinary Differential Equations | Raj Dandekar | JuliaCon2021 (0:23:20)

Neural Ordinary Differential Equations (0:45:31)

[Paper Review] Neural Ordinary Differential Equations (Neural ODE) (0:39:56)

Neural Ordinary Differential Equations With DiffEqFlux | Jesse Bettencourt | JuliaCon 2019 (0:14:29)

Fitting Neural Ordinary Differential Equations With DiffeqFlux.jl | Elisabeth Roesch | JuliaCon 2019 (0:29:29)

Neural Ordinary Differential Equation (0:03:42)

David Duvenaud: Neural Ordinary Equations (0:30:51)

Olof Mogren: Neural ordinary differential equations (0:36:38)

Neural ordinary differential equations (0:36:38)

Neural Ordinary Differential Equations | SAiDL | Reading Session (0:38:42)