
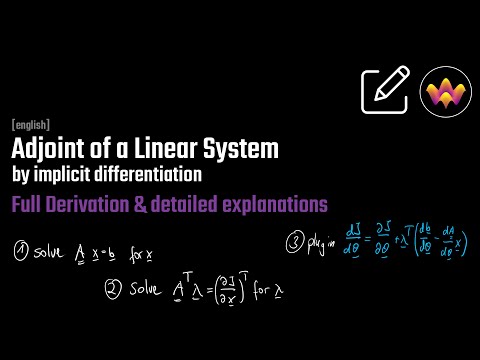

Adjoint Equation of a Linear System of Equations - by implicit derivative


How can you efficiently compute the derivatives of a loss function with respect to a parameter vector when an intermediate stage of the computation involves solving a linear system of equations, i.e., an implicit problem? If you use automatic differentiation, you might be inclined to simply differentiate through the linear solver, e.g., an LU or QR decomposition for dense matrices or an iterative solver for sparse systems. However, this approach introduces several problems that can make it infeasible in practice (e.g., vanishing or exploding gradients, and high memory requirements for storing intermediate values in reverse mode).

The remedy is a clever bracketing of the terms, which frames the task of computing the derivatives as the solution of another linear system of equations: the adjoint system. This adjoint system is easy to set up and involves the transpose of the system matrix (for real matrices, the transpose is also called the adjoint, hence the name).
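The bracketing argument can be written out compactly. This is a sketch in assumed notation, not necessarily the symbols used in the video: the system is $A(\theta)\,x = b(\theta)$ with $x, b \in \mathbb{R}^N$ and $\theta \in \mathbb{R}^P$, the scalar loss is $J(x(\theta), \theta)$, and partial derivatives of $J$ are row vectors. The "naive" route shown here (forming $dx/d\theta$ explicitly) is one natural reading of that step, assumed for illustration.

```latex
% Total derivative of the loss (a 1 x P row vector)
\frac{dJ}{d\theta} = \frac{\partial J}{\partial \theta} + \frac{\partial J}{\partial x}\,\frac{dx}{d\theta}

% Implicit differentiation of A(\theta) x = b(\theta); the j-th column of
% \partial(Ax)/\partial\theta is (\partial A / \partial \theta_j)\, x, with x held fixed
A\,\frac{dx}{d\theta} = \frac{\partial b}{\partial \theta} - \frac{\partial (A x)}{\partial \theta}
\quad\Longrightarrow\quad
\frac{dx}{d\theta} = A^{-1}\left(\frac{\partial b}{\partial \theta} - \frac{\partial (A x)}{\partial \theta}\right) \in \mathbb{R}^{N \times P}

% Naive approach: form dx/d\theta explicitly, i.e. P linear solves with A.
% Adjoint approach: bracket the first two factors instead
\frac{dJ}{d\theta} = \frac{\partial J}{\partial \theta}
  + \underbrace{\left(\frac{\partial J}{\partial x}\, A^{-1}\right)}_{\lambda^{\top}}
    \left(\frac{\partial b}{\partial \theta} - \frac{\partial (A x)}{\partial \theta}\right)

% Adjoint system: a single linear solve with the transposed matrix
A^{\top} \lambda = \left(\frac{\partial J}{\partial x}\right)^{\top}, \qquad \lambda \in \mathbb{R}^{N}

% Resulting loss gradient
\frac{dJ}{d\theta} = \frac{\partial J}{\partial \theta}
  + \lambda^{\top}\left(\frac{\partial b}{\partial \theta} - \frac{\partial (A x)}{\partial \theta}\right)
```

Only one solve with $A^{\top}$ is needed regardless of $P$, whereas forming $dx/d\theta$ takes $P$ solves with $A$; this is why the adjoint approach pays off for a scalar loss and many parameters.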

-------

Timestamps:

00:00 Introduction

01:50 Sensitivities

03:04 Implicit Relations vs. Automatic Differentiation

03:57 Dimensions of the variables

04:22 A (scalar-valued) loss function

05:00 Example for a loss function

05:39 Solution also depends on parameters

06:14 Gradient as Total Derivative

07:37 Gradient is a row vector

09:05 The difficult quantity

09:39 Implicit Differentiation

11:06 A naive approach

13:41 Problem of the naive approach

16:49 Remedy: Adjoint Method

19:04 Clever Bracketing

19:54 The adjoint variable

21:18 The adjoint system

22:13 Similar Complexity

22:44 Dimension of the adjoint

23:24 Strategy for loss gradient

25:03 Important finding

25:52 When to use adjoint?

26:33 How to get the other derivatives?

27:55 Outlook: Nested linear systems

28:09 Outro
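The loss-gradient strategy outlined in the chapters above can be checked numerically. The following NumPy sketch is not taken from the video: the affine parametrization of $A(\theta)$ and $b(\theta)$, the quadratic loss, and all function names (`assemble`, `rhs_theta_jacobian`, `gradient_naive`, `gradient_adjoint`) are hypothetical choices for illustration. It computes the gradient once by forming $dx/d\theta$ ($P$ solves with $A$) and once with the adjoint system (one solve with $A^{\top}$), and compares both against central finite differences.

```python
import numpy as np

# Hypothetical test problem (assumed for illustration, not from the video):
#   A(theta) = A0 + sum_j theta_j * A_j,   b(theta) = b0 + B @ theta
#   J(x, theta) = 0.5 * ||x - x_ref||^2 + 0.5 * alpha * ||theta||^2


def assemble(theta, A0, A_params, b0, B):
    """Build the system matrix A(theta) (N x N) and right-hand side b(theta) (N,)."""
    A = A0 + np.einsum("j,jmn->mn", theta, A_params)
    b = b0 + B @ theta
    return A, b


def loss(x, theta, x_ref, alpha):
    """Scalar loss J(x, theta)."""
    return 0.5 * np.sum((x - x_ref) ** 2) + 0.5 * alpha * np.sum(theta ** 2)


def rhs_theta_jacobian(x, A_params, B):
    """db/dtheta - d(A x)/dtheta with x held fixed, shape (N, P).

    Column j equals B[:, j] - A_j @ x.
    """
    return B - np.einsum("jmn,n->mj", A_params, x)


def gradient_naive(theta, A0, A_params, b0, B, x_ref, alpha):
    """Forms dx/dtheta explicitly: one solve with A per parameter (P right-hand sides)."""
    A, b = assemble(theta, A0, A_params, b0, B)
    x = np.linalg.solve(A, b)                                            # forward solve
    dx_dtheta = np.linalg.solve(A, rhs_theta_jacobian(x, A_params, B))  # (N, P)
    dJ_dx = x - x_ref          # partial J / partial x, shape (N,)
    dJ_dtheta = alpha * theta  # partial J / partial theta, shape (P,)
    return dJ_dtheta + dJ_dx @ dx_dtheta


def gradient_adjoint(theta, A0, A_params, b0, B, x_ref, alpha):
    """Adjoint method: a single solve with A^T, independent of P."""
    A, b = assemble(theta, A0, A_params, b0, B)
    x = np.linalg.solve(A, b)            # forward solve
    dJ_dx = x - x_ref
    dJ_dtheta = alpha * theta
    lam = np.linalg.solve(A.T, dJ_dx)    # adjoint system: A^T lambda = (dJ/dx)^T
    return dJ_dtheta + lam @ rhs_theta_jacobian(x, A_params, B)


# Random problem instance; the diagonal shift is meant to keep A comfortably invertible
rng = np.random.default_rng(0)
N, P = 6, 4
A0 = N * np.eye(N) + rng.standard_normal((N, N))
A_params = 0.1 * rng.standard_normal((P, N, N))
b0 = rng.standard_normal(N)
B = rng.standard_normal((N, P))
x_ref = rng.standard_normal(N)
alpha = 1e-2
theta = rng.standard_normal(P)

args = (A0, A_params, b0, B, x_ref, alpha)
g_naive = gradient_naive(theta, *args)
g_adjoint = gradient_adjoint(theta, *args)


def loss_of_theta(t):
    """Loss as a function of the parameters only (solves the system internally)."""
    A, b = assemble(t, A0, A_params, b0, B)
    return loss(np.linalg.solve(A, b), t, x_ref, alpha)


# Central finite differences as an independent check
eps = 1e-6
g_fd = np.array([
    (loss_of_theta(theta + eps * e) - loss_of_theta(theta - eps * e)) / (2 * eps)
    for e in np.eye(P)
])

print("naive   :", g_naive)
print("adjoint :", g_adjoint)
print("fin.diff:", g_fd)
print("naive == adjoint:", np.allclose(g_naive, g_adjoint))
print("adjoint ~ fin.diff:", np.allclose(g_adjoint, g_fd, atol=1e-6))
```

Both gradients evaluate the same expression with different bracketing, so they should agree up to rounding error; what differs is the number of linear solves. For larger dense problems one would typically factorize $A$ once, e.g. with `scipy.linalg.lu_factor`, and reuse the factorization for the transposed solve via `scipy.linalg.lu_solve(..., trans=1)`.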
