AMMI Course 'Geometric Deep Learning' - Lecture 9 (Manifolds & Meshes) - Michael Bronstein

Video recording of the course "Geometric Deep Learning" taught in the African Master in Machine Intelligence in July-August 2021 by Michael Bronstein (Imperial College/Twitter), Joan Bruna (NYU), Taco Cohen (Qualcomm), and Petar Veličković (DeepMind)

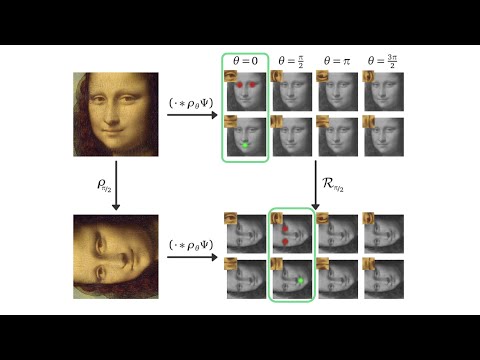

Lecture 9: Euclidean vs Non-Euclidean convolution • Manifolds • Tangent vectors • Riemannian metric • Geodesics • Parallel transport • Exponential map • Convolution on manifolds • Domain deformation • Pushforwards and Pullback • Isometries • Deformation invariance • Scalar and vector fields • Gradient, Divergence, and Laplacian operators • Heat and Wave equations • Manifold Fourier transform • Spectral convolution • Meshes • Discrete Laplacians • ChebNet • Graph Convolutional Network • sGCN • SIGN

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 1 (Introduction) - Michael Bronstein

AMMI Course 'Geometric Deep Learning' - Lecture 1 (Introduction) - Michael Bronstein

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 12 (Applications & Trends) - Mich...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 4 (Geometric Priors II) - Joan Bruna

AMMI Course 'Geometric Deep Learning' - Lecture 2 (Learning in High Dimensions) - Joan Bru...

AMMI Course 'Geometric Deep Learning' - Lecture 12 (Applications & Conclusions) - Mich...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 2 (Learning in High Dimensions) - Joa...

AMMI Course 'Geometric Deep Learning' - Lecture 4 (Geometric Priors II) - Joan Bruna

AMMI Course 'Geometric Deep Learning' - Lecture 7 (Grids) - Joan Bruna

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 7 (Grids) - Joan Bruna

AMMI Course 'Geometric Deep Learning' - Lecture 6 (Graphs & Sets II) - Petar Veličkovi...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 9 (Manifolds) - Michael Bronstein

AMMI 2022 Course 'Geometric Deep Learning' - Seminar 1 (Physics-based GNNs) - Francesco Di...

AMMI Course 'Geometric Deep Learning' - Lecture 10 (Gauges) - Taco Cohen

AMMI 2022 Course 'Geometric Deep Learning' - Seminar 3 (Equivariance in ML) - Geordie Will...

AMMI Course 'Geometric Deep Learning' - Lecture 8 (Groups & Homogeneous spaces) - Taco...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 6 (Graphs & Sets II) - Petar Veli...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 8 (Groups & Homogeneous spaces) -...

AMMI Course 'Geometric Deep Learning' - Lecture 9 (Manifolds & Meshes) - Michael Brons...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 3 (Geometric Priors I) - Taco Cohen

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 11 (Beyond Groups) - Petar Veličković...

ICLR 2021 Keynote - 'Geometric Deep Learning: The Erlangen Programme of ML' - M Bronstein

AMMI Course 'Geometric Deep Learning' - Lecture 11 (Sequences & Time Warping) - Petar ...
