
AMMI Course 'Geometric Deep Learning' - Lecture 11 (Sequences & Time Warping) - Petar Veličković


Video recording of the course "Geometric Deep Learning", taught as part of the African Master's in Machine Intelligence (AMMI) in July-August 2021 by Michael Bronstein (Imperial College/Twitter), Joan Bruna (NYU), Taco Cohen (Qualcomm), and Petar Veličković (DeepMind).

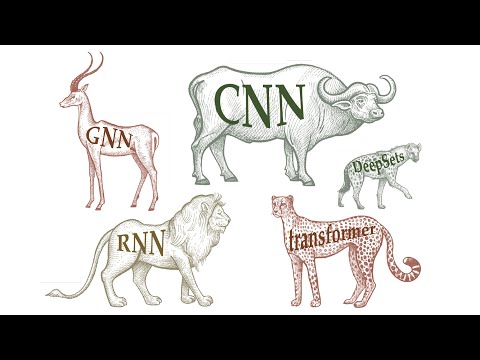

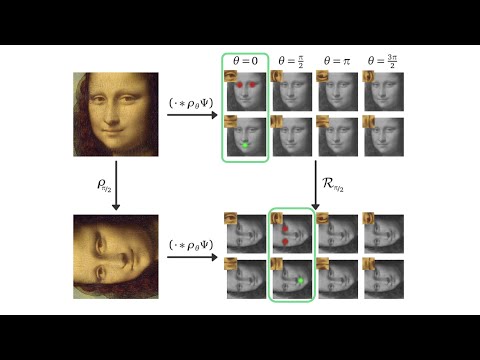

Lecture 11: Static and dynamic domains • Recurrent Neural Networks • Translation invariance • Time warping • Warping the ODE • Discrete Warped RNNs • Gated RNNs • LSTM

AMMI Course 'Geometric Deep Learning' - Lecture 1 (Introduction) - Michael Bronstein

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 1 (Introduction) - Michael Bronstein

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 8 (Groups & Homogeneous spaces) -...

AMMI Course 'Geometric Deep Learning' - Lecture 3 (Geometric Priors I) - Taco Cohen

AMMI 2022 Course 'Geometric Deep Learning' - Seminar 1 (Physics-based GNNs) - Francesco Di...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 11 (Beyond Groups) - Petar Veličković...

AMMI Course 'Geometric Deep Learning' - Lecture 8 (Groups & Homogeneous spaces) - Taco...

AMMI Course 'Geometric Deep Learning' - Lecture 4 (Geometric Priors II) - Joan Bruna

AMMI Course 'Geometric Deep Learning' - Lecture 6 (Graphs & Sets II) - Petar Veličkovi...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 5 (Graphs & Sets) - Petar Veličko...

AMMI Course 'Geometric Deep Learning' - Lecture 12 (Applications & Conclusions) - Mich...

AMMI Course 'Geometric Deep Learning' - Lecture 9 (Manifolds & Meshes) - Michael Brons...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 9 (Manifolds) - Michael Bronstein

AMMI Course 'Geometric Deep Learning' - Lecture 7 (Grids) - Joan Bruna

AMMI Course 'Geometric Deep Learning' - Lecture 10 (Gauges) - Taco Cohen

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 12 (Applications & Trends) - Mich...

AMMI 2022 Course 'Geometric Deep Learning' - Seminar 2 (Subgraph GNNs) - Fabrizio Frasca

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 3 (Geometric Priors I) - Taco Cohen

AMMI Course 'Geometric Deep Learning' - Lecture 5 (Graphs & Sets I) - Petar Veličković...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 6 (Graphs & Sets II) - Petar Veli...

AMMI Course 'Geometric Deep Learning' - Lecture 2 (Learning in High Dimensions) - Joan Bru...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 4 (Geometric Priors II) - Joan Bruna

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 2 (Learning in High Dimensions) - Joa...
