AMMI 2022 Course 'Geometric Deep Learning' - Lecture 2 (Learning in High Dimensions) - Joan Bruna

Video recording of the course "Geometric Deep Learning", taught at the African Master's in Machine Intelligence (AMMI) in July 2022 by Michael Bronstein (Oxford), Joan Bruna (NYU), Taco Cohen (Qualcomm), and Petar Veličković (DeepMind).

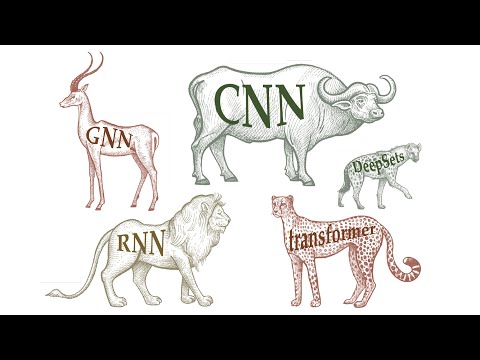

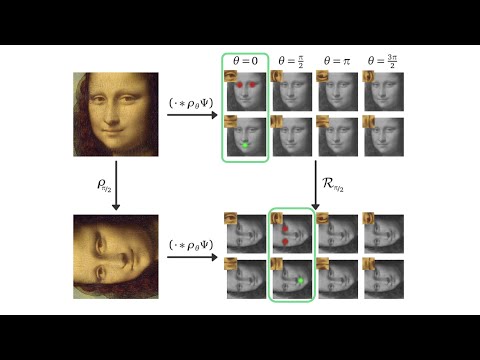

Lecture 2: Basic notions in learning • Challenges of learning in high dimension • Learning Lipschitz functions • Universal approximation

AMMI 2022 Course 'Geometric Deep Learning' - Seminar 1 (Physics-based GNNs) - Francesco Di...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 1 (Introduction) - Michael Bronstein

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 4 (Geometric Priors II) - Joan Bruna

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 11 (Beyond Groups) - Petar Veličković...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 8 (Groups & Homogeneous spaces) -...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 5 (Graphs & Sets) - Petar Veličko...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 3 (Geometric Priors I) - Taco Cohen

AMMI Course 'Geometric Deep Learning' - Lecture 4 (Geometric Priors II) - Joan Bruna

AMMI 2022 Course 'Geometric Deep Learning' - Seminar 2 (Subgraph GNNs) - Fabrizio Frasca

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 2 (Learning in High Dimensions) - Joa...

AMMI 2022 Course 'Geometric Deep Learning' - Seminar 3 (Equivariance in ML) - Geordie Will...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 7 (Grids) - Joan Bruna

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 9 (Manifolds) - Michael Bronstein

AMMI 2022 Course 'Geometric Deep Learning' - Seminar 5 (AlphaFold) - Russ Bates

AMMI Course 'Geometric Deep Learning' - Lecture 1 (Introduction) - Michael Bronstein

AMMI Course 'Geometric Deep Learning' - Lecture 3 (Geometric Priors I) - Taco Cohen

AMMI 2022 Course 'Geometric Deep Learning' - Seminar 4 (Neural Sheaf Diffusion) - Cristian...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 10 (Gauges) - Taco Cohen

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 6 (Graphs & Sets II) - Petar Veli...

AMMI 2022 Course 'Geometric Deep Learning' - Lecture 12 (Applications & Trends) - Mich...

AMMI Course 'Geometric Deep Learning' - Lecture 8 (Groups & Homogeneous spaces) - Taco...

AMMI Course 'Geometric Deep Learning' - Lecture 7 (Grids) - Joan Bruna

AMMI Course 'Geometric Deep Learning' - Lecture 5 (Graphs & Sets I) - Petar Veličković...
