Advanced Hyperparameter Optimization for Deep Learning with MLflow - Maneesh Bhide Databricks

Показать описание

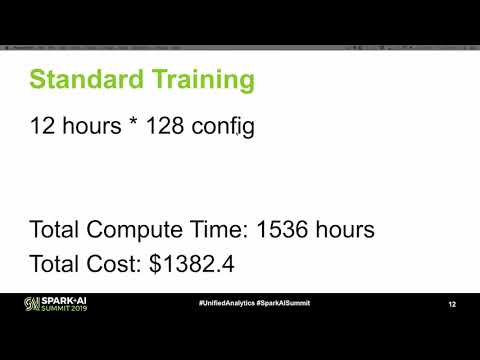

Building on the "Best Practices for Hyperparameter Tuning with MLflow" talk, we will present advanced topics in hyperparameter optimization (HPO) for deep learning, including early stopping, multi-metric optimization, and robust optimization. We will then discuss implementations using open-source tools. Finally, we will discuss how to use MLflow with these tools and techniques to analyze the performance of our models.

About: Databricks provides a unified data analytics platform, powered by Apache Spark™, that accelerates innovation by unifying data science, engineering and business.

Advanced Hyperparameter Optimization for Deep Learning with MLflow - Maneesh Bhide Databricks [0:43:54]

Practical approaches for efficient hyperparameter optimization with Oríon | SciPy 2021 [0:30:59]

How Should you Architect Your Keras Neural Network: Hyperparameters (8.3) [0:14:33]

Advanced Methods for Hyperparameter Tuning [0:07:32]

Mastering Hyperparameter Tuning with Optuna: Boost Your Machine Learning Models! [0:28:15]

Hyperparameter Tuning for Improving Model Accuracy #ai #datascience #learnwithav #hyperparameter [0:00:57]

Hyperparameter Optimization - The Math of Intelligence #7 [0:09:51]

AutoML20: A Modern Guide to Hyperparameter Optimization [0:37:55]

Build a Full Machine Learning Project With Python and XGBoost: Airline Flight Delay Prediction [0:12:56]

Maximize accuracy of your ML model with advanced hyperparameter tuning strategies - AWS [0:52:07]

Bayesian Hyperparameter Optimization for PyTorch (8.4) [0:09:06]

XGBoost's Most Important Hyperparameters [0:06:28]

Simple Methods for Hyperparameter Tuning [0:06:46]

Richard Liaw: A Guide to Modern Hyperparameters Turning Algorithms | PyData LA 2019 [0:38:11]

Better and Faster Hyper Parameter Optimization with Dask | SciPy 2019 | Scott Sievert [0:27:33]

Practical Approaches for Efficient Hyperparameter Optimization [1:14:25]

9b Machine Learning Algorithms Advanced Hyperparameter Tuning of Machine Learning Algorithms [0:15:01]

Master Optuna: Advanced Hyperparameter Tuning for Machine Learning (Step-by-Step) [0:23:30]

Hyperparameters Optimization - Michael Ringenburg & Ben Albrecht [0:18:40]

Meetup Deep Learning Italia 19/05/2020 - Hyperband: Approach to Hyperparameter Optimization [0:41:02]

Week 2 - A Modern Guide to Hyperparameter Optimization - Richard Liaw [0:31:25]

11-785 Spring 2023 Recitation 2: Network Optimization, Hyperparameter Tuning [1:11:59]

Hyperparameters Optimization Strategies: GridSearch, Bayesian, & Random Search (Beginner Friendl... [0:08:02]

Hyperparameter Tuning in Deep Learning by Simran Anand [0:28:43]