Linear Differential Equations & the Method of Integrating Factors

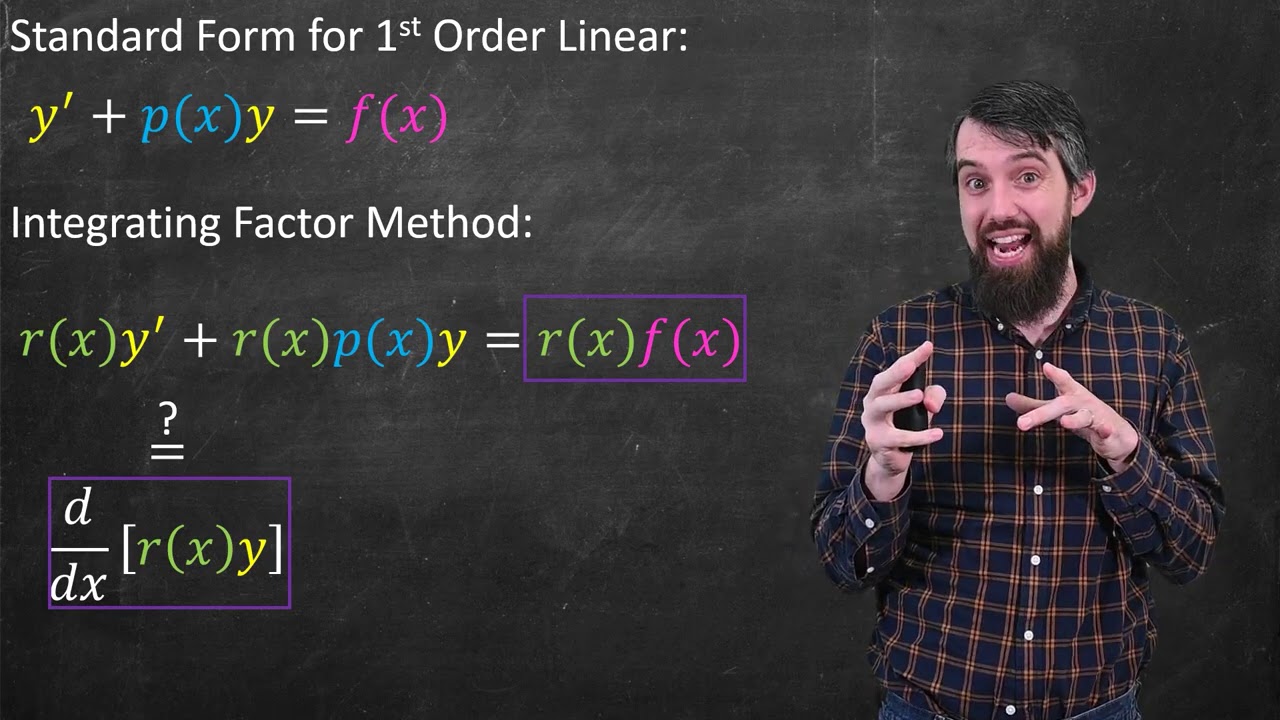

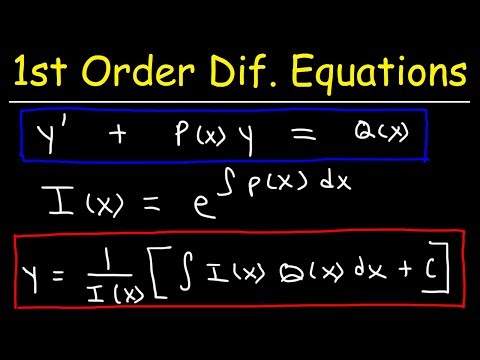

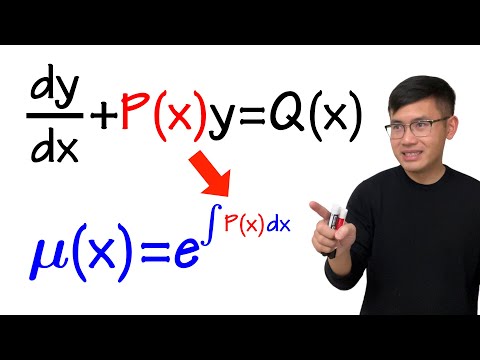

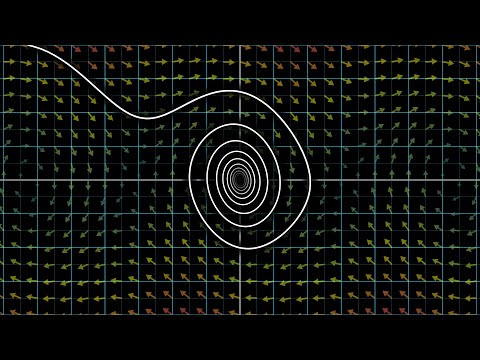

First-order linear differential equations are particularly nice because a method called integrating factors lets us solve every one of them. We will define what makes a differential equation linear, derive the formulas for the integrating factor and the solution, and then discuss the existence and uniqueness theorem that follows from this.
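For reference, the derivation mentioned above can be sketched as follows. The symbols $p$, $q$, $\mu$ and $C$ follow a common textbook convention and are an assumption here; the video may use different letters.

```latex
% Standard form of a first-order linear ODE (assumed notation)
y' + p(x)\,y = q(x)

% Multiply through by an unknown function \mu(x):
\mu\,y' + \mu\,p\,y = \mu\,q

% Ask for the left side to be the product-rule derivative (\mu y)' = \mu y' + \mu' y.
% Matching terms gives a separable equation for \mu:
\mu' = p\,\mu
\quad\Longrightarrow\quad
\mu(x) = e^{\int p(x)\,dx}

% With this choice the equation collapses to (\mu y)' = \mu q, so integrating once:
\mu(x)\,y(x) = \int \mu(x)\,q(x)\,dx + C
\quad\Longrightarrow\quad
y(x) = \frac{1}{\mu(x)}\left(\int \mu(x)\,q(x)\,dx + C\right)
```

The formula only needs the integrals of $p$ and $\mu q$ to exist, which is what leads to the existence and uniqueness statement: if $p$ and $q$ are continuous on an open interval containing $x_0$, the initial value problem $y(x_0) = y_0$ has exactly one solution on that whole interval.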

0:00 Linear ODEs

4:06 Integrating Factors

10:50 Existence & Uniqueness
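As a rough numerical illustration of the integrating factor method covered in these chapters, here is a minimal sketch in plain Python. It assumes the equation is written as y' + p(x) y = q(x) with initial condition y(x0) = y0, and it normalises the integrating factor so that mu(x0) = 1, which gives y(x) = (y0 + ∫ mu(s) q(s) ds) / mu(x) with the integral taken from x0 to x. The function name `solve_linear_ode` and its parameters are hypothetical, chosen only for this example, and the trapezoid rule stands in for the exact integrals one would compute by hand.

```python
import math

def solve_linear_ode(p, q, x0, y0, x, n=2000):
    """Approximate y(x) for y' + p(x) y = q(x), y(x0) = y0.

    Uses the integrating factor mu(s) = exp(integral of p from x0 to s),
    with both integrals approximated by the trapezoid rule on n steps.
    """
    h = (x - x0) / n
    P = 0.0          # running integral of p, so mu = exp(P)
    integral = 0.0   # running integral of mu * q
    s_prev = x0
    f_prev = math.exp(P) * q(s_prev)  # mu(x0) * q(x0), with mu(x0) = 1
    for i in range(1, n + 1):
        s = x0 + i * h
        P += 0.5 * h * (p(s_prev) + p(s))
        f = math.exp(P) * q(s)
        integral += 0.5 * h * (f_prev + f)
        s_prev, f_prev = s, f
    # (mu * y)' = mu * q  =>  mu(x) y(x) - y0 = integral
    return (y0 + integral) / math.exp(P)

if __name__ == "__main__":
    # Example: y' + 2y = 4, y(0) = 0 has exact solution y = 2 - 2 e^{-2x}.
    approx = solve_linear_ode(lambda s: 2.0, lambda s: 4.0, 0.0, 0.0, 1.0)
    exact = 2.0 - 2.0 * math.exp(-2.0)
    print(approx, exact)
```

The two printed values should agree to several decimal places. The sketch only makes sense where p and q are continuous between x0 and x, which matches the hypothesis of the existence and uniqueness theorem.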

First Order Linear Differential Equations

First Order Linear Differential Equation & Integrating Factor (introduction & example)

Linear Differential Equations & the Method of Integrating Factors

How to Solve First Order Linear Differential Equations

Introduction to Linear Differential Equations and Integrating Factors (Differential Equations 15)

Finding particular linear solution to differential equation | Khan Academy

The Method of Integrating Factors for Linear 1st Order ODEs **full example**

Difference between linear and nonlinear Differential Equation|Linear verses nonlinear DE

Differential Equation - System of Equations || B.Sc/M.Sc/CSIR-NET/GATE/IIT-JAM

Second Order Linear Differential Equations

ODE | Linear versus nonlinear

First Order Linear Differential Equations and Largest Interval over which the solution is defined

Differential equations, a tourist's guide | DE1

Learning First Order Linear DE in 30 Minutes!

Calculus 2: Linear Differential Equations (Video #14) | Math with Professor V

Solving First-Order Linear Differential Equations - Introduction with Examples

🔵15 - Linear Differential Equations: Initial Value Problems (Solving Linear First Order ODE's)...

First order, Ordinary Differential Equations.

Solving Linear Differential Equations with an Integrating Factor (Differential Equations 16)

This is why you're learning differential equations

🔵03 - Linear and Non-Linear Differential Equations: Solved Examples

Separable First Order Differential Equations - Basic Introduction

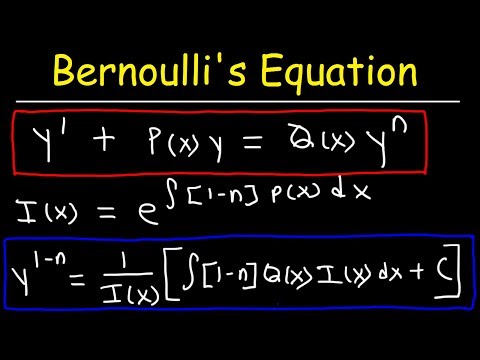

Bernoulli's Equation For Differential Equations

Differential equation introduction | First order differential equations | Khan Academy
