Best Practices Working with Billion-row Tables in Databases

Chapters

Intro 0:00

1. Brute Force Distributed Processing 2:30

2. Working with a Subset of the Table 3:35

2.1 Indexing 3:55

2.2 Partitioning 5:30

2.3 Sharding 7:30

3. Avoid It Altogether (Rethink the Whole Design) 9:10

Summary 11:30
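Chapter 2 names three ways to avoid touching every row. As a rough illustration only (this is not the video's own example), the Python sketch below models each idea on a hypothetical in-memory table of 1,000 rows: a sorted index searched with `bisect`, range partitions keyed by month, and hash-based shard routing with an assumed shard count of 4. The names `ROWS`, `lookup_by_customer`, `rows_in_month`, `shard_for`, and `NUM_SHARDS` are hypothetical; real databases implement these ideas with B-tree indexes, declarative partitioning, and a shard router.

```python
"""Toy sketch: three ways to read only a subset of a very large table.

All names and data are hypothetical and in-memory; real databases use
B-tree indexes, declarative partitioning, and shard routers instead.
"""
import bisect
import hashlib
from collections import defaultdict

# Hypothetical rows: (customer_id, month, amount)
ROWS = [(cid, 1 + cid % 12, cid * 10) for cid in range(1, 1001)]

# 2.1 Indexing: a sorted list of (key, row position) lets us binary-search
# for a key instead of scanning every row (similar in spirit to a B-tree).
INDEX = sorted((row[0], pos) for pos, row in enumerate(ROWS))


def lookup_by_customer(customer_id):
    i = bisect.bisect_left(INDEX, (customer_id, -1))
    hits = []
    while i < len(INDEX) and INDEX[i][0] == customer_id:
        hits.append(ROWS[INDEX[i][1]])
        i += 1
    return hits


# 2.2 Partitioning: split the table by month (a range/list partition key).
PARTITIONS = defaultdict(list)
for row in ROWS:
    PARTITIONS[row[1]].append(row)


def rows_in_month(month):
    # Partition pruning: only the matching partition is read.
    return PARTITIONS.get(month, [])


# 2.3 Sharding: route each customer to one of NUM_SHARDS (hypothetical)
# servers using a stable hash of the shard key.
NUM_SHARDS = 4


def shard_for(customer_id):
    digest = hashlib.sha256(str(customer_id).encode()).digest()
    return int.from_bytes(digest[:8], "big") % NUM_SHARDS


SHARDS = defaultdict(list)
for row in ROWS:
    SHARDS[shard_for(row[0])].append(row)


def lookup_on_shard(customer_id):
    # The router sends the query to exactly one shard, which then
    # only has to search its own (smaller) share of the rows.
    return [r for r in SHARDS[shard_for(customer_id)] if r[0] == customer_id]


if __name__ == "__main__":
    print(lookup_by_customer(42))
    print(len(rows_in_month(6)))
    print(shard_for(42), lookup_on_shard(42))
```

All three return the same row for customer 42; the difference is how much data each approach has to look at to find it. The sketch uses `hashlib` rather than Python's built-in `hash()` so that shard assignment stays stable across processes.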

🎙️Listen to the Backend Engineering Podcast

🏭 Backend Engineering Videos

💾 Database Engineering Videos

🏰 Load Balancing and Proxies Videos

🏛️ Software Architecture Videos

📩 Messaging Systems

Become a Member

Support me on PayPal

Stay Awesome,

Hussein

Best Practices Working with Billion-row Tables in Databases

Querying 100 Billion Rows using SQL, 7 TB in a single table

SQL indexing best practices | How to make your database FASTER!

5 Secrets for making PostgreSQL run BLAZING FAST. How to improve database performance.

From 2.5 million row reads to 1 (optimizing my database performance)

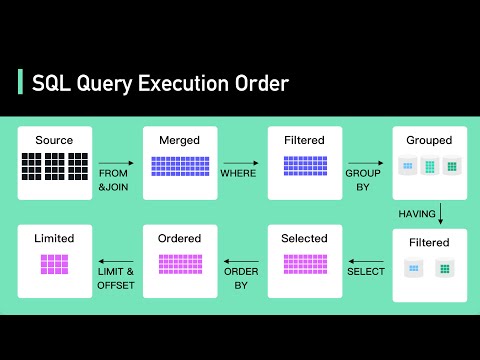

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

Update a Table with Millions of Rows in SQL (Fast)

SQL Server 2019 querying 1 trillion rows in 100 seconds

Optimizing MySQL Very Fast Loading Billion rows from Client to Server

Processing 1 Billion Rows Per Second

Try limiting rows when creating reporting for big data in Power BI

SQL Query Optimization - Tips for More Efficient Queries

10 Million Rows of data Analyzed using Excel's Data Model

STOP doing your SQUATS like this!

Very slow query performance in aws postgresql for a table with 4 billion rows

Best Practices in Designing Tables

Superpowering Tableau for Trillions of Rows

how to load one billion rows into an sql database

CIA Spy EXPLAINS Mossad’s Ruthless Tactics 🫣 | #shorts

The Strongest Muscle In Your Body 🤨 (not what you think)

How Much Does 13,000,000 YouTube Views Pay You? #shorts

5 million + random rows in less than 100 seconds using SQL

Snowflake Data Cloud 288 Billion Rows (10TB) -APOS Live Data Gateway Performance with Large DataSets

HOW TO DO A 1 ARM PUSHUP?
