Processing 1 Billion Rows Per Second

Everybody is talking about Big Data and about processing large amounts of data in real time or close to real time. However, processing a lot of data does not require commercial software or a NoSQL system. PostgreSQL can do exactly what you need and process A LOT of data in real time. During our tests we have seen that crunching 1 billion rows of data in real time is perfectly feasible, practical and definitely useful. This talk shows which things have to be changed inside PostgreSQL and what we learned when processing so much data for analytical purposes.

How Fast can Python Parse 1 Billion Rows of Data?

SQL Server 2019 querying 1 trillion rows in 100 seconds

Java, How Fast Can You Parse 1 Billion Rows of Weather Data? • Roy van Rijn • GOTO 2024

I Parsed 1 Billion Rows Of Text (It Sucked)

Pushing Java to the Limits: Processing a Billion Rows in under 2 Seconds by Thomas Wuerthinger

Data Processing More Than a Billion Rows per Second

Pushing Java to the Limits: Processing a Billion Rows in under 2 Seconds by ROY VAN RIJN

Querying 100 Billion Rows using SQL, 7 TB in a single table

Firebolt presents: 42 Billion Rows of Data in 1.3 Seconds ⚡

Purely Python Code to Preview 10 Billion-Rows.csv Instantly

Run 12 ETL Commands from 10,000 Rows to 1 Billion Rows

How To Load One BILLION Rows into an SQL Database

What would you do with a billion rows?

I loaded 100,000,000 rows into MySQL (fast)

Databases: Database design for handling 1 billion rows and counting

5 Secrets for making PostgreSQL run BLAZING FAST. How to improve database performance.

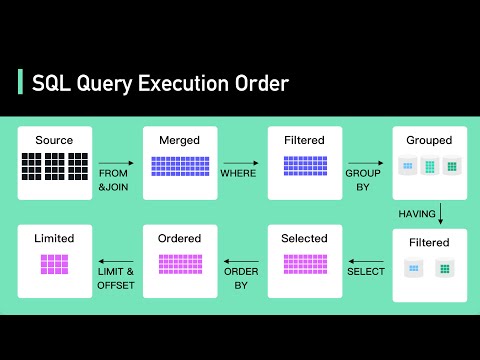

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

Strata Summit 2011: Michael Driscoll, 'One Billion Rows per Second'

How Much Memory for 1,000,000 Threads in 7 Languages | Go, Rust, C#, Elixir, Java, Node, Python

Databases: Can PostgreSQL manage a table with 1.5 billion rows?

API Platform Conference 2024 - Florian Engelhardt - Processing One Billion Rows in PHP

Inserting 10 Million Records in SQL Server with C# and ADO.NET (Efficient way)

Count Millions of Rows Fast with Materialized Views: Databases for Developers: Performance #6
