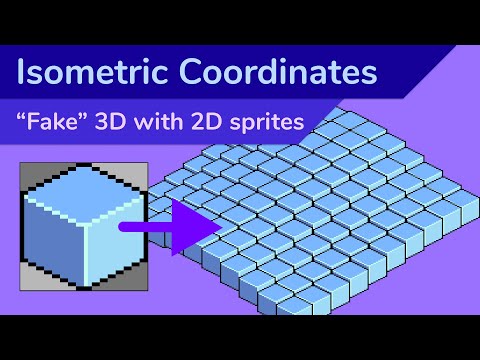

How to Make Isometric Game Assets with AI - ControlNet, Stable Diffusion + Blender Tutorial 2023

How to Install and Use Stable Diffusion (June 2023) - Basic Tutorial

ControlNet WebUI Extension:

ControlNet models:

Ayonimix Model (get V5 and the VAE for this tut!):

Prompt:

[your building description], cozy, playful, beautiful, art by artist, (pastel colors:0.7), vector art, (illustration:1.4), digital painting, 3d render, stylized, painting, gradients, simple design, (centered:1.5), ambient occlusion, (soft shading:0.7), view from above, angular, isometric, orthographic
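The `[your building description]` slot is the only part of the prompt that changes between buildings, so the prompt can be treated as a template. A minimal sketch in Python, assuming you want to generate the prompt string programmatically; the names `PROMPT_TEMPLATE` and `build_prompt` are hypothetical and not part of the tutorial or of any Stable Diffusion tool:

```python
# Hypothetical helper: fills the building description into the prompt
# from this description. The style terms are copied verbatim.
PROMPT_TEMPLATE = (
    "{building}, cozy, playful, beautiful, art by artist, "
    "(pastel colors:0.7), vector art, (illustration:1.4), digital painting, "
    "3d render, stylized, painting, gradients, simple design, (centered:1.5), "
    "ambient occlusion, (soft shading:0.7), view from above, angular, "
    "isometric, orthographic"
)


def build_prompt(building: str) -> str:
    """Return the full prompt for one building description."""
    building = building.strip()
    if not building:
        raise ValueError("building description must not be empty")
    return PROMPT_TEMPLATE.format(building=building)


if __name__ == "__main__":
    # Example descriptions are placeholders, not taken from the video.
    for description in ["small bakery", "stone watchtower"]:
        print(build_prompt(description))
```

Keeping the style terms fixed and swapping only the description is one way to get a consistent look across a set of buildings.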

Negative: cartoon, zombie, disfigured, deformed, b&w, black and white, duplicate, morbid, cropped, out of frame, clone, photoshop, tiling, cut off, patterns, borders, (frame:1.4), symmetry, intricate, signature, text, watermark, fisheye, harsh lighting, shadows
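Terms written as `(term:weight)` follow the attention-weight syntax of the Stable Diffusion WebUI: a weight above 1 strengthens a term and a weight below 1 softens it. The sketch below lists which terms in the two prompts carry an explicit weight. It assumes only the simple, non-nested `(term:weight)` form used here; the function name `weighted_terms` and the regular expression are hypothetical, not the WebUI's own parser:

```python
import re

# Matches "(some term:1.4)" with no nested parentheses. This is a
# simplification for illustration, not the WebUI's real prompt grammar.
WEIGHTED = re.compile(r"\(([^():]+):([0-9]*\.?[0-9]+)\)")

PROMPT = (
    "[your building description], cozy, playful, beautiful, art by artist, "
    "(pastel colors:0.7), vector art, (illustration:1.4), digital painting, "
    "3d render, stylized, painting, gradients, simple design, (centered:1.5), "
    "ambient occlusion, (soft shading:0.7), view from above, angular, "
    "isometric, orthographic"
)

NEGATIVE = (
    "cartoon, zombie, disfigured, deformed, b&w, black and white, duplicate, "
    "morbid, cropped, out of frame, clone, photoshop, tiling, cut off, "
    "patterns, borders, (frame:1.4), symmetry, intricate, signature, text, "
    "watermark, fisheye, harsh lighting, shadows"
)


def weighted_terms(prompt: str) -> dict[str, float]:
    """Map each explicitly weighted term in the prompt to its weight."""
    return {m.group(1).strip(): float(m.group(2)) for m in WEIGHTED.finditer(prompt)}


if __name__ == "__main__":
    print(weighted_terms(PROMPT))
    print(weighted_terms(NEGATIVE))
```

Read this way, the prompt pushes hardest on `centered` and `illustration`, tones down `pastel colors` and `soft shading`, and the negative prompt pushes hardest against `frame`.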

CHAPTERS:

0:00 - Intro

1:39 - Installation

4:00 - Blender

10:43 - Prompting

13:06 - Upscaling

14:36 - Cutting Out in Affinity

15:59 - Cutting Out in Photoshop

16:44 - Making Other Buildings

18:33 - Results

----------------------------------------------

Did you like this vid? Like & Subscribe to this Channel!
