filmov.tv

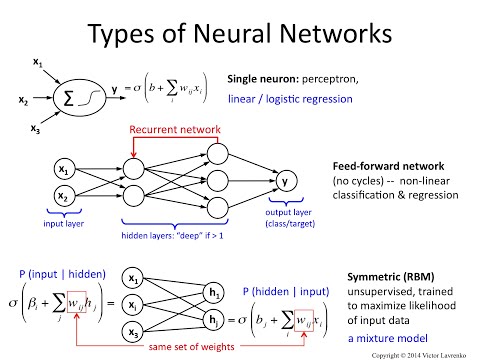

Neural Networks 5: feedforward, recurrent and RBM


Neural Networks 5: feedforward, recurrent and RBM (0:04:56)

Neural Networks Explained in 5 minutes (0:04:32)

Recurrent Neural Networks (RNNs), Clearly Explained!!! (0:16:37)

4.1) How Feedforward differs from Recurrent Neural Network? (0:01:07)

Neural Network In 5 Minutes | What Is A Neural Network? | How Neural Networks Work | Simplilearn (0:05:45)

Recurrent Neural Networks - Ep. 9 (Deep Learning SIMPLIFIED) (0:05:21)

Feedforward Neural Network (0:08:28)

ANN vs CNN vs RNN | Difference Between ANN CNN and RNN | Types of Neural Networks Explained (0:05:39)

CSC401 2511 W24 L6 Machine Translation (2 of 3) 5 Feb 2024 (0:50:01)

Feedforward Neural Network | Lecture 5 | Deep Learning (1:29:34)

What are Convolutional Neural Networks (CNNs)? (0:06:21)

Recurrent Neural Networks | RNN LSTM Tutorial | Why use RNN | On Whiteboard | Compare ANN, CNN, RNN (0:22:21)

How Feed Forward Neural Network works (0:00:22)

15. Feed Forward Neural Networks vs. RNN - Quick Session (0:03:08)

But what is a neural network? | Chapter 1, Deep learning (0:18:40)

MIT 6.S191: Recurrent Neural Networks, Transformers, and Attention (1:01:31)

MIT 6.S191 (2023): Recurrent Neural Networks, Transformers, and Attention (1:02:50)

Illustrated Guide to Recurrent Neural Networks: Understanding the Intuition (0:09:51)

The Essential Main Ideas of Neural Networks (0:18:54)

Why Transformer over Recurrent Neural Networks (0:01:00)

Feed Forward Network In Artificial Neural Network Explained In Hindi (0:03:54)

Feedforward Neural Network Basics (0:04:45)

Backpropagation in Neural Networks | Back Propagation Algorithm with Examples | Simplilearn (0:06:48)

Feedforward neural network in PyTorch (0:11:36)