02 How Spark Works - Driver & Executors | How Spark Divides a Job into Stages | What Is Shuffle in Spark

This video explains how Spark works. It covers what the driver and executors are, how Spark divides a job into stages and tasks, and how Spark processes data in parallel. It also explains what cores and tasks are and what a data shuffle is.
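The sketch below is a minimal toy model of the ideas in this description. It is not Spark's actual scheduler. It assumes a hypothetical linear pipeline of operations (`Op`, `split_into_stages`, `total_tasks` and `task_waves` are illustrative names, not Spark APIs). Each wide operation, one that needs a shuffle, starts a new stage. Each stage runs one task per partition. Each executor core runs one task at a time, and every task is assumed to take equal time.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Op:
    """One transformation in a hypothetical linear pipeline (not a Spark class)."""
    name: str
    wide: bool  # True = needs a shuffle (e.g. groupBy); False = narrow (e.g. filter)


def split_into_stages(ops):
    """Toy rule: every wide op starts a new stage; narrow ops join the current one."""
    stages = [[]]
    for op in ops:
        if op.wide and stages[-1]:
            stages.append([])  # shuffle boundary -> start a new stage
        stages[-1].append(op.name)
    return stages


def total_tasks(partitions_per_stage):
    """One task per partition, summed over all stages."""
    return sum(partitions_per_stage)


def task_waves(num_tasks, executors, cores_per_executor):
    """Rounds of tasks needed, assuming one task per core and equal task durations."""
    slots = executors * cores_per_executor
    return math.ceil(num_tasks / slots)


if __name__ == "__main__":
    pipeline = [
        Op("read", False), Op("filter", False), Op("select", False),
        Op("groupBy", True), Op("count", False), Op("orderBy", True),
    ]
    stages = split_into_stages(pipeline)
    print(stages)  # [['read', 'filter', 'select'], ['groupBy', 'count'], ['orderBy']]
    # Hypothetical partition counts for the three stages.
    print(total_tasks([8, 4, 4]))  # 16
    print(task_waves(200, executors=4, cores_per_executor=5))  # 10
```

With 4 executors of 5 cores each, 20 tasks can run at once, so 200 equal-length tasks need 10 rounds. Real Spark scheduling is more dynamic: a free core picks up the next pending task as soon as it finishes one.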

Chapters

00:00 - Introduction

00:25 - How Spark Works?

01:47 - How Spark Divides a Job into Stages?

02:06 - What Is a Shuffle?

03:01 - What Is the Driver?

03:30 - What Are Executors?

04:02 - Understanding the Complete Workflow
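The shuffle chapter covers how data moves between stages. As a rough illustration, the sketch below hash-partitions `(key, value)` pairs from several input partitions so that all values for a given key land in the same output partition. Each output partition can then be aggregated on its own. The function names are hypothetical. CRC32 is a deterministic stand-in chosen for this example and is not the hash function Spark actually uses.

```python
import zlib
from collections import defaultdict


def target_partition(key, num_partitions):
    """Pick the output partition for a key.

    Assumption: CRC32 is used only as a deterministic stand-in for Spark's own hash.
    """
    return zlib.crc32(str(key).encode("utf-8")) % num_partitions


def shuffle(map_partitions, num_partitions):
    """Route every (key, value) pair so that all values for a key end up together."""
    reduce_partitions = [defaultdict(list) for _ in range(num_partitions)]
    for partition in map_partitions:
        for key, value in partition:
            reduce_partitions[target_partition(key, num_partitions)][key].append(value)
    return [dict(p) for p in reduce_partitions]


def sum_by_key(reduce_partitions):
    """Each output partition aggregates only its own keys; no further data movement."""
    return [{k: sum(vs) for k, vs in p.items()} for p in reduce_partitions]


if __name__ == "__main__":
    # Three hypothetical input partitions of (word, 1) pairs.
    inputs = [
        [("spark", 1), ("driver", 1)],
        [("spark", 1), ("executor", 1)],
        [("driver", 1), ("spark", 1)],
    ]
    shuffled = shuffle(inputs, num_partitions=2)
    for i, part in enumerate(sum_by_key(shuffled)):
        print(i, part)
```

Which output partition each word lands in depends on the hash. The guarantee that matters is that each key appears in exactly one output partition. That is why an aggregation like a word count can finish after the shuffle without moving data again.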

The series provides a step-by-step guide to learning PySpark, the Python API for Apache Spark, a popular open-source distributed computing framework used for big data processing.

New video every 3 days ❤️

#spark #pyspark #python #dataengineering

02 How Spark Works - Driver & Executors | How Spark divide Job in Stages | What is Shuffle in Sp...

02 How Spark Streaming Works

What exactly is Apache Spark? | Big Data Tools

Learn Apache Spark in 10 Minutes | Step by Step Guide

Spark architecture explained!!🔥

Spark Architecture in 3 minutes| Spark components | How spark works

Working with different Sources and Sinks | Building our 2nd Spark Streaming Application

CRANKSHAFT TIMING TRICK

Why Hard Work Always Gets Noticed

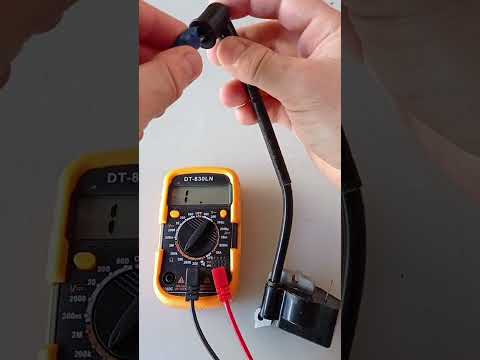

how to test ignition coil

O2 sensor anti fouler fix

Discover if Spark Plug Non-Foulers Actually Work and Why!

Vehicle A/C not working? Check this pressure switch first! #yukon #automobile #shorts

Experienced Driver on Tight Parking 🤠😎😎 #Shorts

Engine Doesn't Start: Check These FUSES #Shorts #Engine #DoesNotStart #Fuses

Easiest Way to Test your Starter

Symptoms of a bad alternator p1 #diy

The Easiest Way To Test Your Relay - Don't Miss This!

Apache Spark: Cluster Computing with Working Sets

Here’s Why Your Car Shuts Off Randomly

How to fix a p0420 without replacing catalytic converter

Crankshaft Position Sensor Location

If your fuel pump leaves you stranded...try this Quick Tip!

If you discover this in your Throttle Body you have problems
