filmov.tv

Kappa - SPSS (part 1)

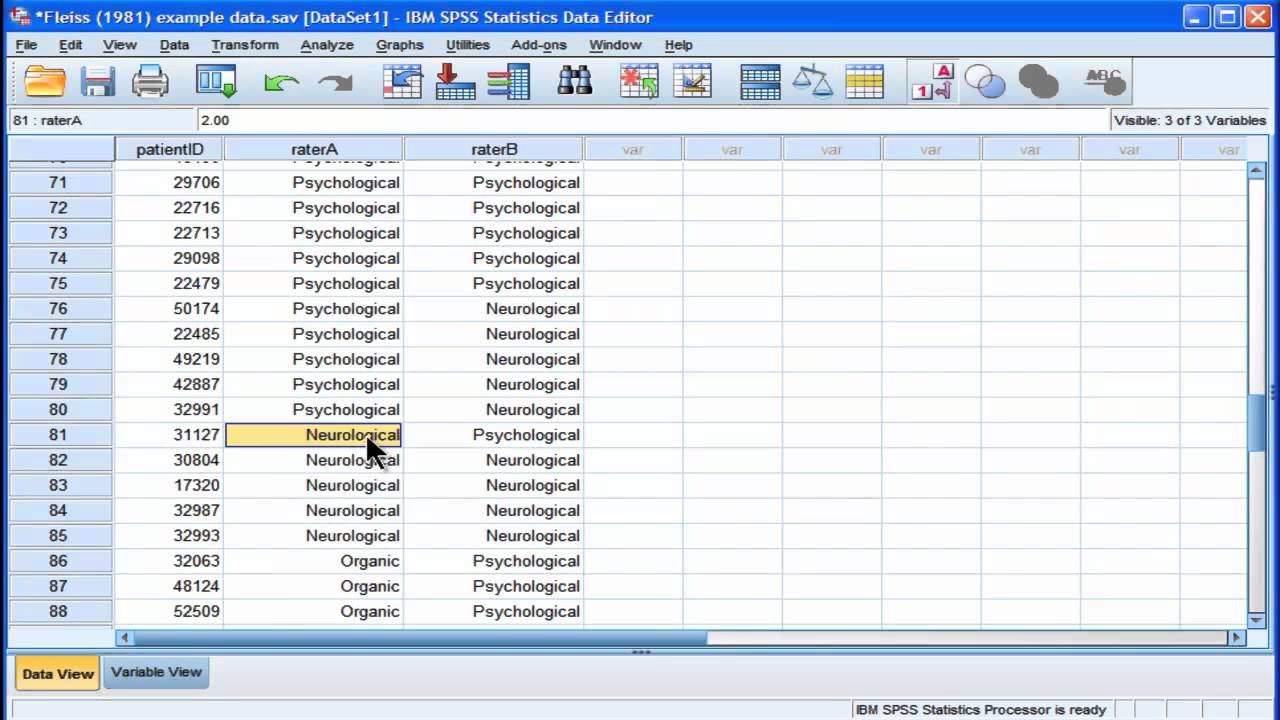

I demonstrate how to perform and interpret a Kappa analysis (a.k.a. Cohen's Kappa) in SPSS. I also demonstrate why Kappa is more useful than the intuitive, simpler approach of calculating the percentage of agreement between two raters.
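
To make the contrast with percentage agreement concrete outside SPSS, here is a minimal Python sketch (not the procedure shown in the video) of the standard definition kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from each rater's marginal proportions. The function names and the 20-item yes/no ratings are hypothetical, chosen so that the raters agree on 80% of items while kappa comes out slightly negative.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Proportion of items on which both raters chose the same category."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters: (p_o - p_e) / (1 - p_e)."""
    n = len(rater_a)
    p_o = percent_agreement(rater_a, rater_b)  # observed agreement
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    # Chance agreement: for each category, multiply the two raters'
    # marginal proportions, then sum over categories.
    p_e = sum((counts_a[c] / n) * (counts_b[c] / n) for c in categories)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings of 20 items: both raters say "yes" 18 times,
# but their two "no" ratings fall on different items.
rater_a = ["yes"] * 18 + ["no"] * 2
rater_b = ["yes"] * 16 + ["no"] * 2 + ["yes"] * 2

print(f"percent agreement: {percent_agreement(rater_a, rater_b):.0%}")  # 80%
print(f"Cohen's kappa: {cohens_kappa(rater_a, rater_b):.3f}")  # -0.111
```

Because both raters say "yes" 90% of the time, chance alone predicts 0.9 x 0.9 + 0.1 x 0.1 = 82% agreement, so the observed 80% is no better than chance. This is the situation where percentage agreement looks reassuring while kappa does not.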

Kappa Measure of Agreement in SPSS

Estimating Inter-Rater Reliability with Cohen's Kappa in SPSS

SPSS Tutorial: Cohen's Kappa

Cohen’s Kappa in SPSS.

How to Use SPSS-Kappa Measure of Agreement

Fleiss Kappa [Simply Explained]

StatHand - Interpreting Cohen's kappa in SPSS

How to calculate Kappa Statistic? Where to use Kappa? How to interpret and calculate in SPSS?

Installing kappa in SPSS 23

Cohen's Kappa on SPSS - By Rachel Bromnick

Inter-Rater Reliability with Cohen's Kappa in SPSS

Calculating Fleiss' Kappa in SPSS (Version 26 and later) - Analyzing Data in SPSS (116)

Interrater reliability test using KAPPA statistic with SPSS ✅💯

Cohen's Kappa (Inter-Rater-Reliability)

SPSS Tutorial: Inter and Intra rater reliability (Cohen's Kappa, ICC)

Calculating Cohen's Kappa in SPSS - Analyzing Data in SPSS (70)

Calculating Fleiss' Kappa in SPSS (Version 25 and earlier) - Analyzing Data in SPSS (71)

16- How to Use SPSS Calculating sensitivity%, specificity% (part 1)

Inter rater reliability using Fleiss Kappa

Determining Inter-Rater Reliability with the Intraclass Correlation Coefficient in SPSS

Kappa Coefficient

StatHand - Calculating Cohen's kappa in SPSS

Calculating Confidence Intervals for Cohen's Kappa in SPSS (SPSS 26 and older)
