SPSS Tutorial: Inter and Intra rater reliability (Cohen's Kappa, ICC)

*Sorry for the low resolution of the screen recording during the SPSS calculations.

Interpretation reference: Portney LG, Watkins MP (2000). Foundations of Clinical Research: Applications to Practice. Prentice Hall Inc., New Jersey. ISBN 0-8385-2695-0, pp. 560-567.

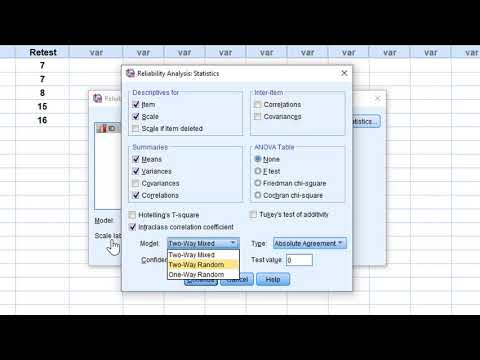

In this video I discuss the concepts and assumptions of two different reliability (agreement) statistics: Cohen's kappa (for 2 raters using categorical data) and the intraclass correlation coefficient, ICC (for 2 or more raters using continuous data). I also show how to calculate the confidence interval limits for kappa and the standard error of measurement (SEM) for the ICC.
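For readers who want to check the arithmetic outside SPSS, here is a minimal Python sketch of the calculations mentioned above. It is an illustration under stated assumptions, not necessarily the exact procedure shown in the video: the confidence interval uses a common large-sample approximation for the standard error of kappa, the SEM uses the widely cited formula SD × √(1 − ICC), and all function names and the example ratings are hypothetical.

```python
import math
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Return (kappa, observed agreement, chance agreement) for two raters."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equally long, non-empty rating lists")
    n = len(rater_a)
    # Proportion of subjects on which the two raters gave the same category.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's marginal counts.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(count_a[c] * count_b[c] for c in count_a) / n ** 2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e), p_o, p_e


def kappa_confidence_interval(kappa, p_o, p_e, n, z=1.96):
    """Approximate CI limits (assumed large-sample standard error)."""
    se = math.sqrt(p_o * (1 - p_o) / (n * (1 - p_e) ** 2))
    return kappa - z * se, kappa + z * se


def standard_error_of_measurement(sd, icc):
    """SEM = SD * sqrt(1 - ICC), with SD the standard deviation of the scores."""
    return sd * math.sqrt(1 - icc)


# Hypothetical ratings of 50 subjects by two raters.
rater_a = ["yes"] * 25 + ["no"] * 25
rater_b = ["yes"] * 20 + ["no"] * 5 + ["yes"] * 10 + ["no"] * 15

kappa, p_o, p_e = cohen_kappa(rater_a, rater_b)
lower, upper = kappa_confidence_interval(kappa, p_o, p_e, len(rater_a))
print(f"kappa = {kappa:.2f}, 95% CI = ({lower:.2f}, {upper:.2f})")

# Hypothetical SD of 10 score units and ICC of 0.84.
print(f"SEM = {standard_error_of_measurement(10, 0.84):.2f}")
```

In this made-up example the raters agree on 35 of 50 subjects (observed agreement 0.70) against a chance agreement of 0.50, which gives a kappa of about 0.40. The ICC itself is taken as an input here; it would come from the SPSS reliability output.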

Determining Inter-Rater Reliability with the Intraclass Correlation Coefficient in SPSS

How to Use SPSS: Intra Class Correlation Coefficient

Calculating the Intraclass Correlation Coefficient in SPSS - Analyzing Data in SPSS (72)

Inter rater reliability using SPSS

Reliability using ICC in SPSS

Intraclass Correlations

Test-retest and interrater reliability on SPSS

Selecting Raters using the Intraclass Correlation Coefficient in SPSS

StatHand - Interpreting an intraclass correlation coefficient for inter-rater reliability in SPSS

StatHand - Calculating an intraclass correlation coefficient for inter-rater reliability in SPSS

Inter-Rater Reliability with Cohen's Kappa in SPSS

How to use SPSS for ICC calculation

StatHand - Interpreting an intraclass correlation coefficient for test-retest reliability in SPSS

Estimating Inter-Rater Reliability with Cohen's Kappa in SPSS

StatHand - Calculating an intraclass correlation coefficient for test-retest reliability in SPSS

Kendall's Coefficient of Concordance (Kendall's W) in SPSS

Kappa Measure of Agreement in SPSS

Intraclass correlation

Reliability 4: Cohen's Kappa and inter-rater agreement

Intraclass Correlation 2

Interrater reliability test using KAPPA statistic with SPSS ✅💯

Tutorial: Intraclass Correlation Coefficient (ICC) Reliability Test Using SPSS 23

Split-Half Reliability and the Spearman-Brown Coefficient using SPSS
