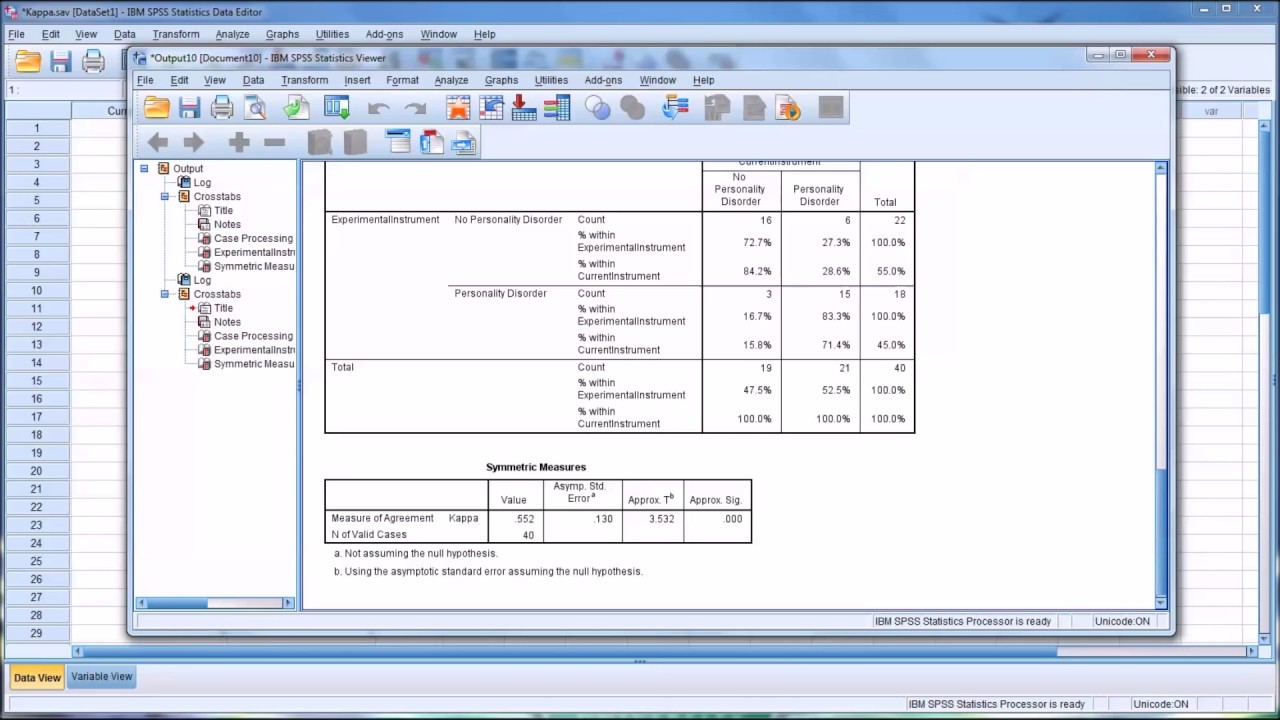

Estimating Inter-Rater Reliability with Cohen's Kappa in SPSS

This video demonstrates how to estimate inter-rater reliability with Cohen's Kappa in SPSS and reviews how to calculate sensitivity and specificity.
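For readers who want to check the arithmetic outside SPSS, here is a minimal Python sketch of the three quantities named in the description. It is not taken from the video: the function names and the 2x2 counts are hypothetical, and it assumes rows hold rater A's classifications and columns hold rater B's, with rater B treated as the reference standard for sensitivity and specificity.

```python
def cohen_kappa(table):
    """Cohen's kappa for a square agreement table (rows: rater A, columns: rater B)."""
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: proportion of cases on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n
    # Agreement expected by chance, from the row and column totals.
    row_tot = [sum(row) for row in table]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2
    return (p_o - p_e) / (1 - p_e)


def sensitivity_specificity(tp, fp, fn, tn):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical counts, for illustration only.
# Row 0 / column 0 = "positive", row 1 / column 1 = "negative".
table = [[20, 5],
         [10, 15]]

kappa = cohen_kappa(table)
sens, spec = sensitivity_specificity(
    tp=table[0][0], fp=table[0][1], fn=table[1][0], tn=table[1][1]
)
print(f"kappa={kappa:.2f} sensitivity={sens:.2f} specificity={spec:.2f}")
```

With these made-up counts, observed agreement is 0.70 and chance agreement is 0.50, so kappa comes out at about 0.40. Sensitivity is 20/30 and specificity is 15/20.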

Estimating Inter-Rater Reliability with Cohen's Kappa in SPSS

Cohen's Kappa (Inter-Rater-Reliability)

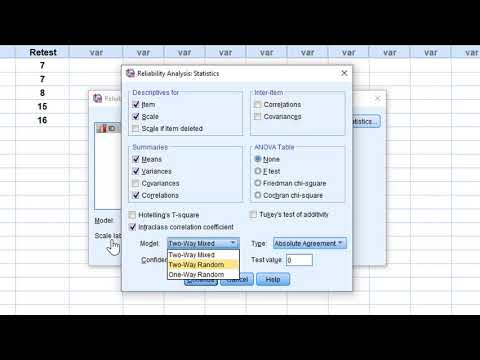

Determining Inter-Rater Reliability with the Intraclass Correlation Coefficient in SPSS

Reliability 4: Cohen's Kappa and inter-rater agreement

Kappa Value Calculation | Reliability

Weighted Cohen's Kappa (Inter-Rater-Reliability)

Calculating and Interpreting Cohen's Kappa in Excel

Inter-Rater Reliability with Cohen's Kappa in SPSS

Inter rater reliability using Fleiss Kappa

SPSS Tutorial: Inter and Intra rater reliability (Cohen's Kappa, ICC)

Kappa Measure of Agreement in SPSS

How Do I Quantify Inter-Rater Reliability? : Qualitative Research Methods

Cohens Kappa (Inter-Rater-Reliabilität)

Inter rater reliability using SPSS

Inter-rater reliability analysis using McNemar test and Cohen's Kappa in SPSS

Test-retest and interrater reliability on SPSS

Ordinal data: Krippendorff alpha inter-rater reliability test

Cohen's Kappa: Inter-rater Agreement Score for Categorical Items

Kappa coefficient: Measures of Reliability | Cohen's Kappa | Test of Reliability, 2 Raters

How to calculate Kappa Statistic? Where to use Kappa? How to interpret and calculate in SPSS?

Inter-rater Reliability using Cohens and Weighted Kappa and Intra Class Correlation Coefficient

Dr. Eli Lieber Discusses Cohen's Kappa | Training Center

Reliability using ICC in SPSS

Using AgreeStat 360 to analyze your inter-rater reliability data. Compute Cohen's Kappa, Gwet A...

Комментарии

0:07:35

0:07:35

0:11:05

0:11:05

0:08:08

0:08:08

0:18:25

0:18:25

0:03:29

0:03:29

0:11:56

0:11:56

0:11:23

0:11:23

0:02:57

0:02:57

0:07:24

0:07:24

0:22:41

0:22:41

0:04:42

0:04:42

0:05:00

0:05:00

0:11:29

0:11:29

0:03:46

0:03:46

0:12:00

0:12:00

0:04:15

0:04:15

0:04:27

0:04:27

0:03:14

0:03:14

0:05:09

0:05:09

0:05:55

0:05:55

0:12:37

0:12:37

0:05:47

0:05:47

0:05:20

0:05:20

0:01:24

0:01:24