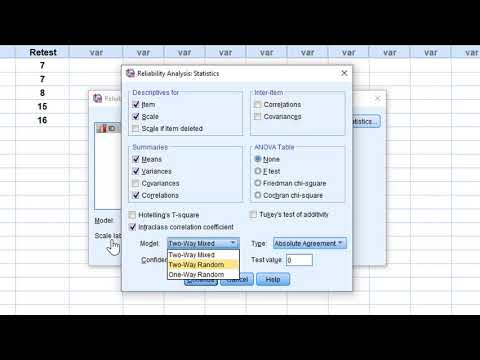

Determining Inter-Rater Reliability with the Intraclass Correlation Coefficient in SPSS

This video demonstrates how to determine inter-rater reliability with the intraclass correlation coefficient (ICC) in SPSS. Interpretation of the ICC as an estimate of inter-rater reliability is reviewed.
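For readers without SPSS, the same kind of estimate can be sketched in plain Python. This is a minimal sketch, not the procedure shown in the video: it assumes a two-way random-effects model with absolute agreement and single measures (often written ICC(2,1)), and the video may select a different model in SPSS. The function name `icc_two_way_random_single` and the ratings matrix are illustrative and are not taken from the video.

```python
def icc_two_way_random_single(ratings):
    """Estimate ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` is a list of rows, one row per rated subject; each row holds
    one score per rater. Every subject must be scored by every rater.
    """
    n = len(ratings)                      # number of subjects
    k = len(ratings[0]) if n else 0       # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete table with >= 2 subjects and >= 2 raters")

    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares (no replication).
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)   # between raters
    ss_error = ss_total - ss_rows - ss_cols                       # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC is undefined when the ratings have no variance")
    return (ms_rows - ms_error) / denominator


# Illustrative data: 6 subjects (rows) scored by 4 raters (columns).
example_ratings = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
icc = icc_two_way_random_single(example_ratings)
print(f"ICC(2,1) = {icc:.3f}")
```

Values near 1 indicate that raters assign nearly identical scores to each subject; values near 0 indicate that most of the variance comes from rater differences and noise rather than from the subjects. The absolute-agreement form used here penalizes raters who are systematically harsher or more lenient, which a consistency-type ICC would not.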

Cohen's Kappa (Inter-Rater-Reliability)

Estimating Inter-Rater Reliability with Cohen's Kappa in SPSS

How Do I Quantify Inter-Rater Reliability? : Qualitative Research Methods

SPSS Tutorial: Inter and Intra rater reliability (Cohen's Kappa, ICC)

Kappa Value Calculation | Reliability

What is Inter-Rater Reliability? : Qualitative Research Methods

Inter-rater reliability - Intro to Psychology

Common Issues With Inter-Rater Reliability: Qualitative Research Methods

Inter rater reliability using SPSS

Reliability 4: Cohen's Kappa and inter-rater agreement

Inter-Rater Reliability Method (Part 11 of the Course) | www.pietutors.com

Interrater Reliability with MINT

Inter-Rater Reliability

Ordinal data: Krippendorff alpha inter-rater reliability test

inter rater reliability

Inter-Rater Reliability Vocabulary

3 Types of Reliability (Test-Retest, Interrater, Internal)- Psychology Research Methods

Screening Studies and Inter-Rater Reliability - Straight to the Point (brief lecture)

Inter rater reliability using Fleiss Kappa

Test-retest and interrater reliability on SPSS

Fleiss Kappa [Simply Explained]

Inter-rater Reliability- Consistent Evaluation in a Clinical Environment

StatHand - Calculating an intraclass correlation coefficient for inter-rater reliability in SPSS
