Sympathy For The Devil (Linear Phase for Crossovers and Oversampling)


In which I clarify the seeming contradiction in my last video, and explain why I typically go with linear phase crossovers and oversampling filters, despite the diabolical ringing.

Affiliate links: if you make a purchase using one of the links below I'll get a small commission. You won't pay any extra.

FabFilter Pro-MB (Gear4music)

FabFilter Pro-Q3 (Gear4music)

FabFilter Volcano3 (Gear4music)

FabFilter Saturn2 (Gear4music)

Video edited with VEGAS Pro 17:

(affiliate link)


Sympathy for the devil - uke cover

Oversample Everything! Reaper FX and FX Chain Oversampling

SYMPATHY FOR THE DEVIL cover acoustic remake The Rolling Stones tribute James Graff NJ USA Mart E.U.

Particle physicists make wishlist. I'm underwhelmed.

Get Back In Line

How Diverse Can Your Music Be Before It Hurts Your Promotions?

The Doors, The End - A Classical Musician’s First Listen and Reaction

Stryper - Yahweh

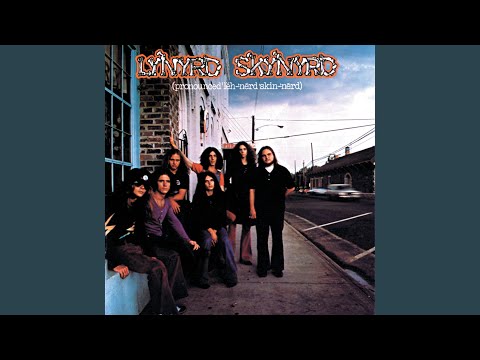

Free Bird

Konka - War With The Devil (DDD080)

Golden Earring - Radar Love (Official Music Video [HD])

In Pursuit of Poetry: A Discovery - Geoffrey Dunn WebinART

Comedian Simon Evans Learns the Life-Changing Truth About His Dad

STS9-Sympathy for the Devil& When The Dust Settles

Magic Death Eye Compressors

E diela shqiptare - Shihemi në gjyq! (11 mars 2018)

Aansplaining by Karthik Kumar - Full Show | Karthik Kumar

CLF 2023: THE WORLD Simon Sebag Montefiore with Kate Bennett

Solo Over A Synth #drums #drummer #drummers #drumming #drumlife #drummerlife #drumlessons #epic

NAR: Kenneth Hagin and T. L. Osborn - Episode 48 William Branham Historical ResearchResearch Podcast

What did we do to Justin Bieber?

Tramp Stamp Title Sequence

Editing Pat Garrett and Billy the Kid
