filmov

tv

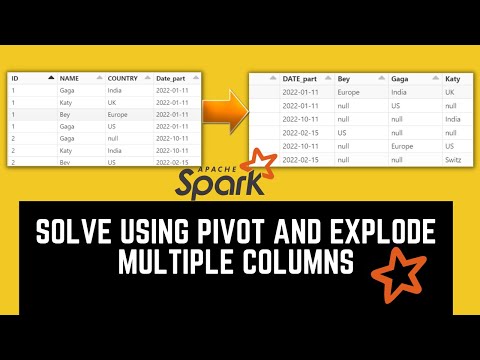

How to Explode and Pivot an Array Column in Databricks SQL

Learn how to efficiently `explode` array columns and dynamically `pivot` them into separate columns using Databricks SQL with a step-by-step guide.

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: I need a databricks sql query to explode an array column and then pivot into dynamic number of columns based on the number of values in the array

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

Introduction

Working with JSON data in Apache Spark, especially on Databricks, can sometimes be challenging. One common scenario is when you have columns representing lists (arrays of values) and you want to break them down into individual columns. This is often referred to as exploding the array and pivoting it into a dynamic table format.

In this post, we'll take an array column from JSON data and spread its values across separate columns, one per array position, with the number of columns driven by the longest array.

Problem Statement

Imagine you have JSON data with a location column containing arrays, like the following:

["USA", "China", "India", "UK"]

["Nepal", "China", "India", "UK", "Japan"]

We want to convert this into a table where each array position becomes its own column, so the number of columns matches the length of the longest array.
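Taken literally, the explode-and-pivot approach from the title can be sketched with the DataFrame API. `posexplode` emits one row per array element together with its position, and `pivot` turns those positions back into columns. This is a minimal sketch under stated assumptions, not the method used in the steps below. It assumes Spark 3.x, and the following are hypothetical: the `id` row key (which `pivot` needs to regroup the exploded rows), the `ExplodeAndPivot` object, and its `run` helper.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, first, posexplode}

object ExplodeAndPivot {
  def run(spark: SparkSession): DataFrame = {
    import spark.implicits._

    // Sample arrays from the problem statement; "id" is a hypothetical row key
    // that pivot() needs in order to regroup the exploded rows.
    val df = Seq(
      (1, Seq("USA", "China", "India", "UK")),
      (2, Seq("Nepal", "China", "India", "UK", "Japan"))
    ).toDF("id", "location")

    // Explode: one row per element, with its 0-based index in "pos".
    val exploded = df
      .select(col("id"), posexplode(col("location")).as(Seq("pos", "value")))
      .withColumn("position", col("pos") + 1) // 1-based, to match loc_1, loc_2, ...

    // Pivot: each distinct position becomes a column (sorted numerically);
    // positions a row does not have are filled with null.
    val pivoted = exploded
      .groupBy("id")
      .pivot("position")
      .agg(first("value"))
      .orderBy("id")

    // Rename the pivoted columns "1", "2", ... to loc_1, loc_2, ...
    pivoted.toDF(pivoted.columns.map(c => if (c == "id") c else s"loc_$c").toSeq: _*)
  }

  def main(args: Array[String]): Unit = {
    // master("local[*]") is for local runs; getOrCreate() reuses an already running session.
    val spark = SparkSession.builder().appName("explode-pivot").master("local[*]").getOrCreate()
    run(spark).show(truncate = false)
    spark.stop()
  }
}
```

Pivoting on the numeric position keeps the columns in order (1, 2, ..., 10). Pivoting on strings like `loc_10` would instead sort them lexically. The trade-offs compared with the steps below are an extra shuffle for the `groupBy` and the need for a row key.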

Proposed Solution

Here's a step-by-step breakdown of how to achieve this. The solution uses Scala together with Spark SQL functions.

Step 1: Set Up Your Data

First, create a DataFrame that holds the array column:

[[See Video to Reveal this Text or Code Snippet]]
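The original snippet for this step is only shown in the video. Here is a minimal sketch that loads the sample arrays from the problem statement with `spark.read.json`, which infers `location` as an `array<string>` column. It assumes each JSON record has the shape `{"location": [...]}`. That record shape, the `SetUpData` object, and its `sampleData` helper are hypothetical.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object SetUpData {
  // Parses JSON records (one per string) into a DataFrame.
  // The {"location": [...]} record shape is an assumption for this sketch.
  def sampleData(spark: SparkSession): DataFrame = {
    import spark.implicits._
    val json = Seq(
      """{"location": ["USA", "China", "India", "UK"]}""",
      """{"location": ["Nepal", "China", "India", "UK", "Japan"]}"""
    ).toDS()
    // Schema inference turns the JSON array into an array<string> column.
    spark.read.json(json)
  }

  def main(args: Array[String]): Unit = {
    // master("local[*]") is for local runs; getOrCreate() reuses an already running session.
    val spark = SparkSession.builder().appName("array-setup").master("local[*]").getOrCreate()
    val df = sampleData(spark)
    df.printSchema()
    df.show(truncate = false)
    spark.stop()
  }
}
```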

Step 2: Determine Maximum Array Size

Before expanding the array, find the maximum array size in the DataFrame. That length determines how many output columns to create and therefore which column names to generate.

[[See Video to Reveal this Text or Code Snippet]]
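Here is a minimal sketch of this step, assuming a DataFrame with an `array<string>` column named `location`. `size` gives each array's length and `max` picks the largest. The result is then used to build a DDL schema string with one field per position. These are illustrative choices, not the author's code: the `MaxArraySize` object, its helpers, and the `loc_` prefix (taken from the expected output).

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, max, size}

object MaxArraySize {
  // Length of the longest array in `arrayCol`.
  // Assumes a non-empty DataFrame: max over zero rows is null and getInt would throw.
  def maxArraySize(df: DataFrame, arrayCol: String): Int =
    df.agg(max(size(col(arrayCol)))).head().getInt(0)

  // DDL schema with one STRING field per array position, e.g.
  // schemaFor(3) == "loc_1 STRING, loc_2 STRING, loc_3 STRING".
  def schemaFor(n: Int, prefix: String = "loc_"): String =
    (1 to n).map(i => s"$prefix$i STRING").mkString(", ")

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("max-array-size").master("local[*]").getOrCreate()
    import spark.implicits._
    val df = Seq(
      Seq("USA", "China", "India", "UK"),
      Seq("Nepal", "China", "India", "UK", "Japan")
    ).toDF("location")

    val n = maxArraySize(df, "location")
    println(s"max size = $n")
    println(schemaFor(n))
    spark.stop()
  }
}
```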

Step 3: Convert Array to Columns

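Using a schema built from the maximum size (one STRING field per position, named loc_1, loc_2, and so on), apply the from_csv function to expand the array elements into separate columns. Because from_csv parses CSV text, the array elements must first be joined into a single comma-separated string.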

[[See Video to Reveal this Text or Code Snippet]]
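Here is a minimal sketch of this step, assuming Spark 3.0+ (where `from_csv` is available) and the same `location` column. Because `from_csv` parses a string, the array is first joined with `concat_ws`. The Step 2 schema is rebuilt inline so the block runs on its own. `ArrayToColumns` and `toColumns` are hypothetical names.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, concat_ws, from_csv, max, size}
import org.apache.spark.sql.types.StructType

object ArrayToColumns {
  // Spreads an array<string> column into loc_1 ... loc_N using from_csv.
  def toColumns(df: DataFrame, arrayCol: String): DataFrame = {
    // Step 2: the longest array decides how many columns to create.
    val n = df.agg(max(size(col(arrayCol)))).head().getInt(0)
    val schema = StructType.fromDDL((1 to n).map(i => s"loc_$i STRING").mkString(", "))

    // from_csv parses a string, so join the elements with commas first.
    // Shorter arrays yield fewer tokens; in the default PERMISSIVE mode the
    // missing trailing fields come back as null.
    val asCsv = concat_ws(",", col(arrayCol))
    df.withColumn("parsed", from_csv(asCsv, schema, Map.empty[String, String]))
      .select(col(arrayCol), col("parsed.*"))
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("array-to-columns").master("local[*]").getOrCreate()
    import spark.implicits._
    val df = Seq(
      Seq("USA", "China", "India", "UK"),
      Seq("Nepal", "China", "India", "UK", "Japan")
    ).toDF("location")
    toColumns(df, "location").show(truncate = false)
    spark.stop()
  }
}
```

Joining with commas assumes that no element contains a comma or a quote character. CSV parsing also turns empty strings into null. If the data can contain such values, reading each position directly from the array avoids the CSV round-trip.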

Expected Output

Running the code above produces a table like this:

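+-------------------------+-----+-----+-----+-----+
|location                 |loc_1|loc_2|loc_3|loc_4|
+-------------------------+-----+-----+-----+-----+
|[China, India, UK]       |China|India|UK   |NULL |
|[China, India, UK, Japan]|China|India|UK   |Japan|
+-------------------------+-----+-----+-----+-----+

Conclusion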

By following these steps, you can spread an array column across a dynamic number of columns on Databricks, turning nested JSON data into a flat table. The approach is not limited to location data. It applies to any array column whose elements should become separate positional columns.
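The original question asked for a Databricks SQL query. A static SQL query needs its column list fixed up front. One option is therefore to compute the maximum size first and then generate the SELECT list as text. Here is a minimal sketch, assuming Spark 3.3+ for `try_element_at`, which returns NULL for out-of-range positions even when ANSI mode is enabled. The temporary view name `locations`, the `SqlArrayToColumns` object, and its `run` helper are hypothetical.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object SqlArrayToColumns {
  // Generates and runs a Spark SQL query with one column per array position.
  // "locations" is a hypothetical temp view name; try_element_at needs Spark 3.3+.
  def run(spark: SparkSession, df: DataFrame): DataFrame = {
    df.createOrReplaceTempView("locations")

    // 1) Find the longest array.
    val n = spark.sql("SELECT max(size(location)) FROM locations").head().getInt(0)

    // 2) Build the column list: try_element_at uses 1-based positions and
    //    returns NULL when the position is past the end of the array.
    val cols = (1 to n).map(i => s"try_element_at(location, $i) AS loc_$i").mkString(", ")

    // 3) Run the generated query.
    spark.sql(s"SELECT location, $cols FROM locations")
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().appName("sql-array-to-columns").master("local[*]").getOrCreate()
    import spark.implicits._
    val df = Seq(
      Seq("USA", "China", "India", "UK"),
      Seq("Nepal", "China", "India", "UK", "Japan")
    ).toDF("location")
    run(spark, df).show(truncate = false)
    spark.stop()
  }
}
```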

Try these steps in your own Databricks environment the next time you need to flatten array data.
