14. explode(), split(), array() & array_contains() functions in PySpark | #PySpark #azuredatabricks

In this video, I explain how to use the explode(), split(), array(), and array_contains() functions with ArrayType columns in PySpark.

Link for PySpark Playlist:

Link for PySpark Real Time Scenarios Playlist:

Link for Azure Synapse Analytics Playlist:

Link to Azure Synapse Real Time scenarios Playlist:

Link for Azure Databricks Playlist:

Link for Azure Functions Playlist:

Link for Azure Basics Playlist:

Link for Azure Data Factory Playlist:

Link for Azure Data Factory Real Time Scenarios Playlist:

Link for Azure Logic Apps Playlist:

#PySpark #Spark #databricks #azuresynapse #synapse #notebook #azuredatabricks #PySparkcode #dataframe #WafaStudies #maheer #azure

79. Databricks | Pyspark | Split Array Elements into Separate Columns

NumPy - #14 - Array Splitting

Split Array Largest Sum - Leetcode 410 - Python

How to Split a String and get Last Array Element in JavaScript

Split Array in Parts

javascript - Split array into chunks

Method split | Convert String to Array.

Split Comma-separated String into Array in Java #shorts #java #programming #coding

#task14 Use split method an array numpy in python | Python Tutorial For Beginners #Shaheencodingzone

Interesting Behaviour of split ( ) function and Array - Java Program

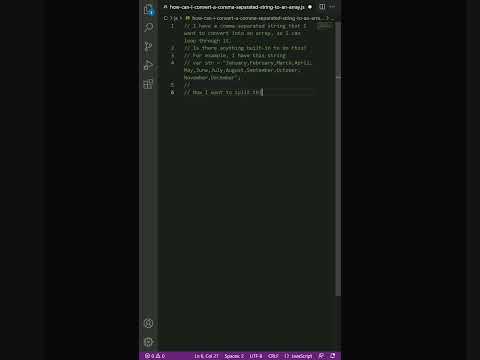

javascript - How can I convert a comma-separated string to an array?

How to Split String into String Array using Character in C#

How to Split String into Array #java

convert array into string #javascript #shorts

Program to split NUMPY ARRAY into 4 equal SUBARRAY in Python 😎🆒😎😎

Functional Programming Split a String into an Array Using the split Method freeCodeCamp19/24

SPLITTING ARRAY (SPLIT( ),ARRAY_SPLIT( ),VSPLIT( ),HSPLIT( )) IN NUMPY - PYTHON PROGRAMMING

Array : Split String in clojure and then print

Find Largest String from an Array

Java - Split a string into an array | Arrays in Java

Split a String into an Array Using the split Method | Functional Programming | freeCodeCamp

Split a String into an array in Swift?

webMethods Java Service to split string into array of characters
