filmov

tv

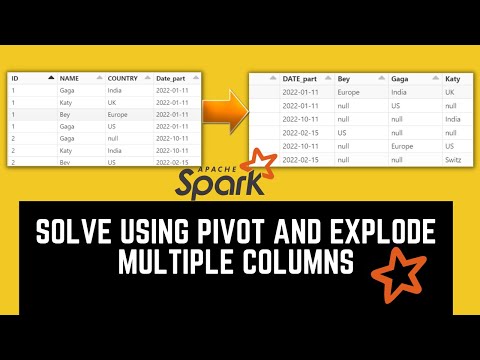

How to Explode Column Values into Multiple Columns in PySpark

Показать описание

Learn how to efficiently unpivot and explode column values into multiple columns in PySpark using DataFrame transformations.

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Explode column values into multiple columns in pyspark

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

A Guide to Exploding Column Values in PySpark

Working with data in PySpark can sometimes present challenges, especially when dealing with nested data structures. One common problem is when you need to explode column values from an array into multiple separate columns. In this article, we’ll walk through a straightforward solution for achieving this, using a practical example.

The Problem: DataFrame with Nested Arrays

Imagine you have a PySpark DataFrame structured as follows:

[[See Video to Reveal this Text or Code Snippet]]

In this DataFrame, the substitutes column contains nested arrays where each entry represents a substitute item’s identifier and its corresponding value. Your goal is to transform this structure into multiple columns, creating separate columns for each substitute.

The Solution: Exploding and Pivoting the DataFrame

To unpivot and explode the array effectively, you can follow these steps:

Step 1: Create an Identifier Column

First, you need a unique identifier to help distinguish each row. You can utilize the monotonically_increasing_id() function in PySpark to generate this ID:

[[See Video to Reveal this Text or Code Snippet]]

This will add a new column, id, that uniquely identifies each row in the DataFrame.

Step 2: Explode the substitutes Column

Now, we’ll explode the substitutes column. This operation will flatten the nested arrays into multiple rows, allowing us to work with each substitute individually.

[[See Video to Reveal this Text or Code Snippet]]

Step 3: Extract Column Values

Next, from the exploded data, extract the two parts of each substitute into new columns: one for the dynamic column name (substitutes_1, substitutes_2, etc.) and one for the values.

[[See Video to Reveal this Text or Code Snippet]]

Step 4: Group and Pivot the DataFrame

With the extracted columns, you can now group by the id and pivot on the new column names, thus aggregating the values:

[[See Video to Reveal this Text or Code Snippet]]

Step 5: Drop the ID Column

Finally, since the ID column served its purpose, you can drop it from the final DataFrame to tidy up your data:

[[See Video to Reveal this Text or Code Snippet]]

Final Result

By following these steps, you will obtain a DataFrame structured as desired:

[[See Video to Reveal this Text or Code Snippet]]

Conclusion

By following the outlined steps, you can efficiently explode column values in PySpark, transforming nested data structures into a more manageable and analytically useful format. This technique not only enhances data processing in PySpark but also enables better data analysis and reporting.

Feel free to experiment with this approach on your own DataFrames, and enhance your data manipulation skills in PySpark!

---

Visit these links for original content and any more details, such as alternate solutions, latest updates/developments on topic, comments, revision history etc. For example, the original title of the Question was: Explode column values into multiple columns in pyspark

If anything seems off to you, please feel free to write me at vlogize [AT] gmail [DOT] com.

---

A Guide to Exploding Column Values in PySpark

Working with data in PySpark can sometimes present challenges, especially when dealing with nested data structures. One common problem is when you need to explode column values from an array into multiple separate columns. In this article, we’ll walk through a straightforward solution for achieving this, using a practical example.

The Problem: DataFrame with Nested Arrays

Imagine you have a PySpark DataFrame structured as follows:

[[See Video to Reveal this Text or Code Snippet]]

In this DataFrame, the substitutes column contains nested arrays where each entry represents a substitute item’s identifier and its corresponding value. Your goal is to transform this structure into multiple columns, creating separate columns for each substitute.

The Solution: Exploding and Pivoting the DataFrame

To unpivot and explode the array effectively, you can follow these steps:

Step 1: Create an Identifier Column

First, you need a unique identifier to help distinguish each row. You can utilize the monotonically_increasing_id() function in PySpark to generate this ID:

[[See Video to Reveal this Text or Code Snippet]]

This will add a new column, id, that uniquely identifies each row in the DataFrame.

Step 2: Explode the substitutes Column

Now, we’ll explode the substitutes column. This operation will flatten the nested arrays into multiple rows, allowing us to work with each substitute individually.

[[See Video to Reveal this Text or Code Snippet]]

Step 3: Extract Column Values

Next, from the exploded data, extract the two parts of each substitute into new columns: one for the dynamic column name (substitutes_1, substitutes_2, etc.) and one for the values.

[[See Video to Reveal this Text or Code Snippet]]

Step 4: Group and Pivot the DataFrame

With the extracted columns, you can now group by the id and pivot on the new column names, thus aggregating the values:

[[See Video to Reveal this Text or Code Snippet]]

Step 5: Drop the ID Column

Finally, since the ID column served its purpose, you can drop it from the final DataFrame to tidy up your data:

[[See Video to Reveal this Text or Code Snippet]]

Final Result

By following these steps, you will obtain a DataFrame structured as desired:

[[See Video to Reveal this Text or Code Snippet]]

Conclusion

By following the outlined steps, you can efficiently explode column values in PySpark, transforming nested data structures into a more manageable and analytically useful format. This technique not only enhances data processing in PySpark but also enables better data analysis and reporting.

Feel free to experiment with this approach on your own DataFrames, and enhance your data manipulation skills in PySpark!

0:07:00

0:07:00

0:00:37

0:00:37

0:02:53

0:02:53

0:02:30

0:02:30

0:01:41

0:01:41

0:18:03

0:18:03

0:03:37

0:03:37

0:01:43

0:01:43

![[Part 2] He](https://i.ytimg.com/vi/pb2ram1kTLY/hqdefault.jpg) 8:34:23

8:34:23

0:01:50

0:01:50

0:00:23

0:00:23

0:04:57

0:04:57

0:01:58

0:01:58

0:01:40

0:01:40

0:12:30

0:12:30

0:01:38

0:01:38

0:00:31

0:00:31

0:14:50

0:14:50

0:05:40

0:05:40

0:01:34

0:01:34

0:01:34

0:01:34

0:01:46

0:01:46

0:01:20

0:01:20

0:10:58

0:10:58